
If you ask most CIOs or CISOs today, “What AI tools are employees using across the company?” you’ll likely get a pause.

In fact, according to McKinsey, 70%+ of companies lack full visibility into AI usage, as employees rapidly adopt tools without formal approval or tracking.

That means AI tool discovery, AI usage tracking, and AI governance have become mission-critical, not “nice to have.”

Let’s break down why companies are struggling, the risks involved, and how enterprises can finally regain visibility.

TL;DR

- 70% of companies don’t know which AI tools employees are using.

- AI adoption is instant and invisible, fueling Shadow AI risk.

- Lack of visibility leads to compliance gaps, security exposure, and wasted spend.

- Enterprises need automated discovery, centralized inventories, and usage monitoring to stay in control.

- Platforms like CloudEagle.ai make AI oversight real by detecting tools, tracking access, scoring risk, and preparing for audits.

1. What Are AI Tools, and Why Do Companies Struggle to Identify Them?

AI tools are software applications, algorithms, or platforms built to perform tasks traditionally requiring human intelligence, such as reasoning, learning, problem-solving, perception, and language understanding.

They leverage artificial intelligence and machine learning to analyze data, automate workflows, generate insights, and support faster, more informed decision-making.

The problem?

AI is no longer a single platform; it’s embedded everywhere, often without IT even knowing.

Companies struggle to identify AI tools because:

- Employees adopt them instantly (no procurement cycle)

- Many AI apps don’t appear in SSO logs

- AI features are quietly added to existing SaaS apps

- Traditional SaaS tracking tools don’t detect AI-specific risks

As a result, most organizations have Shadow AI: AI tools used without monitoring or approval.

2. The Challenge of Identifying AI Tools in Enterprises

Identifying AI tools inside enterprises is a layered challenge that spans technical, organizational, and ethical concerns.

The biggest obstacle is volume. There are countless AI tools on the market, and working out which ones fit business needs, integrate with existing systems, and comply with company policy adds significant complexity.

a. Rapid self-service adoption

AI tools can be adopted in seconds, with no procurement barrier and no training required, making experimentation effortless but invisible.

- Employees start using AI to automate tasks

- Teams experiment with tools outside IT visibility

- Credit card signups bypass procurement altogether

- No centralized tracking

This isn’t a fringe problem; studies show nearly 60% of AI/SaaS tools operate outside IT oversight, meaning teams are deploying technology that leadership can’t see or govern.

b. No centralized tracking

Even when companies try to monitor apps, traditional systems miss AI usage. SSO logs, asset lists, and vendor records fail to capture browser-based tools, embedded AI features, or freemium signups.

Without a unified AI visibility platform, blind spots grow.

- SSO logs don’t show AI usage

- Browser extensions hide AI integrations

- AI features inside SaaS tools go undetected

When usage isn’t tracked, risk balloons silently in the background.

c. Teams experimenting without approvals

Because AI feels exploratory and harmless, departments adopt tools without security and compliance oversight.

What begins as testing turns into an operational dependency, with zero safeguards.

- Marketing tries new AI content tools

- Engineering plays with AI coding assistants

- HR uses AI resume analyzers

The result is a silent explosion of unmanaged AI that grows beyond IT's scope.

3. Risks of Not Knowing What AI Tools Are Used

When AI usage is invisible, risks multiply quickly, from data exposure and compliance violations to wasted budgets and breach pathways.

a. Compliance and data exposure

Sending sensitive data into unvetted AI systems can instantly put an organization in violation of major regulations and compliance frameworks, including:

- SOC2

- GDPR

- HIPAA

- PCI DSS

- ISO 27001

Gartner warns that AI adopted without governance creates unprecedented data exposure risk.

b. Security vulnerabilities

Shadow AI becomes an attack surface that security cannot manage. Unknown systems process confidential data without enforcement or auditability.

- No MFA or SSO enforcement

- Unknown data retention policies

- No audit logs

- No vendor risk assessments

This is how breaches happen: in tools no one knew existed.

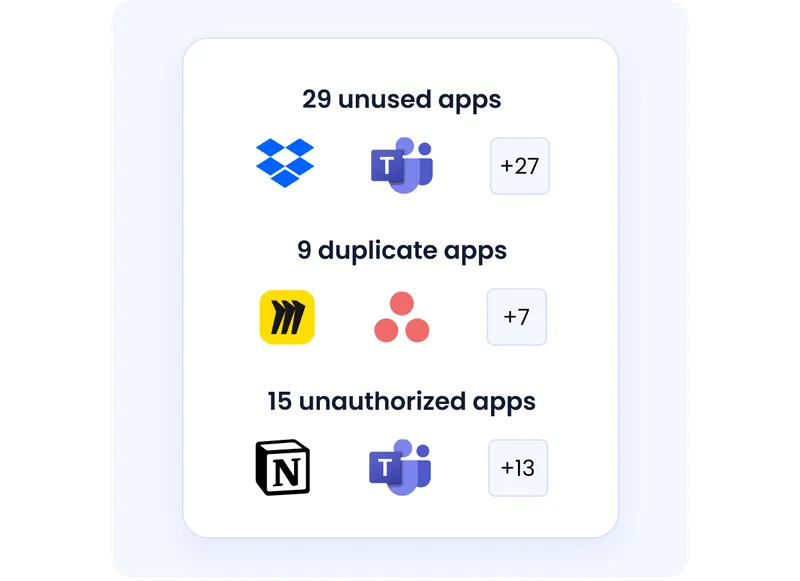

c. Redundant licenses & financial waste

Unmonitored AI leads to unchecked spending as departments independently subscribe to overlapping tools.

- Duplicate AI tools across functions

- Auto-renewals no one tracks

- Unused AI seats or credits

AI visibility projects consistently reveal shockingly high waste: 40–60% of AI spend goes to duplicate or under-used apps, often ones no one realized the company was paying for.

4. How Enterprises Can Gain Visibility into AI Tools

Even without an AI visibility platform, organizations can begin to regain control by modernizing discovery and monitoring processes.

a. Automated AI discovery

Manual audits fail because AI evolves too fast. Continuous discovery is the only scalable path to visibility.

- Browser-level discovery

- Network and OAuth monitoring

- Expense and invoice parsing for AI vendors

- Continuous scanning for new AI tools

If AI adoption is instant, discovery must be continuous.
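To make continuous discovery concrete, here is a minimal sketch that assumes you can export domain-level events (from a browser extension, web proxy, or OAuth grant log) as (user, domain) pairs. The `KNOWN_AI_DOMAINS` catalog and the `match_ai_tool` and `discover_new_ai_tools` functions are hypothetical names for illustration, not any product's API; a real catalog would be far larger and updated constantly.

```python
from collections import defaultdict
from typing import Iterable, Optional

# Hypothetical catalog of AI domains; a real one would be much larger and
# refreshed continuously as new tools launch.
KNOWN_AI_DOMAINS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "midjourney.com": "Midjourney",
    "perplexity.ai": "Perplexity",
    "chat.deepseek.com": "DeepSeek",
}


def match_ai_tool(domain: str) -> Optional[str]:
    """Map a domain (or any subdomain of a catalog entry) to an AI tool name."""
    domain = domain.lower().rstrip(".")
    for known, tool in KNOWN_AI_DOMAINS.items():
        if domain == known or domain.endswith("." + known):
            return tool
    return None


def discover_new_ai_tools(events: Iterable[tuple[str, str]],
                          inventory: set[str]) -> dict[str, set[str]]:
    """Return {tool: users} for AI tools seen in the logs but missing from the inventory.

    `events` are (user, domain) pairs exported from browser, proxy, or OAuth logs.
    """
    found: dict[str, set[str]] = defaultdict(set)
    for user, domain in events:
        tool = match_ai_tool(domain)
        if tool is not None and tool not in inventory:
            found[tool].add(user)
    return dict(found)


if __name__ == "__main__":
    events = [("ana", "chatgpt.com"), ("raj", "www.perplexity.ai"), ("ana", "example.com")]
    print(discover_new_ai_tools(events, inventory={"ChatGPT"}))
    # {'Perplexity': {'raj'}}
```

Running this on every new batch of log events, and adding tools to the inventory once they have been reviewed, is what turns a one-off audit into continuous discovery.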

b. Centralized inventory management

Once discovered, tools must be tracked in a single platform, or governance collapses into spreadsheets.

- AI tool categories (generative AI, copilots, niche LLMs)

- Ownership and vendor metadata

- User-level access

- AI feature-level visibility inside SaaS apps

Without centralization, oversight remains manual, reactive, and incomplete.
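A centralized inventory mostly comes down to one consistent record per tool. The sketch below shows one hypothetical shape for that record, with field names chosen to mirror the list above; it is an illustration, not a standard schema.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class AICategory(Enum):
    GENERATIVE_AI = "generative AI"
    COPILOT = "copilot"
    NICHE_LLM = "niche LLM"
    EMBEDDED_FEATURE = "AI feature inside a SaaS app"


@dataclass
class AIToolRecord:
    """One inventory row; field names are illustrative, not a standard schema."""
    name: str
    category: AICategory
    vendor: str
    owner: str  # accountable business or IT owner ("" if nobody has claimed it)
    users: set[str] = field(default_factory=set)  # user-level access
    ai_features: list[str] = field(default_factory=list)  # feature-level visibility
    approved: bool = False


class AIInventory:
    """Single source of truth for AI tools, keyed by tool name."""

    def __init__(self) -> None:
        self._tools: dict[str, AIToolRecord] = {}

    def upsert(self, record: AIToolRecord) -> None:
        existing = self._tools.get(record.name)
        if existing is None:
            self._tools[record.name] = record
            return
        # Merge newly observed users and features rather than overwriting them.
        existing.users |= record.users
        existing.ai_features = sorted(set(existing.ai_features) | set(record.ai_features))

    def get(self, name: str) -> Optional[AIToolRecord]:
        return self._tools.get(name)

    def unowned(self) -> list[str]:
        """Tools with no accountable owner: usually the first gap to close."""
        return sorted(n for n, r in self._tools.items() if not r.owner)

    def unapproved(self) -> list[str]:
        return sorted(n for n, r in self._tools.items() if not r.approved)
```

Merging on upsert matters because discovery sources report the same tool repeatedly; overwriting would silently drop users seen by an earlier scan.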

c. Monitoring usage & spend impact

Usage-based AI pricing (seats, credits, API calls) makes spending unpredictable, and tracking consumption prevents budget leaks and portfolio sprawl.

- Track seat-level consumption

- Identify unused AI licenses

- Detect credit exhaustion patterns

- Benchmark spend vs usage

Monitoring usage turns Shadow AI cost into measurable, manageable spend.
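As a rough illustration of the "unused licenses" and "spend vs usage" checks, the sketch below flags seats with no recent activity and computes cost per active seat for each tool. The 30-day inactivity threshold, the flat monthly seat price, and the `Seat` record are assumptions for the example; credit- or token-based plans would need their own consumption metric.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class Seat:
    tool: str
    user: str
    monthly_cost: float
    last_active: Optional[date]  # None means the seat has never been used


def _is_idle(seat: Seat, cutoff: date) -> bool:
    return seat.last_active is None or seat.last_active < cutoff


def unused_seats(seats: list[Seat], today: date, inactive_days: int = 30) -> list[Seat]:
    """Seats with no activity in the last `inactive_days` days, or never used."""
    cutoff = today - timedelta(days=inactive_days)
    return [s for s in seats if _is_idle(s, cutoff)]


def spend_vs_usage(seats: list[Seat], today: date, inactive_days: int = 30) -> dict[str, dict]:
    """Per tool: monthly spend, seat count, active seats, and cost per active seat."""
    cutoff = today - timedelta(days=inactive_days)
    report: dict[str, dict] = {}
    for s in seats:
        r = report.setdefault(s.tool, {"spend": 0.0, "seats": 0, "active": 0})
        r["spend"] += s.monthly_cost
        r["seats"] += 1
        if not _is_idle(s, cutoff):
            r["active"] += 1
    for r in report.values():
        # inf flags a tool that is paid for but has no active users at all.
        r["cost_per_active"] = r["spend"] / r["active"] if r["active"] else float("inf")
    return report
```

Cost per active seat is a more honest benchmark than list price: a tool that is cheap per seat but mostly idle can cost more per real user than a pricier one everyone uses.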

5. How CloudEagle.ai Helps Manage and Govern AI Tools

CloudEagle.ai provides a complete governance framework for the fast-growing sprawl of AI tools (ChatGPT, DeepSeek, Midjourney, Jasper, etc.), ensuring IT, Security, and Procurement teams maintain visibility, compliance, and cost control, without slowing innovation.

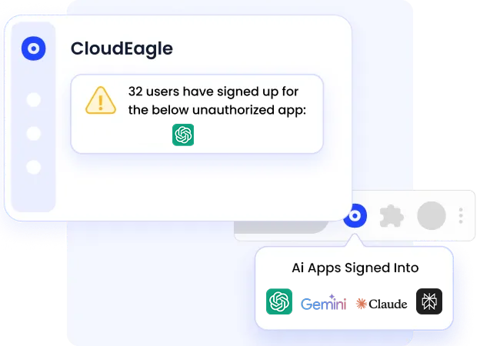

1. AI Tool Discovery — Visibility into Every AI App

Most AI tools operate completely outside SSO and IT oversight.

CloudEagle.ai identifies all AI tools employees log into, whether free, paid, or used personally.

How CloudEagle discovers AI tools:

- Login data (Google Workspace, Microsoft, Okta)

- Browser activity + extensions

- Credit card spend for AI subscriptions

- Cross-verification with finance systems

According to the IGA Report, 60% of AI/SaaS apps operate outside IT visibility today, increasing risk and overspend.
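The value of combining login data with spend data is easiest to see as a set reconciliation. The sketch below is a hypothetical illustration of that cross-verification idea, not CloudEagle.ai's implementation; it assumes app names have already been normalized across identity-provider login exports and card or finance transactions.

```python
def reconcile(login_apps: set[str],
              paid_apps: set[str],
              approved_apps: set[str]) -> dict[str, set[str]]:
    """Cross-check apps people log into against apps the company pays for."""
    return {
        # Used but never paid for by the company: free tiers or personal accounts.
        "used_not_paid": login_apps - paid_apps,
        # Paid for but no logins seen: possible shelfware, or a login source
        # that is not being collected yet.
        "paid_not_used": paid_apps - login_apps,
        # Anything observed in either source that nobody has approved.
        "unapproved": (login_apps | paid_apps) - approved_apps,
    }


if __name__ == "__main__":
    print(reconcile({"ChatGPT", "Perplexity"}, {"ChatGPT", "Jasper"}, {"ChatGPT"}))
```

Neither source alone catches everything: free and personal-account usage never shows up in spend, and paid tools used outside SSO never show up in login logs.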

Why it matters

- Detect unauthorized use of ChatGPT, DeepSeek, Midjourney, Perplexity, and more

- Flag AI-powered Chrome extensions

- Surface AI tools before they become enterprise-wide risks

2. Risk Scoring & Shadow AI Governance

CloudEagle.ai builds a Shadow AI Risk Scorecard to classify risk by:

- Data sensitivity

- Access type (admin, user, API)

- Department

- Frequency of usage

- Integration with other systems

This helps IT decide which tools to:

- Approve

- Block

- Require DLP review

- Route through procurement

70% of CIOs flagged AI as a top security risk in the IGA report.
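A scorecard like this can be approximated with a weighted sum over the five dimensions listed above, mapped to the four possible decisions. The factor scales, weights, thresholds, and the hard "block" rule below are all invented for illustration; they are not CloudEagle.ai's scoring model.

```python
from dataclasses import dataclass

# Hypothetical scales and weights; every value here is an assumption.
ACCESS_RISK = {"user": 1, "api": 2, "admin": 3}
DEPARTMENT_RISK = {"marketing": 1, "engineering": 2, "hr": 3, "finance": 3}
WEIGHTS = {"data": 0.35, "access": 0.2, "department": 0.15,
           "frequency": 0.15, "integrations": 0.15}


@dataclass
class AIToolUsage:
    data_sensitivity: int    # 0 = public data ... 3 = regulated (PII, PHI, card data)
    access_type: str         # "user", "api", or "admin"
    department: str
    weekly_active_days: int  # 0-7, how often the tool is used
    integrations: int        # number of connected systems


def risk_score(u: AIToolUsage) -> float:
    """Weighted risk score normalized to 0-100."""
    factors = {
        "data": u.data_sensitivity / 3,
        "access": ACCESS_RISK[u.access_type] / 3,
        "department": DEPARTMENT_RISK.get(u.department, 2) / 3,  # unknown = medium
        "frequency": min(u.weekly_active_days, 7) / 7,
        "integrations": min(u.integrations, 5) / 5,
    }
    return round(100 * sum(WEIGHTS[k] * v for k, v in factors.items()), 1)


def decide(u: AIToolUsage) -> str:
    """Map a usage profile to approve / block / DLP review / procurement."""
    if u.data_sensitivity == 3 and u.access_type == "admin":
        return "block"  # hard rule, applied regardless of the weighted score
    score = risk_score(u)
    if score >= 70:
        return "require DLP review"
    if score >= 40:
        return "route through procurement"
    return "approve"
```

Keeping a hard rule alongside the weighted score is a deliberate choice: a sum can dilute one critical factor (regulated data with admin access) with several harmless ones.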

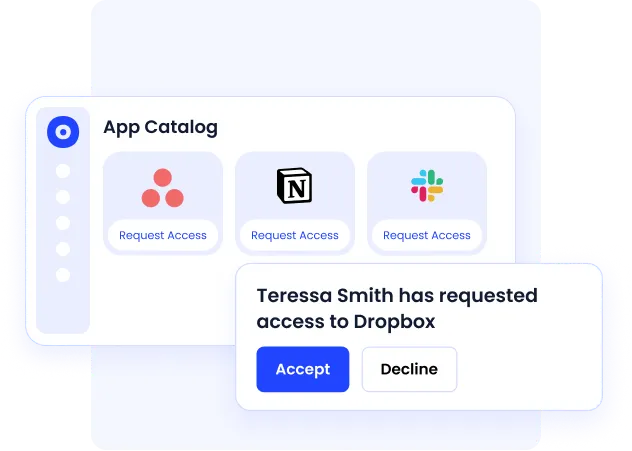

3. Centralized App Catalog with AI-Specific Controls

CloudEagle’s Employee App Catalog acts as a controlled gateway for employees requesting AI tools.

Features include:

- Role-based visibility (employees see only the AI tools approved for their job function)

- Auto-approval or multi-stage approval based on sensitivity

- Required security questionnaires for high-risk AI apps

- IT, Security, and Legal workflows integrated

This replaces ad-hoc Slack requests and unmanaged free trials.
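The catalog behavior described above, role-filtered listings plus approval chains that lengthen with sensitivity, can be sketched in a few lines. The `CatalogEntry` type, the sensitivity tiers, and the approver chains are hypothetical examples, not CloudEagle.ai's configuration.

```python
from dataclasses import dataclass


@dataclass
class CatalogEntry:
    tool: str
    allowed_roles: set[str]
    sensitivity: str  # "low", "medium", or "high"


# Hypothetical approval chains: low-sensitivity tools are auto-approved.
APPROVAL_CHAINS = {
    "low": [],
    "medium": ["IT"],
    "high": ["IT", "Security", "Legal"],
}


def visible_tools(catalog: list[CatalogEntry], role: str) -> list[str]:
    """Role-based visibility: only tools approved for this job function."""
    return sorted(e.tool for e in catalog if role in e.allowed_roles)


def request_tool(catalog: list[CatalogEntry], role: str, tool: str) -> dict:
    """Route a request to auto-approval or a multi-stage approval chain."""
    entry = next((e for e in catalog if e.tool == tool), None)
    if entry is None or role not in entry.allowed_roles:
        return {"tool": tool, "status": "not available for role"}
    chain = list(APPROVAL_CHAINS[entry.sensitivity])  # copy so callers can't mutate config
    return {
        "tool": tool,
        "status": "pending" if chain else "auto-approved",
        "approvers": chain,
        # High-risk apps also require a completed security questionnaire.
        "questionnaire_required": entry.sensitivity == "high",
    }
```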

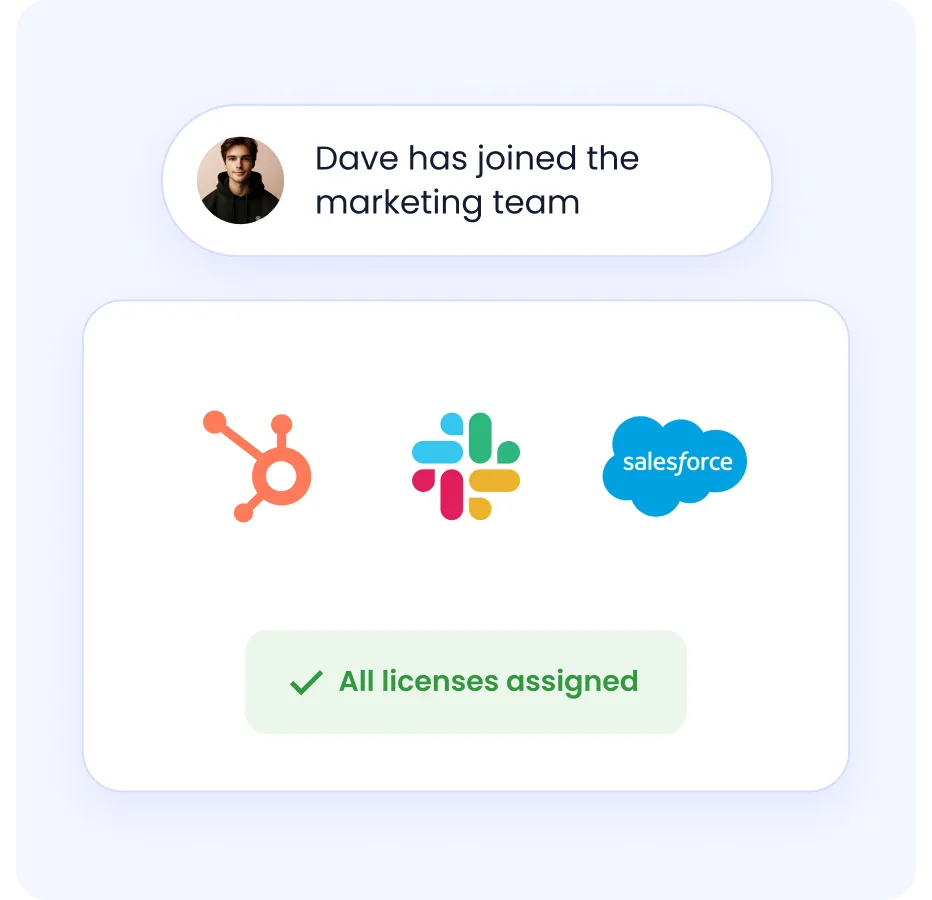

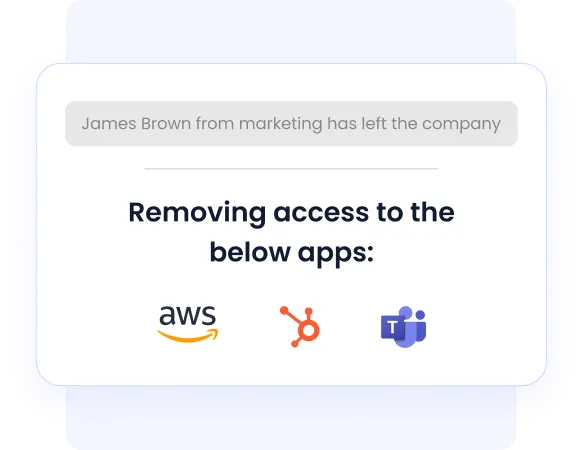

4. Automated Provisioning & Deprovisioning for AI Apps

Even AI tools that sit outside the identity provider (IdP) can be provisioned or deprovisioned automatically through CloudEagle.ai.

CloudEagle.ai manages:

- Admin vs user access

- Feature-level access

- Removal of stale access

48% of ex-employees retain access to business apps (AI included), per the IGA report.

CloudEagle.ai solves this via:

- HRIS + ITSM triggers

- Role-based app bundles

- Auto-offboarding across all tools

- Deprovisioning logs for audits
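Event-driven offboarding follows a simple pattern: an HRIS termination event arrives, access to every tool the user holds is revoked, and each action is logged for auditors. In the sketch below, the event shape, the `revoke` callable, and the in-memory access map are hypothetical stand-ins; in practice each tool needs its own connector (SCIM, a vendor admin API, or an ITSM ticket when neither exists).

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable


@dataclass
class DeprovisionLog:
    user: str
    tool: str
    status: str  # "revoked" or "failed: <reason>"
    at: datetime


def offboard(user: str,
             access_map: dict[str, set[str]],
             revoke: Callable[[str, str], None],
             log: list[DeprovisionLog]) -> list[str]:
    """Revoke `user` from every tool; return tools that still need manual follow-up."""
    pending = []
    for tool, users in access_map.items():
        if user not in users:
            continue
        try:
            revoke(tool, user)  # hypothetical per-tool connector call
            users.discard(user)
            status = "revoked"
        except Exception as exc:  # keep going so one failure doesn't block the rest
            pending.append(tool)
            status = f"failed: {exc}"
        log.append(DeprovisionLog(user, tool, status, datetime.now(timezone.utc)))
    return pending


def handle_hris_event(event: dict, access_map: dict[str, set[str]],
                      revoke: Callable[[str, str], None],
                      log: list[DeprovisionLog]) -> list[str]:
    """React only to termination events from the (hypothetical) HRIS feed."""
    if event.get("type") != "termination":
        return []
    return offboard(event["employee"], access_map, revoke, log)
```

Returning the failed tools, rather than raising on the first error, is what keeps ex-employee access from silently lingering: every gap ends up either revoked or on someone's follow-up list.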

5. Access Reviews — AI Tools Included

Most companies run access reviews quarterly or annually, and 95% of them do so manually.

CloudEagle.ai automates AI access reviews:

- Identify who has access to which AI tools

- Flag overprivileged or admin users

- Detect privilege creep

- Run continuous or event-based reviews

- Export SOC2-ready audit logs
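Two of these checks, overprivileged users and privilege creep, reduce to comparing what people actually hold against what their role should grant. The role baselines and data shapes below are assumptions made for the sketch, and the classification is deliberately simple: any excess admin grant is "overprivileged", any other excess grant is "privilege creep".

```python
# Hypothetical role baselines: the (tool, access level) pairs each role should hold.
ROLE_BASELINE = {
    "marketing": {("Jasper", "user"), ("ChatGPT", "user")},
    "engineering": {("ChatGPT", "user"), ("Copilot", "user")},
}


def review_access(user_role: dict[str, str],
                  grants: dict[str, set[tuple[str, str]]]) -> list[dict]:
    """Return one finding per entitlement that exceeds the user's role baseline."""
    findings = []
    for user, held in sorted(grants.items()):
        baseline = ROLE_BASELINE.get(user_role.get(user, ""), set())
        for tool, level in sorted(held - baseline):
            kind = "overprivileged" if level == "admin" else "privilege creep"
            findings.append({"user": user, "tool": tool, "level": level, "finding": kind})
    return findings
```

Because the check is a pure function of current grants, it can run on a schedule or on every grant change, which is the difference between continuous and event-based reviews.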

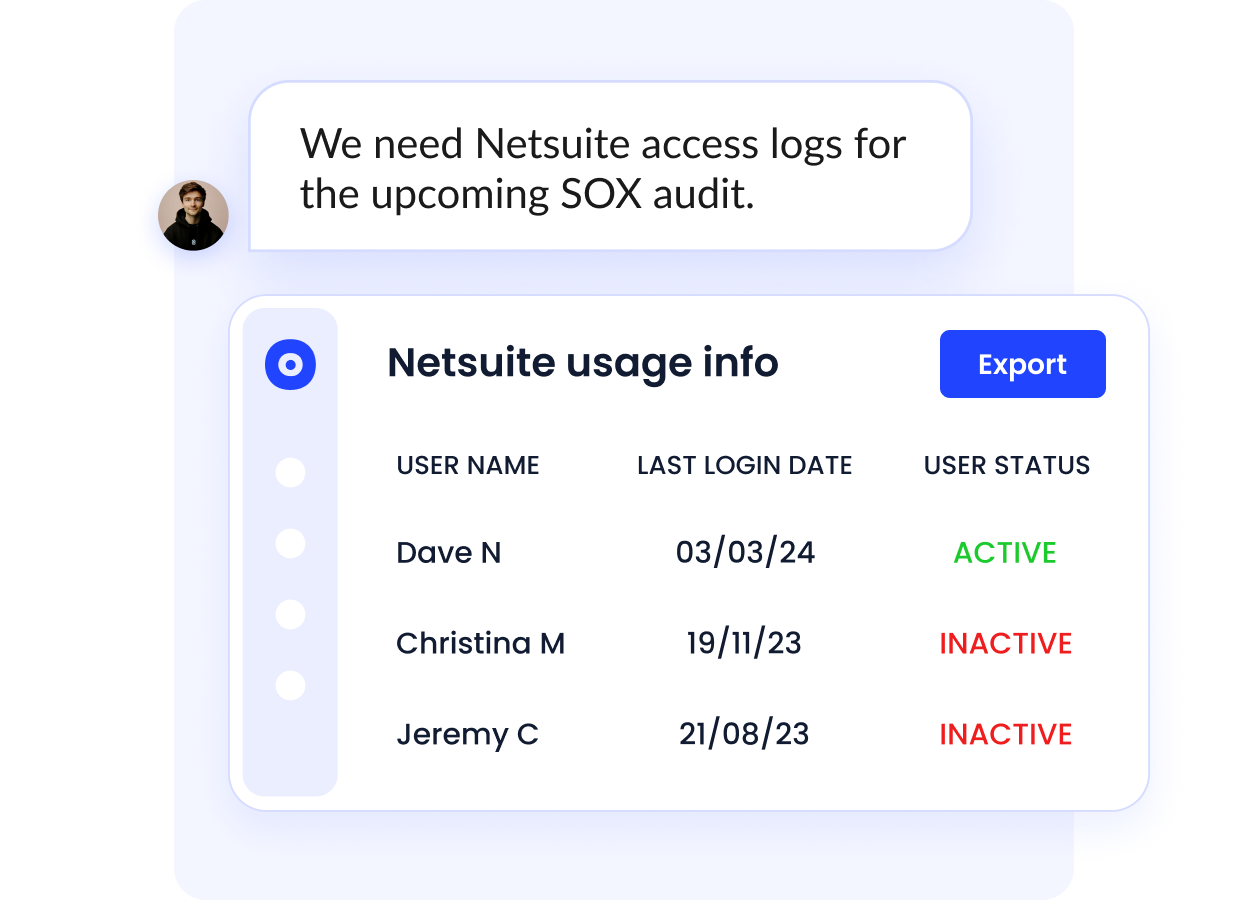

6. Audit Readiness & Compliance for AI Tools

CloudEagle.ai keeps organizations audit-ready by:

- Maintaining a full audit trail of who accessed which AI tools

- Showing when access was granted, elevated, or removed

- Providing SOC2-ready exports

- Linking every AI app to approvals and usage evidence

This addresses critical SOC2, ISO, SOX, and GDPR requirements.
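An audit trail of this kind is essentially an append-only event log that can be replayed to answer "who had access to which tool, and when?". The sketch below assumes a simple in-memory log with hypothetical action names (granted, elevated, removed) and an optional approval reference per event; a production trail would live in tamper-evident storage.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class AccessEvent:
    at: datetime
    user: str
    tool: str
    action: str                 # "granted", "elevated", or "removed"
    approval_id: Optional[str]  # link to the approval record, if any


class AuditTrail:
    ACTIONS = {"granted", "elevated", "removed"}

    def __init__(self) -> None:
        self._events: list[AccessEvent] = []

    def record(self, event: AccessEvent) -> None:
        if event.action not in self.ACTIONS:
            raise ValueError(f"unknown action: {event.action}")
        self._events.append(event)  # append-only: events are never edited or deleted

    def history(self, user: str, tool: str) -> list[AccessEvent]:
        return sorted((e for e in self._events if e.user == user and e.tool == tool),
                      key=lambda e: e.at)

    def had_access(self, user: str, tool: str, when: datetime) -> bool:
        """Replay events up to `when` to see whether access was active."""
        active = False
        for e in self.history(user, tool):
            if e.at > when:
                break
            active = e.action != "removed"
        return active

    def unapproved_grants(self) -> list[AccessEvent]:
        """Grants or elevations with no linked approval: gaps an auditor will ask about."""
        return [e for e in self._events if e.action != "removed" and e.approval_id is None]
```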

6. Conclusion

AI innovation is moving faster than IT and security teams can track. That’s why AI tool discovery, AI usage tracking, and centralized visibility are now essential, not optional. Without them, organizations expose themselves to data risk, compliance violations, and runaway costs.

Enterprises that proactively map their AI landscape build safer, more cost-efficient AI adoption strategies, and avoid becoming part of the 70% that can’t answer a simple question: What AI tools are we using?

CloudEagle.ai delivers this visibility by detecting Shadow AI, tracking access, governing approvals, and preparing enterprises for audits, making AI oversight operational instead of reactive. It gives CIOs, CISOs, Finance, Procurement, and Security one control plane to manage AI responsibly.

Book a free demo today to see how CloudEagle.ai brings clarity to AI chaos.

7. FAQ

1. How can companies track what AI tools employees are using?

By using automated AI discovery systems that scan network activity, OAuth connections, browser extensions, expenses, and user authentication logs to detect every AI tool in use.

2. What is an AI visibility platform, and how does it work?

An AI visibility platform centralizes discovery, usage tracking, access monitoring, and spend analysis by continuously scanning for AI tools across SaaS apps, devices, and user activity.

3. What is Shadow AI, and why is it dangerous?

Shadow AI refers to unapproved AI tools used without IT oversight. It’s dangerous because it exposes organizations to data leaks, compliance violations, and uncontrolled financial risk.

4. How does AI governance differ from SaaS governance?

SaaS governance manages access, spend, and app inventory. AI governance adds oversight for data exposure, model risk, AI outputs, compliance risk, and ethical use guidelines.

5. How can businesses monitor the actual usage of AI tools?

Through AI usage tracking systems that measure seat activity, API calls, credit consumption, user behavior, and cost impact across all AI tools—approved and unapproved.
