Govern AI Adoption Without Slowing Innovation

Bring visibility and control to AI usage across your organization. Detect shadow AI early, guide safe adoption, and ensure identity and access risks stay contained as AI usage scales.

Enable Safe AI Adoption Without Losing Control

Imagine: Employees start using AI tools faster than policies can keep up. Some use approved assistants, others experiment with public AI tools, and IT has no clear way to guide usage at the moment access happens.


Govern AI Use Before It Becomes a Risk

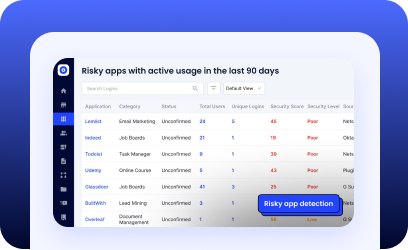

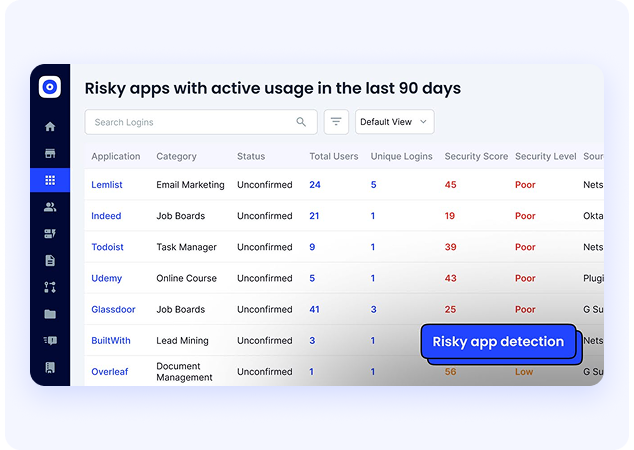

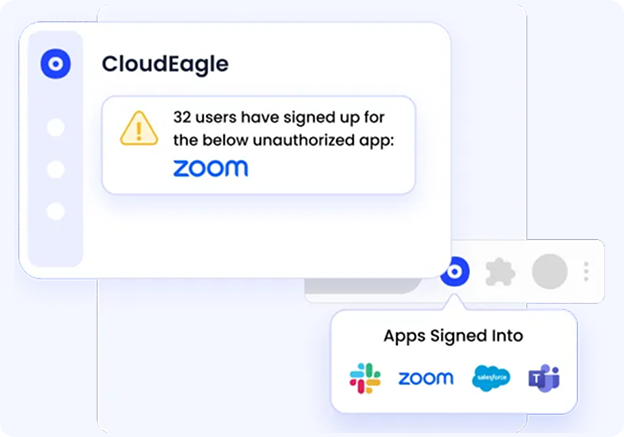

Discover Shadow AI Across the Enterprise

- Maintain an inventory of AI apps used with CloudEagle’s proprietary SaaSMap

- Gain visibility into AI usage by comparing browser plugin, Zscaler, and CrowdStrike logs

- See all AI apps in use across the enterprise in one place
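To make the discovery idea concrete, here is a minimal sketch of how usage records from several log sources could be merged into a single AI app inventory. It is an illustration under stated assumptions, not CloudEagle's implementation: the catalog `KNOWN_AI_DOMAINS`, the function `build_ai_inventory`, the source names, and the `(user, domain)` record shape are all hypothetical, and no real Zscaler or CrowdStrike log format or API is used.

```python
from collections import defaultdict

# Hypothetical catalog mapping domains to AI app names (placeholder values).
KNOWN_AI_DOMAINS = {
    "chat.example-ai.com": "ExampleChat",
    "assistant.example.org": "ExampleAssistant",
}


def build_ai_inventory(sources):
    """Merge (user, domain) records from several sources into one inventory.

    `sources` maps a source name (for example "browser_plugin") to an
    iterable of (user, domain) pairs. The shape is assumed for illustration.
    """
    inventory = defaultdict(lambda: {"users": set(), "seen_in": set()})
    for source_name, records in sources.items():
        for user, domain in records:
            app = KNOWN_AI_DOMAINS.get(domain.lower())
            if app is None:
                continue  # domain is not in the AI catalog, so skip it
            inventory[app]["users"].add(user)
            inventory[app]["seen_in"].add(source_name)
    return dict(inventory)


if __name__ == "__main__":
    # Made-up sample records, one list per hypothetical source.
    sample = {
        "browser_plugin": [("alice", "chat.example-ai.com")],
        "proxy": [("bob", "chat.example-ai.com")],
        "endpoint": [("alice", "assistant.example.org")],
    }
    for app, info in build_ai_inventory(sample).items():
        print(app, sorted(info["users"]), sorted(info["seen_in"]))
```

Recording which sources reported each app makes it easy to spot apps that only one source can see, which is the point of comparing the logs.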

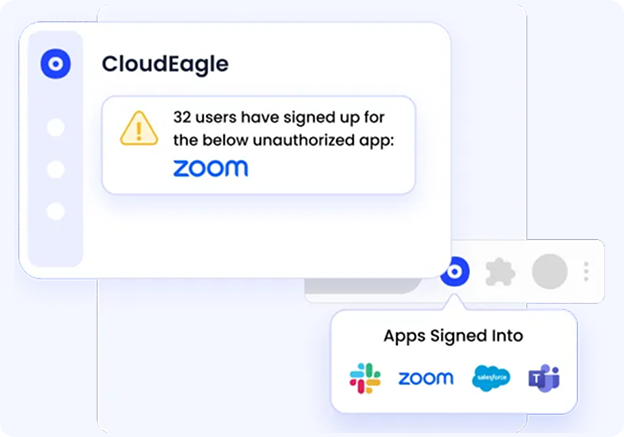

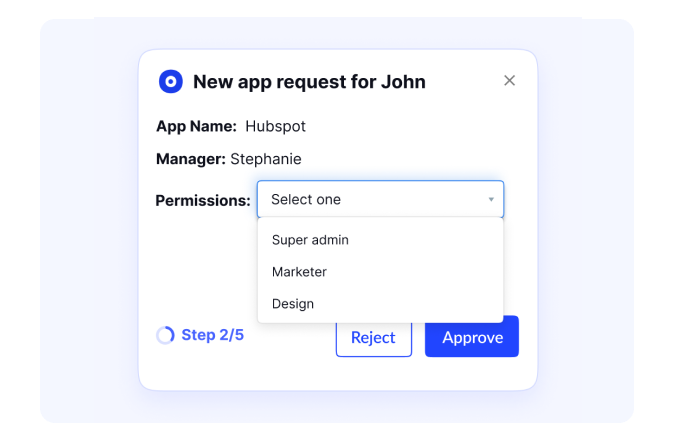

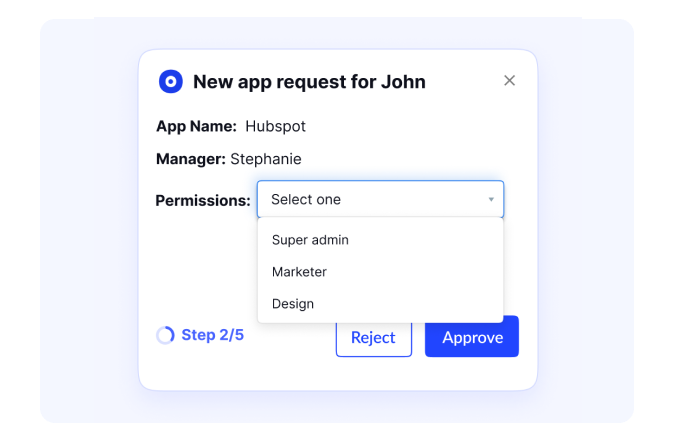

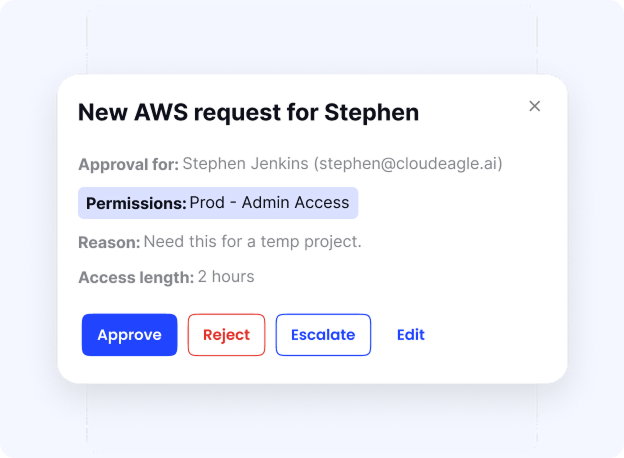

Control AI Usage Without Blocking Productivity

- Control AI usage by maintaining a list of approved AI and SaaS tools

- Prevent unsafe AI usage by displaying a flash page when unapproved AI apps are accessed

- Guide users to safe AI usage policies and the approved AI app they should log into
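The approved-list check described above can be sketched roughly as follows. This is a simplified illustration, not the product's actual logic: `APPROVED_AI_APPS`, `RECOMMENDED_APP`, `POLICY_URL`, `AccessGuidance`, and `guide_access` are hypothetical names, and the URL is a placeholder.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical configuration values, assumed for illustration only.
APPROVED_AI_APPS = {"ExampleAssistant"}
RECOMMENDED_APP = "ExampleAssistant"
POLICY_URL = "https://intranet.example.com/ai-policy"


@dataclass(frozen=True)
class AccessGuidance:
    show_notice: bool                    # whether to display a notice page
    message: Optional[str] = None        # text shown on the notice page
    policy_url: Optional[str] = None     # link to the AI usage policy
    suggested_app: Optional[str] = None  # approved app to use instead


def guide_access(app_name: str, is_ai_app: bool) -> AccessGuidance:
    """Return guidance for one access attempt, based on the approved list."""
    if not is_ai_app or app_name in APPROVED_AI_APPS:
        return AccessGuidance(show_notice=False)
    return AccessGuidance(
        show_notice=True,
        message=f"{app_name} is not an approved AI tool.",
        policy_url=POLICY_URL,
        suggested_app=RECOMMENDED_APP,
    )


if __name__ == "__main__":
    print(guide_access("ExampleAssistant", is_ai_app=True))
    print(guide_access("UnknownBot", is_ai_app=True))
```

Returning guidance rather than a bare allow or deny flag reflects the goal of steering users toward the policy and the approved app at the moment of access.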

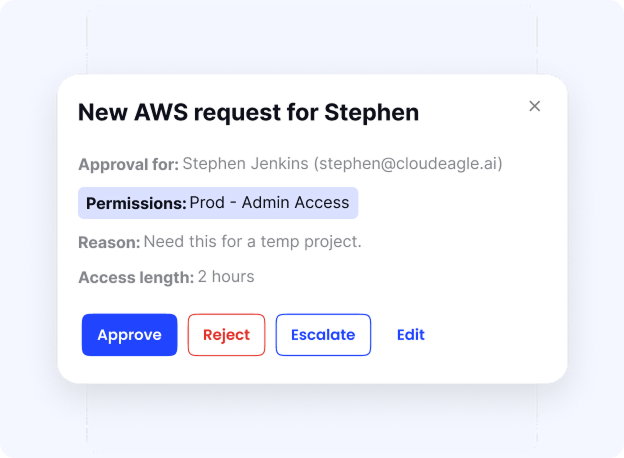

Reduce Risks Introduced by AI

- Reduce exposure from unapproved and unmanaged AI applications

- Limit risky AI access by ensuring usage aligns with approved tools and policies

- Provide the oversight needed to address AI risk before it becomes a compliance issue

Maintain Control as AI Features Quietly Appear Inside SaaS

- Stay aware as AI capabilities are enabled inside existing SaaS applications

- Understand where business data may flow through AI-powered features

- Apply consistent governance even when AI is embedded and not obvious

Optimize All Your SaaS and AI with One AI-Powered Platform

Frequently Asked Questions

AI governance ensures AI tools are used safely, responsibly, and in line with security, compliance, and risk policies.

Employees adopt AI tools faster than IT can review them, creating data, identity, and compliance risks.

AI introduces new risks around data usage, model behavior, and identity misuse that require deeper controls.

AI usage control governs how AI tools are accessed, what data they process, and who can use them.

Over-permissioned users and unmanaged identities can expose sensitive data through AI tools.

Yes. Governance enables controlled adoption instead of blocking AI outright.

It provides visibility, policy enforcement, and audit-ready evidence as regulations evolve.

AI features inside approved tools may process sensitive data without explicit visibility or approval.

It unifies AI discovery, usage control, identity risk, and governance into one operational platform.

As soon as AI tools appear in the environment. Governance is most effective when it starts early.