AI Governance Platform

Govern AI Adoption Without Slowing Innovation

Without centralized governance, organizations face growing exposure to data leakage, identity misuse, and compliance gaps.

Built-In AI Governance From Day Zero

Unified Visibility Across AI, SaaS, and Identity

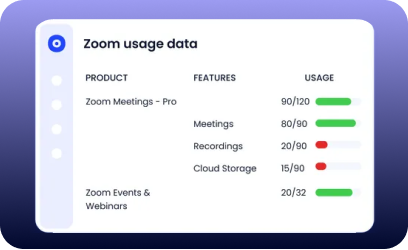

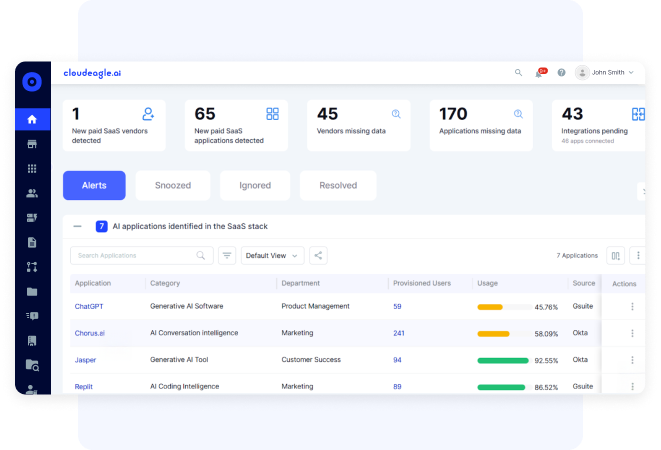

AI apps, embedded AI features, and access patterns are visible in one place, eliminating blind spots across teams and tools.

Proactive Risk Control, Not Reactive Cleanup

Risky AI usage, unsafe access patterns, and shadow adoption are flagged early, before incidents, audits, or compliance reviews force action.

Governance That Scales With AI Adoption

AI governance evolves from manual reviews to a repeatable operating model that adapts as new tools and use cases emerge.

Audit-Ready Oversight Without Friction

Govern AI Use Before It Becomes a Risk

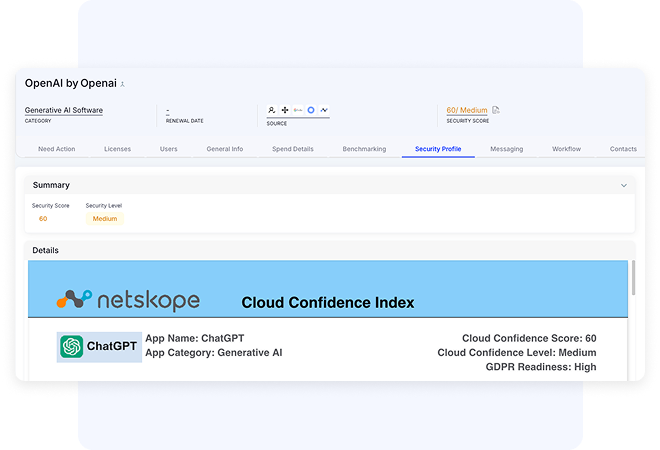

Discover Shadow AI Across the Enterprise

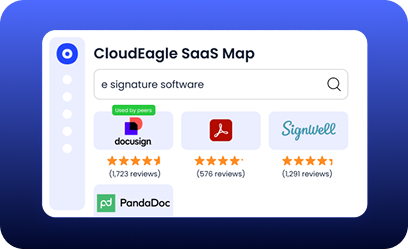

- Maintain an inventory of the AI apps in use with CloudEagle’s proprietary SaaSMap

- Gain visibility into AI usage by comparing browser plugin, Zscaler, and CrowdStrike logs

- See all AI apps in use across the enterprise in one place

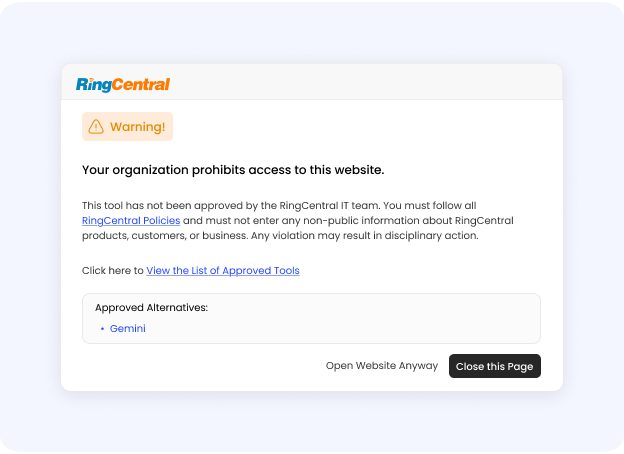

Control AI Usage Without Blocking Productivity

- Control AI usage by maintaining a list of approved AI and SaaS tools

- Prevent unsafe AI usage by displaying a flash page when unapproved AI apps are accessed

- Guide users to safe AI usage policies and to the approved AI app they should log in to

Reduce Identity Risk Introduced by AI

- Ensure AI access aligns with current roles, not historical permissions

- Limit exposure from over-privileged users interacting with AI tools

- Prevent sensitive actions from being performed through unsafe identities


Frequently Asked Questions

AI governance ensures AI tools are used safely, responsibly, and in line with security, compliance, and risk policies.

Employees adopt AI tools faster than IT can review them, creating data, identity, and compliance risks.

AI introduces new risks around data usage, model behavior, and identity misuse that require deeper controls.

AI usage control governs how AI tools are accessed, what data they process, and who can use them.

Over-permissioned users and unmanaged identities can expose sensitive data through AI tools.

Yes. Governance enables controlled adoption instead of blocking AI outright.

It provides visibility, policy enforcement, and audit-ready evidence as regulations evolve.

AI features inside approved tools may process sensitive data without explicit visibility or approval.

It unifies AI discovery, usage control, identity risk, and governance into one operational platform.

As soon as AI tools appear in the environment. Governance is most effective when it starts early.