HIPAA Compliance Checklist for 2025

AI tools have exploded across every corner of the enterprise, turbocharging productivity and innovation, but they’re also slipping past IT’s watchful eyes.

This “Shadow AI” phenomenon is no longer a fringe issue: over half of employees use AI tools without IT approval, and nearly 40% admit to sharing sensitive data with unsanctioned platforms.

Why? Because AI is fast, easy, and fills urgent gaps that official systems can’t keep up with. But this convenience comes with a hidden cost. Sensitive data can leak in seconds, compliance risks multiply, and unchecked AI outputs may introduce costly errors or bias.

The real challenge: a single unauthorized upload of proprietary or customer data can lead to severe regulatory penalties and damage your brand’s reputation.

To address this challenge, enterprises must go beyond simply blocking AI websites or issuing warnings. They need AI governance strategies that balance innovation with control, starting with visibility powered by advanced AI detection tools.

Let’s explore how enterprises can put such strategies into practice.

TL;DR

- Advanced AI detection tools are essential for enterprises to detect AI usage across their environments, including unauthorized or shadow AI applications.

- Free AI detection tools can provide initial visibility and help organizations identify risky AI tool adoption before they invest in comprehensive governance platforms.

- Sensitive data is being shared with unsanctioned tools, increasing the risk of data leaks and compliance violations.

- Traditional DLP and SaaS security tools are not equipped to detect AI-specific risks.

- Enterprises must embrace solutions like CloudEagle.ai that help discover hidden AI tools, enforce policies, and centralize SaaS governance.

What is Shadow AI?

Shadow AI refers to employees using AI tools without approval or oversight from the IT or security team. These tools are often used to speed up tasks such as writing emails, generating reports, or coding. While they can improve productivity, they also introduce significant risks.

Since shadow AI tools are not officially managed by the company, they can lead to data leaks, privacy violations, or compliance issues. For instance, if an employee enters confidential business data into an external AI tool, that data might be stored or reused by the provider, often without the organization’s knowledge.

Shadow AI is a subset of “shadow IT,” but specifically focuses on unauthorized use of AI tools. To stay secure, companies must implement clear AI usage policies, enforce access control, and maintain oversight of AI tool adoption. It's about balancing innovation with responsible governance.

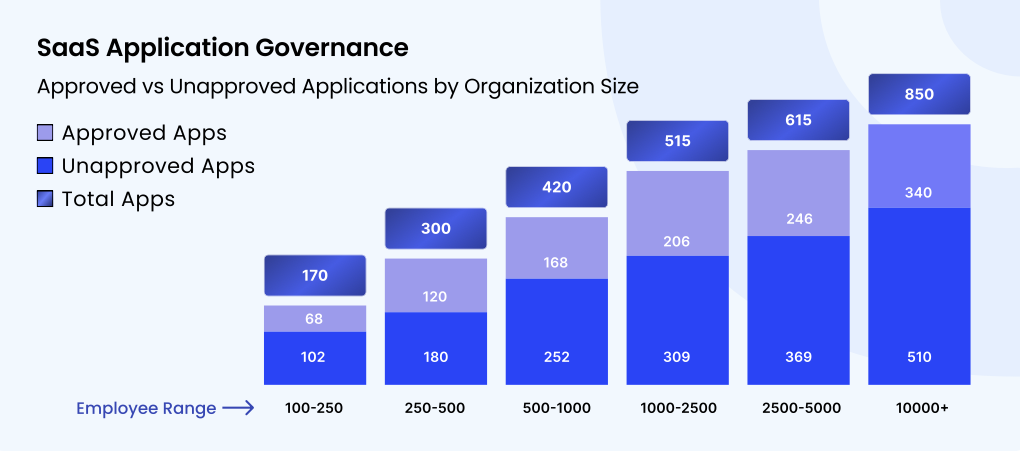

According to CloudEagle.ai’s IGA Report, 60% of AI and SaaS applications operate outside IT visibility. This makes it critical for IT teams to regain control before these unmanaged tools lead to larger security or compliance risks.

Why Are AI Tools Slipping Past IT Controls?

According to a recent ManageEngine report, 60% of employees are using unapproved AI tools more than they did last year, while 63% of IT leaders cite data leakage as the top risk. The following are the key reasons why AI tools are slipping past IT controls:

Proliferation of Freemium AI Tools

Freemium and low-cost AI tools flood the market, making it easy for employees to adopt them without IT approval. IDC’s Global Employee Survey found that 39% of EMEA employees use free AI tools at work, with an additional 17% using privately paid AI tools.

This democratization accelerates innovation but also fuels Shadow AI growth, as employees bypass official channels for speed and convenience.

Lack of Centralized Visibility

IT teams struggle to gain visibility into which AI tools employees are using. Komprise’s survey revealed that nearly half of IT leaders are “extremely worried” about security and compliance due to shadow AI, yet many lack the tools to monitor AI usage effectively.

Without centralized oversight, unauthorized AI use becomes a blind spot, increasing the risk of data leaks and regulatory violations.

Bring Your Own AI (BYOAI)

Just as BYOD (Bring Your Own Device) transformed enterprise IT, BYOAI is emerging as a new challenge. Employees bring their preferred AI tools, often consumer-grade versions like personal ChatGPT accounts, into the workplace.

Research shows that over 70% of ChatGPT accounts used at work are personal, lacking enterprise-grade security controls. This exposes sensitive data to external platforms without proper safeguards.

Siloed Procurement & Shadow Purchasing

Departments often purchase AI tools independently, bypassing centralized IT procurement. This siloed approach leads to inconsistent security policies and fragmented management. Without coordinated governance, enterprises face sprawling AI tool landscapes that are difficult to secure.

“Shadow AI is evolving from rogue spreadsheets to unauthorized AI accounts, turbocharged by easy access and lack of oversight.” - Brandon Nash, Business Development Manager at Fuel iX

Gaps in Traditional DLP or SaaS Security Policies

Many existing Data Loss Prevention (DLP) and SaaS security policies were not designed to address AI-specific risks. ManageEngine’s report highlights that traditional controls often fail to detect AI-related data exposure, leaving enterprises vulnerable.

“Shadow AI represents both the greatest governance risk and the biggest strategic opportunity in the enterprise.” - Ramprakash Ramamoorthy, Director of AI Research at ManageEngine

As AI usage grows, security frameworks must evolve to include AI governance, monitoring, and user education.

Key Risks of Unmonitored AI Tool Usage

Without proper oversight, enterprises expose themselves to data breaches, compliance failures, soaring costs, and emerging security threats.

Data Leakage & IP Exposure

AI tools often process vast amounts of sensitive information, including proprietary data and intellectual property (IP). According to Metomic’s 2025 AI security report, 73% of enterprises experienced AI-related breaches last year, with an average cost of $4.8 million per incident.

Financial services, healthcare, and manufacturing sectors are particularly vulnerable to attacks like prompt injections and data poisoning that can leak confidential data or corrupt AI outputs.

Shadow AI usage compounds this risk. Fortra highlights that when employees input sensitive data into unauthorized AI tools, they risk exposing personally identifiable information (PII) or proprietary code, which can be inadvertently incorporated into AI training datasets and later leaked.

Compliance Violations (GDPR, HIPAA, SOC 2, etc.)

Non-compliance with data protection regulations is a growing concern as unmonitored AI tools process regulated data. BigID’s 2025 AI Risk & Readiness Report reveals that nearly 55% of enterprises are unprepared for AI regulatory compliance, risking fines and reputational damage as new laws take effect.

Healthcare enterprises face frequent AI data leakage incidents, while financial firms endure the highest regulatory penalties, averaging $35.2 million per AI compliance failure, according to McKinsey.

Uncontrolled Cost Growth

The rapid, decentralized adoption of AI tools can lead to unexpected and uncontrolled expenses. Shadow AI and siloed procurement often mean multiple overlapping subscriptions and underutilized licenses. Without centralized oversight, enterprises risk ballooning costs that are difficult to track or justify.

As Dimitri Sirota, CEO of BigID, warns: “The rapid adoption of AI has created a critical security oversight for many enterprises, exposing them to unprecedented risks and hidden costs.”

Security Threats & Zero-Day Risks

AI introduces new attack vectors that traditional security tools are ill-equipped to handle. Darktrace reports that 74% of cybersecurity professionals consider AI-powered threats a major challenge.

Common threats include adversarial inputs that manipulate AI decisions, prompt injections that exploit AI models, and AI-powered spear phishing that scales social engineering attacks.

The “AI Security Paradox” means the very capabilities that make AI powerful—processing and synthesizing vast datasets—also create vulnerabilities that traditional frameworks can’t fully address.

Model Training Risks

Unsupervised or poorly governed AI training can introduce bias, errors, or data poisoning that degrade model accuracy and trustworthiness. Attackers may corrupt training datasets or insert backdoors, causing AI systems to behave unpredictably or maliciously (BlackFog, 2025).

“Agentic AI systems with autonomy and extensive data access will increasingly attract malicious actors seeking to exploit or manipulate them.” - Etay Maor, Chief Security Strategist at Cato Networks.

How to Regain Control Over Shadow AI

IT and security teams must combine visibility, policy, governance, and employee empowerment to turn Shadow AI from a risk into a managed asset. Here are six key steps, supported by expert insights and industry best practices.

Discover and Map AI Tool Usage

You can't secure what you can't see. The first step is to uncover all AI tools and services being used across the organization, both approved and rogue.

AI discovery tools can scan networks, code repositories, browser extensions, and cloud environments to identify:

- Embedded AI components (e.g., OpenAI APIs in internal apps),

- Freemium AI tools adopted by employees (like ChatGPT, Notion AI, etc.),

- Third-party generative AI tools connected to internal workflows.

Mend AI, for instance, offers a detection engine that scans codebases and APIs for AI/ML integrations. These insights help security teams build an accurate map of AI activity.
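To make the codebase-scanning idea concrete, here is a minimal Python sketch: it walks a repository and flags files that import common AI SDKs or reference well-known AI API hosts. The signature list (`AI_SIGNATURES`), the scanned file extensions, and the function names are illustrative assumptions, not how Mend AI or any other product works; a real scanner would also inspect dependency manifests, browser extensions, and network logs.

```python
import re
from pathlib import Path

# Illustrative signatures for embedded AI components: SDK imports or API hosts.
# This list is an assumption; extend it for the providers relevant to you.
AI_SIGNATURES = {
    "openai": re.compile(r"api\.openai\.com|^\s*(?:import|from)\s+openai\b", re.MULTILINE),
    "anthropic": re.compile(r"api\.anthropic\.com|^\s*(?:import|from)\s+anthropic\b", re.MULTILINE),
    "huggingface": re.compile(r"huggingface\.co|^\s*(?:import|from)\s+transformers\b", re.MULTILINE),
}

# Only these source and config file types are scanned in this sketch.
SCANNED_SUFFIXES = {".py", ".js", ".ts", ".java", ".go", ".yaml", ".yml", ".json"}


def scan_text(text: str) -> set[str]:
    """Return the providers whose signatures appear anywhere in `text`."""
    return {name for name, pattern in AI_SIGNATURES.items() if pattern.search(text)}


def scan_repository(root: str) -> dict[str, set[str]]:
    """Map each matching file (path relative to `root`) to the providers it references."""
    base = Path(root)
    findings: dict[str, set[str]] = {}
    for path in base.rglob("*"):
        if not path.is_file() or path.suffix not in SCANNED_SUFFIXES:
            continue
        try:
            text = path.read_text(encoding="utf-8", errors="ignore")
        except OSError:
            continue  # unreadable file: skip it rather than abort the whole scan
        hits = scan_text(text)
        if hits:
            findings[path.relative_to(base).as_posix()] = hits
    return findings
```

Calling `scan_repository("path/to/repo")` returns a dictionary such as `{"app.py": {"openai"}}`, which can feed the AI activity map described above.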

“AI tools boost productivity and are becoming like digital workers, but need oversight to prevent security and rule-breaking issues. The main challenge is making sure employees protect sensitive information when using AI, with proper controls and training in place. With the right safeguards, companies can get AI benefits while keeping data safe and following regulations.” — Paul Heard, Veteran CIO & Technology Advisor.

Routine AI audits—similar to software asset management—can help monitor changes, detect new usage, and maintain compliance.
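A routine audit can be as simple as comparing two inventory snapshots. The sketch below assumes you already have sets of tool names from a previous and a current discovery run, plus an approved list; `compare_inventories` and `AuditDelta` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditDelta:
    added: frozenset             # tools observed now but absent from the previous audit
    removed: frozenset           # tools from the previous audit that are no longer observed
    unsanctioned_new: frozenset  # newly observed tools that are not on the approved list


def _normalize(names: set[str]) -> set[str]:
    """Compare tool names case-insensitively and ignore stray whitespace."""
    return {n.strip().lower() for n in names}


def compare_inventories(previous: set[str], current: set[str], approved: set[str]) -> AuditDelta:
    prev, curr, ok = _normalize(previous), _normalize(current), _normalize(approved)
    added = curr - prev
    return AuditDelta(
        added=frozenset(added),
        removed=frozenset(prev - curr),
        unsanctioned_new=frozenset(added - ok),
    )
```

The `unsanctioned_new` set is the natural starting point for follow-up, since it lists tools that appeared since the last audit without approval.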

Create an AI Usage Policy

Policy is your blueprint for control. A clear, well-communicated AI usage policy is essential for setting boundaries and expectations.

Your policy should include:

- Approved AI tools and their intended use cases,

- Data classification rules: What type of data (PII, PHI, financial, proprietary) can or cannot be used with AI tools?

- Approval workflows for requesting access to new tools or features,

- Third-party AI risk disclosures: Educating employees about where their data goes when using external AI tools.
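One way to make such a policy enforceable is to express it as data that tooling can check. In this minimal sketch, the tool names, the data classes, and which classes each tool may receive are assumptions chosen for illustration; tools that are not yet approved are routed to the approval workflow rather than silently allowed.

```python
from enum import Enum


class DataClass(Enum):
    PUBLIC = "public"
    INTERNAL = "internal"
    PII = "pii"
    PHI = "phi"
    FINANCIAL = "financial"
    PROPRIETARY = "proprietary"


# Hypothetical policy: each approved tool lists the data classes it may receive.
AI_USAGE_POLICY: dict[str, set[DataClass]] = {
    "chatgpt enterprise": {DataClass.PUBLIC, DataClass.INTERNAL},
    "microsoft copilot": {DataClass.PUBLIC, DataClass.INTERNAL, DataClass.PROPRIETARY},
}


def check_usage(tool: str, data_class: DataClass) -> str:
    """Return "allow", "deny", or "request approval" for a tool/data combination."""
    allowed = AI_USAGE_POLICY.get(tool.strip().lower())
    if allowed is None:
        return "request approval"  # tool not on the approved list: start the approval workflow
    return "allow" if data_class in allowed else "deny"
```

Keeping the policy in a machine-readable form like this lets the same rules drive onboarding material, approval requests, and automated checks.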

Make sure the policy is shared during employee onboarding and regularly reinforced through training and awareness campaigns.

Update DLP and Security Policies for AI

AI-specific threats—like data leakage through prompts or external model training—require enhanced monitoring.

Traditional Data Loss Prevention (DLP) systems should be updated to:

- Detect outbound data sent to known AI endpoints (e.g., OpenAI, Anthropic, Hugging Face),

- Flag sensitive data embedded in prompts,

- Block AI model usage on unsecured or unsanctioned web platforms.

You can also implement browser-level controls or integrate AI-specific detection into CASBs (Cloud Access Security Brokers) to prevent sensitive data from leaving your secure environment.
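The DLP checks listed above can be prototyped in a few lines. This Python sketch assumes requests are inspected at a proxy or browser extension that sees the destination URL and the prompt text; the host list, the regular expressions, and the block/log/pass decisions are illustrative assumptions, and production DLP engines use far more robust classifiers. Candidate card numbers are checked with the Luhn algorithm to cut false positives.

```python
import re
from urllib.parse import urlparse

# Illustrative, incomplete list of AI API hosts (assumption; maintain your own).
AI_ENDPOINT_HOSTS = {"api.openai.com", "api.anthropic.com", "huggingface.co"}

# Deliberately simple detectors for sensitive data in prompts.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,19}\b"),
}


def luhn_valid(number: str) -> bool:
    """Luhn checksum over the digits in `number`, ignoring spaces and dashes."""
    digits = [int(c) for c in number if c.isdigit()]
    checksum = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        checksum += d
    return len(digits) >= 13 and checksum % 10 == 0


def is_ai_endpoint(url: str) -> bool:
    host = (urlparse(url).hostname or "").lower()
    return any(host == h or host.endswith("." + h) for h in AI_ENDPOINT_HOSTS)


def find_sensitive(prompt: str) -> set[str]:
    """Return the labels of sensitive-data patterns found in the prompt."""
    found = set()
    for label, pattern in PATTERNS.items():
        for match in pattern.finditer(prompt):
            if label == "card_number" and not luhn_valid(match.group()):
                continue  # digit run that fails Luhn is probably not a card number
            found.add(label)
            break
    return found


def inspect_request(url: str, prompt: str) -> str:
    """Decide what to do with an outbound request: "pass", "log", or "block"."""
    if not is_ai_endpoint(url):
        return "pass"
    return "block" if find_sensitive(prompt) else "log"
```

Logging rather than blocking clean prompts to AI endpoints keeps an audit trail of AI usage without interrupting legitimate work.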

Build an AI Governance Committee

AI is not just a technology decision; it’s an enterprise-wide concern. That’s why AI governance should be owned by a committee of stakeholders, including:

- IT and cybersecurity leaders,

- Legal and compliance officers,

- HR and data privacy teams,

- Line-of-business managers.

This team can:

- Continuously review and update AI tool inventories,

- Define AI risk categories and mitigation plans,

- Track evolving regulations and frameworks like the EU AI Act or the NIST AI Risk Management Framework (AI RMF),

- Launch employee education initiatives and training modules.

Having a shared governance model ensures balanced, risk-aware decision-making and enables accountability across departments.

Offer Secure, Sanctioned AI Alternatives

Shadow AI exists largely because employees want faster, smarter ways to work, and sanctioned tools often fall short.

Instead of blocking all AI usage, enable it safely:

- Provide internal access to enterprise versions of tools like Microsoft Copilot, Google Workspace AI, or ChatGPT Enterprise.

- Embed generative AI into existing apps via APIs, securely managed and audited.

- Offer a catalog of pre-approved AI tools through your ITSM or SaaS management portal.

According to Forbes, companies that offer approved AI platforms see a 45% drop in unauthorized AI use and a 33% increase in employee satisfaction.

“When IT enables secure, user-friendly AI solutions, employees are far less likely to go rogue.” — Forbes Tech Council, 2025

Educate and Empower Employees on Responsible AI Use

Empowered, well-informed teams not only reduce the chances of data breaches and compliance violations but also foster a culture of ethical AI adoption and innovation.

Run Awareness Campaigns

Regular awareness campaigns help employees understand the importance of responsible AI use, the risks of Shadow AI, and their role in safeguarding sensitive data.

According to Wald.ai, ongoing AI safety audits and risk assessments combined with clear communication significantly enhance trust and ethical AI adoption in enterprises.

McKinsey’s 2025 workplace report also highlights that 71% of employees trust their employers to deploy AI ethically, making leadership-driven awareness campaigns a powerful tool for engagement.

Provide Clear Examples

Providing clear examples of responsible and irresponsible AI use is crucial for helping employees understand the real impact of their actions. For instance, biased AI hiring tools can unintentionally reinforce discrimination, while improper data sharing with AI platforms risks costly compliance violations under regulations like GDPR and HIPAA.

The Facebook-Cambridge Analytica scandal shows how costly improper data sharing can be: personal data collected under false pretenses was used to influence political outcomes, resulting in a $5 billion FTC fine for Facebook.

Studies show that transparency in AI decision-making significantly boosts user trust and reduces legal risks. As IBM highlights, explainability is a cornerstone of ethical AI, helping organizations avoid “black box” pitfalls.

Promote a Culture of Transparency

Training employees on ethical and compliant AI usage is not just a best practice—it’s a legal and moral imperative in today’s AI-driven world. Encouraging open dialogue about AI tools and their use fosters a transparent culture where employees feel comfortable reporting concerns or uncertainties. This culture supports ongoing learning and ethical decision-making.

According to TechRadar, integrating ethical AI practices and accountability into company values helps prevent misuse and builds long-term trust with customers and regulators.

Training should also include role-specific guidance, ensuring that employees handling sensitive data or AI-driven decisions understand their unique responsibilities.

How Can CloudEagle.ai Help You Control Shadow AI?

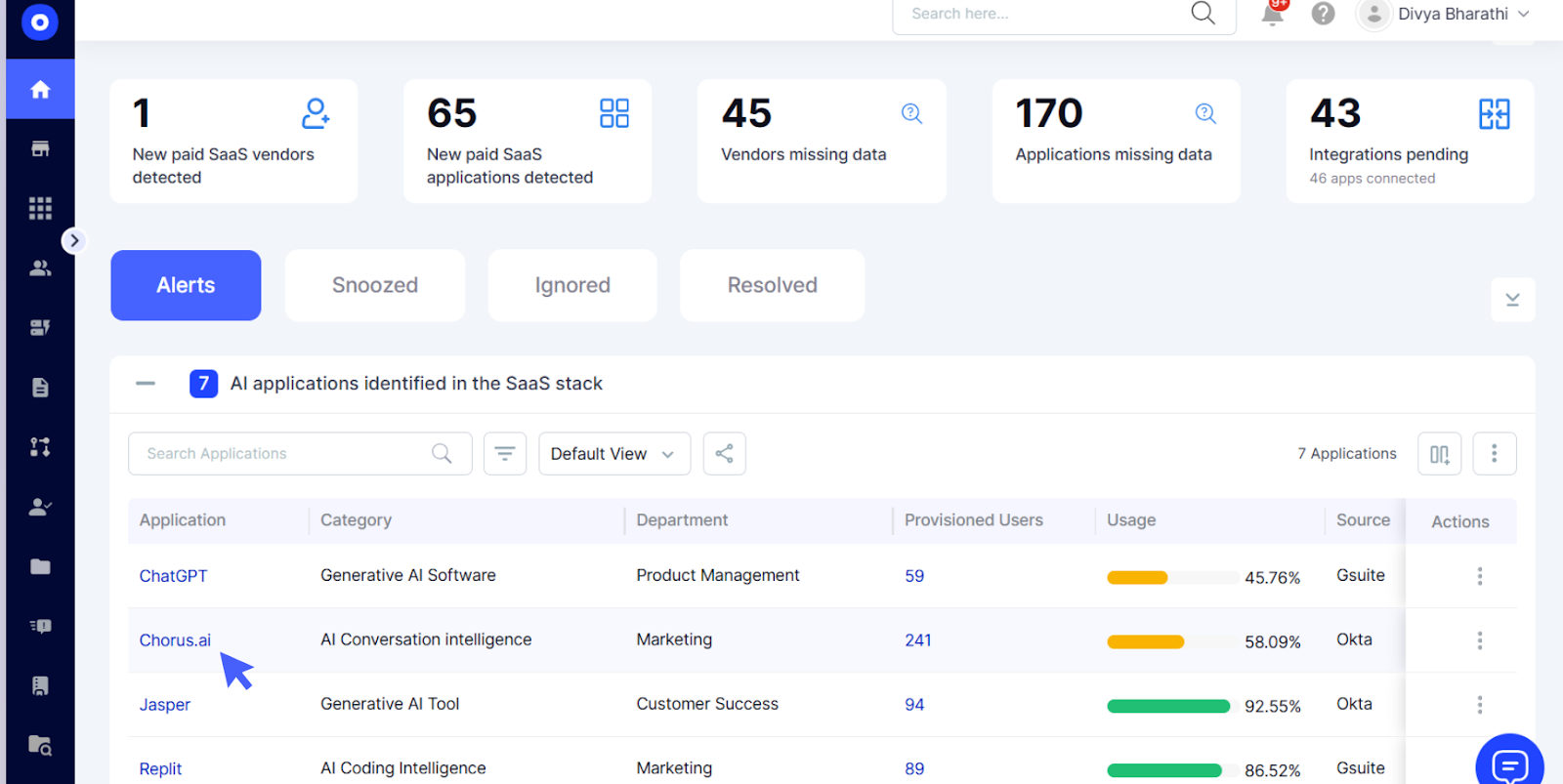

CloudEagle.ai enables enterprises to regain control over Shadow AI by providing comprehensive visibility, risk assessment, and governance across all AI and SaaS tools—helping manage hidden usage, optimize spend, and enforce security policies effectively.

Comprehensive App Discovery: CloudEagle.ai continuously scans your network, SaaS environment, and endpoints to detect all AI tools in use—both approved and unauthorized, including hidden browser extensions and free AI services.

It leverages multiple data sources like network traffic, Single Sign-On (SSO) logs, and expense monitoring to give you a full picture of your AI landscape and reduce hidden risks.

Automated Risk Assessment: The platform automatically evaluates discovered AI tools for security posture and compliance risks, validating vendor certifications such as SOC 2 and reviewing data protection agreements. It scores tools by risk level, helping teams prioritize remediation and make informed decisions to protect data and systems.
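Risk scoring of this kind is often a weighted sum over vendor attributes. The sketch below is hypothetical: the attributes, weights, and tier thresholds are assumptions for illustration and do not reflect CloudEagle.ai’s actual scoring model.

```python
from dataclasses import dataclass


@dataclass
class VendorProfile:
    name: str
    soc2_certified: bool
    has_dpa: bool                  # signed data protection agreement on file
    trains_on_customer_data: bool  # vendor may use submitted data for model training
    sso_supported: bool


# Hypothetical weights: each missing control adds risk points (higher = riskier).
WEIGHTS = {"no_soc2": 30, "no_dpa": 30, "trains_on_data": 25, "no_sso": 15}


def risk_score(v: VendorProfile) -> int:
    score = 0
    if not v.soc2_certified:
        score += WEIGHTS["no_soc2"]
    if not v.has_dpa:
        score += WEIGHTS["no_dpa"]
    if v.trains_on_customer_data:
        score += WEIGHTS["trains_on_data"]
    if not v.sso_supported:
        score += WEIGHTS["no_sso"]
    return score


def risk_tier(score: int) -> str:
    if score >= 60:
        return "high"
    if score >= 30:
        return "medium"
    return "low"


def prioritize(vendors: list[VendorProfile]) -> list[tuple[str, int, str]]:
    """Order vendors for remediation, riskiest first."""
    scored = [(v.name, risk_score(v), risk_tier(risk_score(v))) for v in vendors]
    return sorted(scored, key=lambda row: row[1], reverse=True)
```

The weights encode a judgment call; adjusting them is how a governance team expresses which missing controls it considers most serious.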

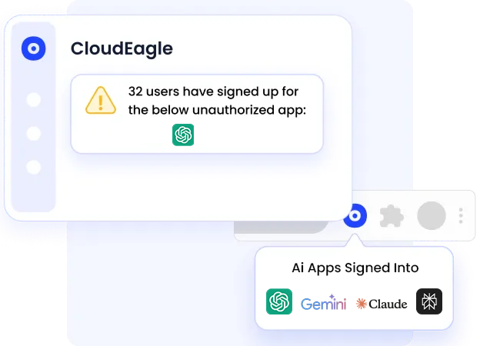

Access Control via SSO and RBAC: Integrating with identity providers like Okta, Azure AD, and Google Workspace, CloudEagle.ai enforces Single Sign-On and Role-Based Access Controls to ensure employees only access vetted AI tools, preventing unauthorized signups with corporate credentials.
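Conceptually, SSO plus RBAC reduces to a check like the one below. This is a hypothetical sketch, not CloudEagle.ai’s or any identity provider’s API: `ROLE_GRANTS` stands in for group memberships synced from an identity provider such as Okta or Azure AD, and `via_sso` stands in for whether the login came through the corporate identity provider.

```python
# Hypothetical role-to-tool grants; in practice these come from IdP group sync.
ROLE_GRANTS: dict[str, set[str]] = {
    "engineering": {"github copilot", "chatgpt enterprise"},
    "marketing": {"chatgpt enterprise", "canva magic studio"},
    "finance": set(),
}


def can_access(user_roles: list[str], tool: str, via_sso: bool) -> bool:
    """Allow access only through SSO, and only if one of the user's roles grants the tool."""
    if not via_sso:
        return False  # reject direct sign-ups with corporate credentials
    tool = tool.strip().lower()
    return any(tool in ROLE_GRANTS.get(role, set()) for role in user_roles)
```

Unknown roles fall back to an empty grant set, so access is denied by default.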

Continuous Monitoring and Alerts: CloudEagle.ai sets up real-time alerts for new AI app signups, suspicious usage patterns, or unauthorized browser extensions. This proactive monitoring enables IT and security teams to detect and respond to Shadow AI activities before risks escalate.
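A simplified new-signup alert can be derived from SSO login events. The `LoginEvent` record, the AI app catalog, and the alert format below are assumptions made for illustration; the sketch raises one alert the first time each user is seen on a known AI app, with higher severity for unsanctioned apps.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class LoginEvent:
    user: str
    app: str
    timestamp: datetime


# Illustrative catalog of AI apps and the subset your policy sanctions (assumptions).
KNOWN_AI_APPS = {"chatgpt", "chatgpt enterprise", "claude", "midjourney", "perplexity"}
SANCTIONED_AI_APPS = {"chatgpt enterprise"}


def new_ai_signup_alerts(events: list[LoginEvent], seen: set[tuple[str, str]]) -> list[str]:
    """Return one alert per first-seen (user, AI app) pair; `seen` is updated in place."""
    alerts = []
    for event in sorted(events, key=lambda e: e.timestamp):
        app = event.app.strip().lower()
        if app not in KNOWN_AI_APPS:
            continue  # not an AI app: outside the scope of this check
        key = (event.user, app)
        if key in seen:
            continue  # this user/app pair has already produced an alert
        seen.add(key)
        severity = "info" if app in SANCTIONED_AI_APPS else "high"
        alerts.append(f"[{severity}] {event.user} started using {event.app} at {event.timestamp.isoformat()}")
    return alerts
```

Persisting `seen` between runs keeps the alerts focused on genuinely new usage instead of repeating for every login.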

“Privilege creep happens when employees retain access they no longer need, quietly piling up over time. Without regular access reviews, these outdated permissions become a hidden threat to security and operational integrity.” — Noni Azhar, CIO, ProService Hawaii.
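A basic access review for privilege creep flags grants that have gone unused for too long. This sketch assumes you can obtain a last-used date per (user, app) grant, for example from SSO logs; the 90-day threshold and the `stale_grants` name are illustrative assumptions.

```python
from datetime import date, timedelta
from typing import Optional


def stale_grants(
    last_used: dict[tuple[str, str], Optional[date]],
    today: date,
    max_idle_days: int = 90,
) -> list[tuple[str, str]]:
    """Return (user, app) grants never used or idle longer than `max_idle_days`, sorted for review."""
    cutoff = today - timedelta(days=max_idle_days)
    return sorted(key for key, used in last_used.items() if used is None or used < cutoff)
```

The returned list can serve as the worklist for a periodic access review, where owners confirm or revoke each grant.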

Spend and Procurement Visibility: By connecting with financial tools such as NetSuite, Expensify, and corporate credit cards, CloudEagle.ai uncovers shadow spend on AI tools, helping optimize costs, prevent redundant purchases, and streamline procurement.
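The core of shadow-spend detection is matching expense lines against known AI vendors. In this hypothetical sketch, the `Expense` record, the vendor list, and the notion of "redundant" (more than one department paying for the same vendor) are assumptions for illustration, not a description of how CloudEagle.ai’s integrations with NetSuite or Expensify work.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Expense:
    department: str
    vendor: str
    amount: float  # assumed to be in a single currency


# Illustrative list of AI vendors to look for in expense data (assumption).
AI_VENDORS = {"openai", "anthropic", "midjourney", "jasper", "perplexity"}


def summarize_ai_spend(expenses: list[Expense], approved_vendors: set[str]) -> list[dict]:
    """Total AI spend per vendor, flagging unapproved (shadow) and duplicated purchases."""
    totals: dict[str, float] = defaultdict(float)
    departments: dict[str, set[str]] = defaultdict(set)
    for e in expenses:
        vendor = e.vendor.strip().lower()
        if vendor not in AI_VENDORS:
            continue  # non-AI spend is out of scope for this report
        totals[vendor] += e.amount
        departments[vendor].add(e.department)
    return [
        {
            "vendor": vendor,
            "total": round(totals[vendor], 2),
            "shadow": vendor not in approved_vendors,
            "redundant": len(departments[vendor]) > 1,  # several teams paying separately
        }
        for vendor in sorted(totals)
    ]
```

Vendors flagged as both shadow and redundant are good candidates for consolidation under a single approved contract.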

Self-Service App Catalog: CloudEagle.ai offers a centralized, user-friendly catalog where employees can request and access approved AI and SaaS tools securely. This reduces the temptation for shadow adoption while improving operational efficiency and governance.

Automated Identity Governance: CloudEagle.ai automates identity governance, ensuring only the right people can use certain apps. This reduces errors and protects against insider threats. It also helps prevent Shadow AI by stopping employees from using unapproved AI tools, like custom ChatGPT, without permission. This keeps your organization safe and compliant.

Custom Alerts and Reporting: The platform provides detailed reports on AI and SaaS usage, vendor spend, and contract management, enabling continuous optimization and compliance assurance.

By combining these capabilities, CloudEagle.ai empowers organizations to discover, assess, monitor, and govern Shadow AI effectively, turning hidden risks into manageable assets while enabling innovation with confidence.

Conclusion

The rapid proliferation of AI tools has fueled employee productivity and transformed workflows, but it has also introduced a covert threat to IT teams worldwide. Shadow AI is accelerating beyond the reach of traditional IT controls, exposing organizations to significant risks, including data leaks, regulatory non-compliance, and costly security breaches.

Blocking AI websites or issuing warnings is no longer enough. Enterprises must adopt a proactive, comprehensive approach combining visibility, governance, employee education, and secure alternatives to regain control.

As Nidhi Jain, CEO and Founder of CloudEagle.ai, puts it:

“The communication aspect is equally important. Leadership needs to be transparent about how roles will evolve, not disappear. When security professionals understand that AI will handle routine tasks so they can focus on more strategic work, resistance often transforms into enthusiasm.”

The enterprises that succeed will be those that balance innovation with robust governance, empowering teams to harness AI’s potential while safeguarding sensitive data and ensuring compliance.

Ready to take control of Shadow AI in your enterprise?

Schedule a demo with CloudEagle.ai and learn how you can implement effective AI governance strategies that protect your data, reduce risks, and unlock AI’s full value.

FAQs

1. What are AI tools?

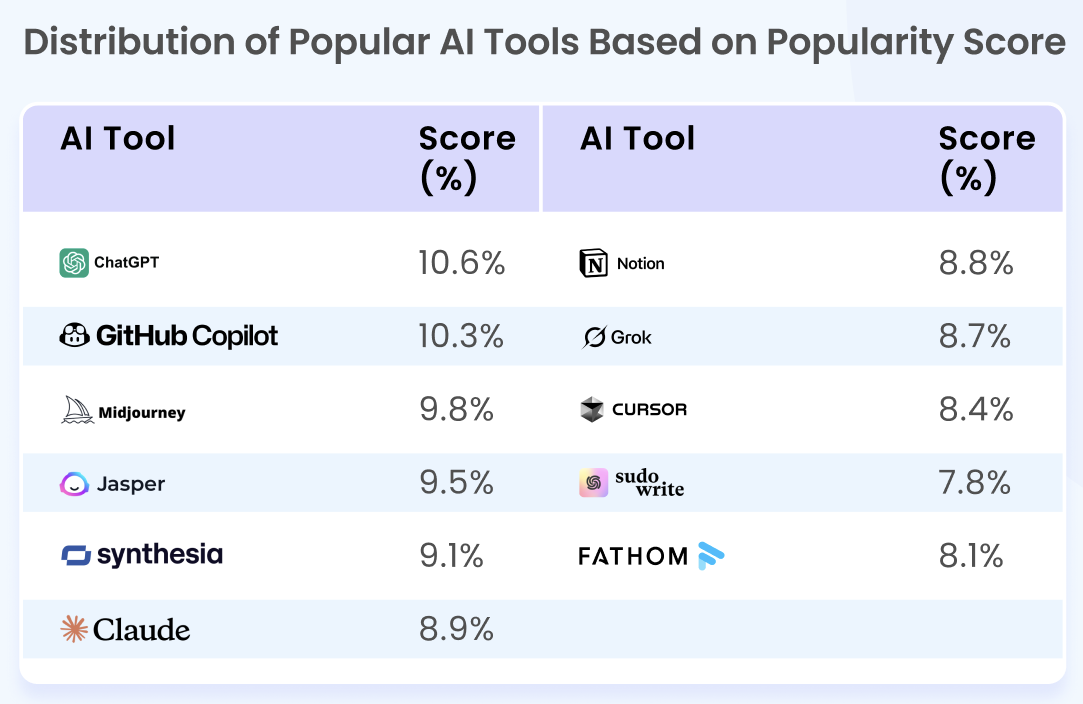

AI tools are software applications that use artificial intelligence to help with tasks such as writing, designing, coding, analyzing data, and automating workflows. Popular categories and examples include:

- AI Assistants: ChatGPT, Gemini, Claude, Grok

- Image Generation: Midjourney, DALL·E 3, Ideogram

- Video Generation: Synthesia, Google Veo, OpusClip

- Writing Tools: Rytr, Sudowrite

- Automation: Zapier, n8n

- Meeting Assistants: Fathom, Nyota

- Design: Canva Magic Studio, Looka

- Coding Tools: GitHub Copilot, Cursor, Tabnine

- Search Engines: Perplexity, Google AI Mode

- Voice Generation: ElevenLabs, Murf

2. What are the top 10 generative AI tools?

Here are ten leading generative AI tools in 2025:

- ChatGPT – Conversational AI and text generation

- Gemini – Google’s multimodal AI assistant

- Claude – Advanced text and reasoning AI

- Grok – Conversational AI on X (Twitter)

- Midjourney – AI image generation

- DALL·E 3 – AI image creation by OpenAI

- Synthesia – AI video generation

- OpusClip – Video content creation

- Jasper – AI for marketing content

- GitHub Copilot – AI code completion and generation

3. What are the 4 types of AI tools?

AI tools can be grouped into four main types based on their functionality:

- Analytics Tools: Analyze data and provide insights (e.g., Tableau AI, Deep Research)

- Customer Service & Chatbots: Automate support and communication (e.g., ChatGPT, Gemini)

- Marketing Automation & Personalization: Create and deliver targeted content (e.g., Jasper, AdCreative)

- Productivity & Workflow Automation: Streamline tasks and processes (e.g., Zapier, n8n)

4. What are the 7 main types of AI?

The seven main types of AI, based on their capabilities and development, are:

- Reactive Machines: Respond to current input only (e.g., basic chess engines)

- Limited Memory AI: Learn from historical data (e.g., self-driving cars)

- Theory of Mind AI: Understand emotions and intentions (still in research)

- Self-Aware AI: Possess consciousness (theoretical, not yet achieved)

- Narrow AI (Weak AI): Perform specific tasks (e.g., Siri, Alexa)

- Artificial General Intelligence (AGI): Human-like intelligence (not yet realized)

- Artificial Superintelligence (ASI): Surpasses human intelligence (theoretical)

5. What is Google AI called?

Google’s main AI is called Gemini. It is a multimodal AI assistant designed for a range of tasks, including text, image, and code generation. Google also refers to its broader AI division as Google AI.
