HIPAA Compliance Checklist for 2025

AI is moving faster than most companies can control. Agentic AI systems are now making decisions, triggering workflows, and accessing business data on their own, and many enterprises have no idea what their AI is doing behind the scenes.

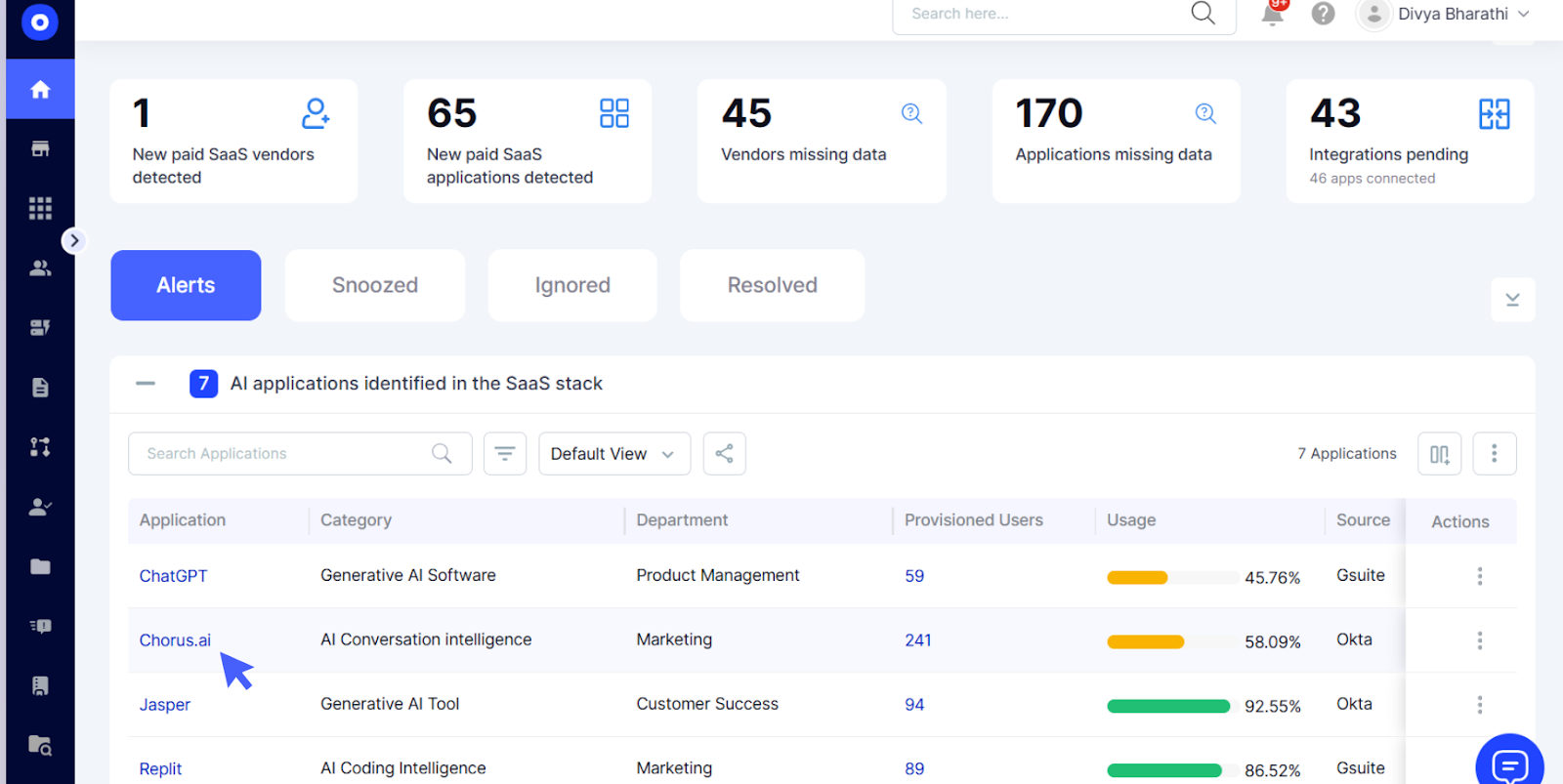

According to CloudEagle.ai’s latest IGA report, 60% of AI tools used inside enterprises operate outside IT’s visibility or control. This explosion of shadow AI is occurring simultaneously with the continued rise in adoption: over 80% of enterprises utilize AI today, yet fewer than 25% have a formal AI governance framework in place.

Let’s explore what AI governance frameworks are, why they matter, and how to make AI safe, compliant, and fully under control.

TL;DR

- An AI governance framework is a structured system of policies, controls, processes, and oversight mechanisms ensuring the safe, ethical, and compliant use of AI.

- Key elements include risk management, data governance, model monitoring, access controls, transparency, and accountability.

- AI governance frameworks matter because they reduce business risk, support compliance with regulations and frameworks such as GDPR, the EU AI Act, and the NIST AI RMF, prevent misuse, and control shadow AI.

- Best practices include clear policies, lifecycle governance, enforcing least privilege access, monitoring AI usage, centralizing approvals, and automating audits.

- CloudEagle.ai helps enterprises operationalize AI governance by discovering shadow AI, centralizing access, automating reviews, enforcing policies, and providing real-time monitoring.

1. What Is an AI Governance Framework?

An AI governance framework is a structured system of policies, controls, standards, and practices that guide how AI is designed, deployed, accessed, and monitored across enterprises.

It ensures that every AI system, whether it’s a simple predictive model or an autonomous, agentic AI workflow, is used safely, ethically, transparently, and in full compliance with regulations.

A complete AI governance framework answers essential operational questions such as:

- Who can access or deploy AI tools and models?

- Which data sources are allowed for training, fine-tuning, or inference?

- How do we prevent bias, hallucinations, and unfair outcomes?

- How do we track all AI usage—including shadow AI and embedded AI inside SaaS tools?

- How do we enforce role-based access, audit trails, and least-privilege controls?

- How do we govern agentic AI systems that act independently or trigger automated workflows?

- How do we maintain model safety, reliability, and documentation over time?

In simple terms, the AI governance framework acts as the organizational “rulebook” that keeps AI predictable, trustworthy, and under control as it scales.

2. Why Do AI Governance Frameworks Matter in Modern Enterprises?

AI governance is no longer optional.

Here’s why:

A. Preventing Data Leaks and Shadow AI: Shadow AI is a growing risk. Employees often use tools like ChatGPT or Gemini without approval, which can expose sensitive data and create untracked activity. A governance framework adds visibility, sets clear rules, and keeps AI usage safe and controlled.

B. Ensuring Compliance With New AI Regulations: New regulations like the EU AI Act, existing laws such as GDPR, HIPAA, and SOX, and frameworks like the NIST AI RMF all set expectations for how AI systems handle data and make decisions. Without a proper governance framework, staying compliant is extremely difficult, and non-compliance can lead to heavy fines and legal issues.

C. Reducing Business and Ethical Risks: AI can produce biased, inaccurate, or unpredictable results. It may even make decisions that are hard to explain. Governance frameworks reduce these risks through continuous monitoring, fairness checks, documentation, and quality reviews.

D. Enforcing Secure AI Access: Unrestricted access to AI tools, especially agentic AI, creates major security threats. Over-permissioned users can expose data or trigger unauthorized actions. Governance frameworks enforce strong identity controls so only the right users can access the right AI systems.

Reflecting on her experiences, Nidhi Jain, CEO and Founder of CloudEagle.ai, shares:

“I’ve seen it happen too many times, an employee changes roles, yet months later, they still have admin access to systems they no longer need. Manual access reviews are just too slow to catch these issues in time. By the time someone notices, privilege creep has already turned into a serious security risk.”

E. Improving Transparency and Accountability: AI governance makes usage clear and trackable. It shows:

- Who used AI

- What data they accessed

- What decisions were made

- Whether outputs were safe and fair

This transparency is essential as AI becomes a core part of business operations.

3. Key Elements of an AI Governance Framework

A strong AI governance framework brings structure, safety, and accountability to every AI system used inside an organization. Whether you're deploying LLMs, embedded AI features, or agentic AI workflows, these core elements ensure AI remains safe, compliant, and aligned with enterprise standards.

a. AI Strategy & Policy Foundation

Every framework starts with clear strategy and policy guidelines. This includes deciding:

- Which AI tools are approved?

- How should employees use them?

- Where restrictions apply?

- Ethical expectations around AI use

- Rules for data handling and model development

Think of this as the “AI rulebook” that keeps everyone aligned from day one.
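Parts of this rulebook can be expressed as data that tooling checks automatically, so "approved tools" and "where restrictions apply" are not just prose. The sketch below is a minimal illustration under assumed names: the tool identifiers, the `ToolPolicy` fields, and the `check_usage` helper are hypothetical, not part of any specific product.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class ToolPolicy:
    """Hypothetical policy entry for one AI tool."""
    approved: bool
    allowed_data: frozenset = field(default_factory=frozenset)  # permitted data classes

# Hypothetical registry; real entries would come from your own policy review.
POLICY = {
    "chatgpt-enterprise": ToolPolicy(True, frozenset({"public", "internal"})),
    "internal-copilot": ToolPolicy(True, frozenset({"public", "internal", "confidential"})),
    "random-browser-plugin": ToolPolicy(False),
}

def check_usage(tool: str, data_class: str) -> tuple[bool, str]:
    """Return (allowed, reason) for using `tool` with data of class `data_class`."""
    policy = POLICY.get(tool)
    if policy is None:
        return False, f"{tool} is not in the registry (possible shadow AI)"
    if not policy.approved:
        return False, f"{tool} is not approved"
    if data_class not in policy.allowed_data:
        return False, f"{data_class} data is not allowed in {tool}"
    return True, "allowed"

print(check_usage("chatgpt-enterprise", "internal"))      # (True, 'allowed')
print(check_usage("chatgpt-enterprise", "confidential"))  # (False, 'confidential data is not allowed in chatgpt-enterprise')
```

Keeping the policy in one reviewable structure lets the same rules drive approvals, access requests, and monitoring.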

b. AI Risk Assessment

Every AI system requires a structured risk assessment. This means evaluating risks such as:

- Operational or performance risks

- Data security issues

- Model bias or fairness concerns

- Accuracy and quality problems

- Compliance gaps

- Model drift over time

For agentic AI systems, risk assessment also includes analyzing the level of autonomy and the potential impact of unintended actions.
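One common way to structure this is a likelihood × impact score per risk category, with autonomy raising the score for agentic systems. The sketch below is illustrative only: the 1 to 5 scales, the autonomy multiplier, and the tier cut-offs are assumptions to calibrate against your own risk appetite.

```python
from enum import IntEnum

class Autonomy(IntEnum):
    """Assumed three-level scale for how independently a system acts."""
    SUGGESTS = 1          # a human makes every decision
    ACTS_WITH_REVIEW = 2  # acts only after human approval
    ACTS_ALONE = 3        # acts without human approval (agentic)

def risk_score(likelihood: int, impact: int, autonomy: Autonomy) -> int:
    """Likelihood and impact on a 1-5 scale, scaled up by autonomy (max 75)."""
    if not (1 <= likelihood <= 5 and 1 <= impact <= 5):
        raise ValueError("likelihood and impact must be between 1 and 5")
    return likelihood * impact * int(autonomy)

def risk_tier(score: int) -> str:
    """Map a score to a review tier; the cut-offs are illustrative."""
    if score >= 40:
        return "high"
    if score >= 15:
        return "medium"
    return "low"

# One hypothetical agentic system, scored per risk category.
assessments = {
    "data_security": risk_score(3, 5, Autonomy.ACTS_ALONE),  # 45
    "model_bias": risk_score(2, 4, Autonomy.ACTS_ALONE),     # 24
    "model_drift": risk_score(3, 2, Autonomy.ACTS_ALONE),    # 18
}
overall = max(assessments.values())
print(overall, risk_tier(overall))  # 45 high
```

Taking the maximum rather than the average keeps one severe risk from being diluted by several minor ones.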

c. Data Governance & Quality Controls

Data is the fuel for every AI system, so governance here is critical. A strong AI data governance framework includes:

- Controlled data access

- Full data lineage tracking

- Detailed documentation

- Validation and quality checks

- Encryption of sensitive information

- Data minimization and redaction policies

This ensures AI models only use secure, accurate, and properly governed data.
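Redaction and data minimization can run before text ever reaches a model. The sketch below masks a few identifier formats with regular expressions; the patterns are deliberately simple assumptions that will miss many real-world formats, so treat it as an illustration of where the control sits rather than a complete PII detector.

```python
import re

# Simplified, assumed patterns; real PII detection needs far broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13-16 digits, optional separators
}

def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches with [LABEL] placeholders; return the text and match counts."""
    counts = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts

prompt = "Summarize the claim from jane.doe@example.com, SSN 123-45-6789."
clean, found = redact(prompt)
print(clean)  # Summarize the claim from [EMAIL], SSN [US_SSN].
print(found)  # {'EMAIL': 1, 'US_SSN': 1}
```

The returned counts can feed monitoring, so repeated attempts to paste sensitive data into prompts become visible.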

d. Model Governance & Lifecycle Oversight

Model governance covers every stage of a model’s life, including:

- Development and testing

- Validation and approval

- Deployment

- Ongoing monitoring

- Version control and documentation

- Retiring outdated or unsafe models

This keeps models accurate, compliant, and safe long after they go live.
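Lifecycle oversight is easier to enforce when stages are explicit states with allowed transitions, so a model cannot, for example, jump from development straight to deployment. The sketch below reuses the stage names from the list above; the transition table and the `ModelRecord` class are assumptions for illustration.

```python
from enum import Enum

class Stage(Enum):
    DEVELOPMENT = "development"
    VALIDATION = "validation"
    APPROVED = "approved"
    DEPLOYED = "deployed"
    MONITORING = "monitoring"
    RETIRED = "retired"

# Assumed transition table: each stage lists the stages it may move to.
ALLOWED = {
    Stage.DEVELOPMENT: {Stage.VALIDATION},
    Stage.VALIDATION: {Stage.APPROVED, Stage.DEVELOPMENT},  # failed validation goes back
    Stage.APPROVED: {Stage.DEPLOYED},
    Stage.DEPLOYED: {Stage.MONITORING, Stage.RETIRED},
    Stage.MONITORING: {Stage.DEVELOPMENT, Stage.RETIRED},   # retrain or retire on drift
    Stage.RETIRED: set(),
}

class ModelRecord:
    """Hypothetical registry entry that only moves along allowed transitions."""

    def __init__(self, name: str, version: str):
        self.name, self.version = name, version
        self.stage = Stage.DEVELOPMENT
        self.history = [Stage.DEVELOPMENT]  # documentation trail for audits

    def advance(self, target: Stage) -> None:
        if target not in ALLOWED[self.stage]:
            raise ValueError(f"{self.stage.value} -> {target.value} is not allowed")
        self.stage = target
        self.history.append(target)

model = ModelRecord("churn-predictor", "1.2.0")
for step in (Stage.VALIDATION, Stage.APPROVED, Stage.DEPLOYED):
    model.advance(step)
print([s.value for s in model.history])
# ['development', 'validation', 'approved', 'deployed']
```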

e. Access Governance & Identity Controls

AI systems must be protected with strict access controls, including:

- Least privilege access

- Role-Based Access Control (RBAC)

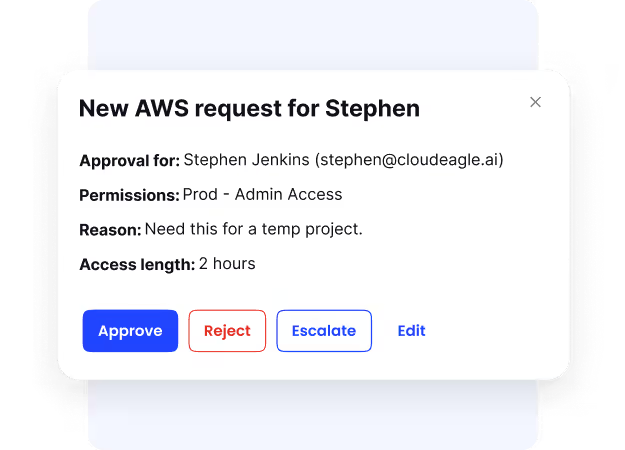

- Just-in-Time (JIT) access for sensitive tasks

- Privileged access guardrails

- Continuous access reviews

These controls are especially important for agentic AI, which can perform actions automatically.
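At its core, RBAC with least privilege is a deny-by-default lookup: users hold roles, roles carry permissions, and anything not explicitly granted is refused. The role names, users, and permission strings below are hypothetical.

```python
# Hypothetical role -> permission mapping; anything not listed is denied.
ROLE_PERMISSIONS = {
    "analyst": {"copilot:use", "bi-assistant:use"},
    "ml_engineer": {"copilot:use", "model-registry:read", "model-registry:deploy"},
    "support_agent": {"support-bot:use"},
}

USER_ROLES = {
    "alice": {"analyst"},
    "bob": {"ml_engineer"},
}

def permissions_for(user: str) -> set[str]:
    """Union of permissions across all of a user's roles (empty if unknown)."""
    perms = set()
    for role in USER_ROLES.get(user, set()):
        perms |= ROLE_PERMISSIONS.get(role, set())
    return perms

def is_allowed(user: str, permission: str) -> bool:
    """Deny by default: allowed only if some role explicitly grants it."""
    return permission in permissions_for(user)

print(is_allowed("alice", "copilot:use"))            # True
print(is_allowed("alice", "model-registry:deploy"))  # False
print(is_allowed("mallory", "copilot:use"))          # False (unknown user)
```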

Learn how CloudEagle.ai helped Bloom & Wild streamline employee onboarding and offboarding.

f. Ethical and Responsible AI Guidelines

Every AI program needs clear ethical guidelines, often built on seven commonly cited principles of responsible AI. These cover:

- Fairness

- Transparency

- Explainability

- Accountability

- Safety

- Human oversight

- Avoiding harm

These principles ensure AI is aligned with organizational values and public expectations.

g. Monitoring, Auditing, and Compliance

Finally, ongoing monitoring is essential. This includes:

- Real-time audit logs

- Model output monitoring

- Data flow tracking

- Automatic policy enforcement

- Anomaly and drift detection

- Incident logging
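Drift detection often compares the distribution of a model input or score in production against a baseline. One widely used measure is the Population Stability Index, PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the baseline and current proportions in bin i. In the sketch below, the bin edges and the 0.1 / 0.25 bands are common rules of thumb, not fixed standards.

```python
import bisect
import math

def psi(expected: list[float], actual: list[float], edges: list[float],
        eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` against the `expected` baseline.

    `edges` are ascending bin boundaries: values below edges[0] land in bin 0,
    values at or above edges[-1] land in the last bin.
    """
    def proportions(values: list[float]) -> list[float]:
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect.bisect_right(edges, v)] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

def drift_status(value: float) -> str:
    """Rule-of-thumb bands for interpreting PSI."""
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate drift"
    return "significant drift"

edges = [0.25, 0.5, 0.75]
baseline = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]  # e.g. model scores at launch
this_week = [0.6, 0.7, 0.8, 0.9, 0.9, 0.9, 0.95, 0.99]
print(drift_status(psi(baseline, baseline, edges)))   # stable
print(drift_status(psi(baseline, this_week, edges)))  # significant drift
```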

4. Policies & Controls Needed for a Strong AI Governance Framework

Enterprises need a mix of administrative, technical, and procedural controls.

Below are the must-have categories.

a. Administrative Controls

These set the rules and structure.

- AI acceptable use policies

- Data protection policies

- AI procurement guidelines

- Third-party AI risk assessments

- Governance committees & oversight roles

- Documentation requirements

b. Technical Controls

These enforce the rules automatically.

- Identity & access governance

- Continuous monitoring

- Privileged access restrictions

- Network controls

- Data encryption

- API security controls

- Model performance monitoring

- Audit logs

c. Procedural Controls

These ensure consistency.

- Model evaluation procedures

- Testing & validation workflows

- Bias detection processes

- Incident response playbooks

- Review and approval processes
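A bias detection step usually computes one or more fairness metrics on model outputs. The sketch below uses demographic parity difference: the gap between the highest and lowest rate of positive outcomes across groups. The 0.1 tolerance is an assumed example value, and which metric fits depends on the use case.

```python
from collections import defaultdict

def selection_rates(groups: list[str], predictions: list[int]) -> dict[str, float]:
    """Share of positive predictions (1s) for each group."""
    if len(groups) != len(predictions):
        raise ValueError("groups and predictions must be the same length")
    totals, positives = defaultdict(int), defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(groups: list[str], predictions: list[int]) -> float:
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())

groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
gap = demographic_parity_difference(groups, predictions)
print(gap)  # 0.5 (group A: 0.75, group B: 0.25)
if gap > 0.1:  # assumed tolerance
    print("flag model for fairness review")
```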

d. Specialized Controls for Agentic AI

With agentic AI on the rise, frameworks must include:

- Guardrails for autonomous actions

- Agent permission scopes

- Human-in-the-loop oversight

- Time-bound access

- Safety sandboxing

These controls keep autonomous agents operating within defined boundaries.
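Several of these controls can meet in a single checkpoint that every agent action passes through: the action must fall inside the agent's permission scope, the grant must not have expired, and high-risk actions are held for human approval. This is a minimal sketch; the agent name, action strings, and high-risk list are hypothetical, and sandboxing would sit at a separate layer.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

HIGH_RISK_ACTIONS = {"payments:transfer", "crm:delete_records"}  # assumed examples

@dataclass
class AgentGrant:
    """Hypothetical permission scope issued to one AI agent."""
    agent_id: str
    scopes: set[str]
    expires_at: datetime

def gate(grant: AgentGrant, action: str, now: datetime | None = None) -> str:
    """Decide 'allow', 'deny', or 'needs_approval' for a proposed agent action."""
    now = now or datetime.now(timezone.utc)
    if now >= grant.expires_at:
        return "deny"            # time-bound access has lapsed
    if action not in grant.scopes:
        return "deny"            # outside the agent's permission scope
    if action in HIGH_RISK_ACTIONS:
        return "needs_approval"  # human-in-the-loop before execution
    return "allow"

now = datetime.now(timezone.utc)
grant = AgentGrant("invoice-agent", {"erp:read", "payments:transfer"},
                   expires_at=now + timedelta(hours=1))
print(gate(grant, "erp:read", now))                       # allow
print(gate(grant, "payments:transfer", now))              # needs_approval
print(gate(grant, "crm:delete_records", now))             # deny
print(gate(grant, "erp:read", now + timedelta(hours=2)))  # deny
```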

5. Best Tools for Implementing AI Governance Frameworks

Enterprises often combine multiple solutions. Here are the categories and top tools used.

6. AI Governance Best Practices for IT, Security & Compliance Teams

Implementing AI governance effectively requires strong processes. Here’s how you can build it:

a. Start With Policy Before Technology

AI governance must begin with clear policies that define which AI tools are approved, what usage is allowed, and who can request access. Setting rules first prevents shadow AI, keeps teams aligned, and ensures technology choices support your risk tolerance instead of creating new vulnerabilities.

b. Use Centralized Access Management

To manage AI safely, access must be centralized. A unified system makes it easier to onboard users, remove access when people leave, enforce least-privilege rules, and monitor who is using which AI tools. This avoids scattered permissions that often lead to security gaps.

c. Discover All AI and SaaS Apps

You cannot govern what you cannot see. Automated discovery helps identify every AI and SaaS tool in use, including shadow AI and embedded AI features inside apps. With enterprises using 300+ SaaS tools on average, continuous scanning is essential to stay fully aware of your AI footprint.
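A simple form of discovery cross-references observed traffic against a catalog of known AI services. The sketch below assumes you already have (user, domain) pairs parsed from proxy or DNS logs; the catalog and the approved list are illustrative, and in practice discovery usually combines several signals such as SSO logs, expense data, and OAuth grants.

```python
# Illustrative catalog of AI service domains -> tool name (not exhaustive).
AI_CATALOG = {
    "chat.openai.com": "ChatGPT",
    "chatgpt.com": "ChatGPT",
    "gemini.google.com": "Gemini",
    "claude.ai": "Claude",
}
APPROVED = {"ChatGPT"}  # hypothetical approved list

def discover(observed: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Map each unapproved AI tool to the users seen accessing it.

    `observed` is a list of (user, domain) pairs, e.g. parsed from proxy logs.
    Domains are matched exactly (case-insensitive); subdomains are not expanded.
    """
    shadow: dict[str, set[str]] = {}
    for user, domain in observed:
        tool = AI_CATALOG.get(domain.lower())
        if tool and tool not in APPROVED:
            shadow.setdefault(tool, set()).add(user)
    return shadow

logs = [
    ("alice", "chatgpt.com"),
    ("bob", "gemini.google.com"),
    ("carol", "Claude.ai"),
    ("bob", "gemini.google.com"),
    ("dave", "example.com"),
]
print(discover(logs))  # {'Gemini': {'bob'}, 'Claude': {'carol'}}
```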

d. Apply Least Privilege Access Across the Board

AI tools, especially agentic or high-risk systems, should only be accessible to users who truly need them. Use RBAC, just-in-time access, temporary permissions, and automatic revocation to minimize over-privileged accounts and reduce the risk of misuse.
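Just-in-time access can be modeled as grants that carry an expiry and stop working once it passes, with a cleanup pass that revokes them. The `JitStore` class below is a hypothetical in-memory illustration; a real system would persist grants and revoke them in the target application. Passing `now` explicitly keeps the logic easy to test.

```python
from datetime import datetime, timedelta, timezone

class JitStore:
    """In-memory, time-bound grants keyed by (user, resource)."""

    def __init__(self) -> None:
        self._grants: dict[tuple[str, str], datetime] = {}

    def grant(self, user: str, resource: str, minutes: int, now: datetime) -> None:
        self._grants[(user, resource)] = now + timedelta(minutes=minutes)

    def has_access(self, user: str, resource: str, now: datetime) -> bool:
        expiry = self._grants.get((user, resource))
        return expiry is not None and now < expiry

    def revoke_expired(self, now: datetime) -> list[tuple[str, str]]:
        """Remove lapsed grants and return them, e.g. for an audit log."""
        expired = [key for key, exp in self._grants.items() if now >= exp]
        for key in expired:
            del self._grants[key]
        return expired

t0 = datetime(2025, 1, 1, 9, 0, tzinfo=timezone.utc)
store = JitStore()
store.grant("alice", "prod-llm-admin", minutes=60, now=t0)
print(store.has_access("alice", "prod-llm-admin", t0 + timedelta(minutes=30)))  # True
print(store.has_access("alice", "prod-llm-admin", t0 + timedelta(minutes=61)))  # False
print(store.revoke_expired(t0 + timedelta(minutes=61)))  # [('alice', 'prod-llm-admin')]
```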

e. Automate Access Reviews

Manual access reviews often miss outdated or risky permissions. Automating access reviews ensures that managers regularly confirm who should keep access, while AI-driven anomaly detection highlights unusual or unnecessary permissions. This reduces breach risk significantly.
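An automated review pass can pre-flag the entitlements a manager is most likely to revoke: access not justified by the user's current role (privilege creep after a role change) and access unused for a set period. The record layout, role baselines, and 90-day threshold below are assumptions, and these simple rules stand in for the more sophisticated anomaly detection described above.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta

@dataclass
class Entitlement:
    user: str
    role: str               # the user's current role from HR
    app: str
    last_used: date | None  # None = never used

ROLE_BASELINE = {  # hypothetical: apps each role is expected to need
    "marketing": {"copy-assistant", "crm"},
    "finance": {"erp", "forecasting-ai"},
}
UNUSED_AFTER = timedelta(days=90)  # assumed inactivity threshold

def review(entitlements: list[Entitlement], today: date) -> list[tuple[str, str, str]]:
    """Return (user, app, reason) for each entitlement that needs a reviewer's decision."""
    findings = []
    for e in entitlements:
        if e.app not in ROLE_BASELINE.get(e.role, set()):
            findings.append((e.user, e.app, "not in current role baseline"))
        elif e.last_used is None or today - e.last_used > UNUSED_AFTER:
            findings.append((e.user, e.app, "unused for more than 90 days"))
    return findings

today = date(2025, 6, 30)
entitlements = [
    Entitlement("priya", "marketing", "copy-assistant", date(2025, 6, 28)),
    Entitlement("priya", "marketing", "forecasting-ai", date(2025, 6, 1)),  # left over from finance
    Entitlement("sam", "finance", "erp", date(2025, 1, 15)),
]
for finding in review(entitlements, today):
    print(finding)
# ('priya', 'forecasting-ai', 'not in current role baseline')
# ('sam', 'erp', 'unused for more than 90 days')
```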

Discover how Dezerv automated its app access review process with CloudEagle.ai.

f. Monitor for Abnormal AI Behavior

AI misuse often shows up as unusual behavior: large data downloads, strange prompts, attempts to access restricted datasets, or abnormal API usage. Real-time monitoring helps security teams catch insider threats or misuse before it causes damage.
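A basic starting point for spotting unusual behavior is to compare each user's activity against their own history. The sketch below flags a day whose data-download volume sits more than three standard deviations above the user's baseline; the threshold, the minimum history length, and the function name are assumptions, and real deployments typically combine many signals.

```python
import statistics

def is_anomalous(history_mb: list[float], today_mb: float,
                 z_threshold: float = 3.0, min_days: int = 7) -> bool:
    """Flag today's download volume if it is an outlier versus the user's history."""
    if len(history_mb) < min_days:
        return False  # not enough baseline to judge
    mean = statistics.mean(history_mb)
    stdev = statistics.stdev(history_mb)
    if stdev == 0:
        return today_mb > mean  # flat history: any increase stands out
    return (today_mb - mean) / stdev > z_threshold

history = [120, 95, 130, 110, 105, 125, 115]  # MB per day
print(is_anomalous(history, 140))   # False
print(is_anomalous(history, 2000))  # True
```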

g. Enforce Data Security Controls

AI systems rely heavily on sensitive data. Use encryption, tokenization, masking, and strict access rules to protect training data and user inputs. These controls support compliance with standards and regulations such as ISO 27001, SOC 2, GDPR, and HIPAA while preventing exposure of sensitive information.
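Tokenization and masking replace sensitive values with stand-ins that are useless on their own. One simple variant, sketched below, derives a deterministic token with a keyed HMAC, so the same input always maps to the same token (useful for joins and analytics) without storing the raw value. This is pseudonymization rather than vault-based tokenization: the original cannot be recovered from the token. The hard-coded key is a placeholder assumption; a real key belongs in a secrets manager.

```python
import hashlib
import hmac

def tokenize(value: str, key: bytes, prefix: str = "tok_") -> str:
    """Deterministic, keyed token for a sensitive value (HMAC-SHA256, truncated)."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return prefix + digest[:16]

def mask(value: str, visible: int = 4, fill: str = "*") -> str:
    """Show only the last `visible` characters, e.g. for display in logs."""
    if visible <= 0:
        return fill * len(value)
    return fill * max(len(value) - visible, 0) + value[-visible:]

KEY = b"replace-with-a-secret-from-your-kms"  # placeholder; never hard-code real keys

print(tokenize("123-45-6789", KEY) == tokenize("123-45-6789", KEY))  # True: deterministic
print(mask("4111111111111111"))  # ************1111
```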

h. Use Real-Time Governance Tools

AI changes too quickly for annual audits to be effective. Real-time governance platforms like CloudEagle.ai provide continuous discovery, access monitoring, compliance alerts, and policy enforcement across all AI and SaaS tools, helping enterprises stay secure between audits, not just at audit time.

7. Why Is CloudEagle.ai Essential for AI Governance?

AI governance requires much more than model risk controls.

You need to govern:

- Who uses AI

- How they use it

- What data they access

- Whether usage aligns with policy

- Whether access remains valid over time

CloudEagle.ai solves the identity and access side of AI governance—where most real-world AI breaches occur.

Here’s how CloudEagle.ai helps:

a. Enforcing Strong Identity & Access Controls

Most AI governance frameworks focus on risk assessment, validation, or documentation. But the highest-risk questions are identity-related:

- Who is using AI tools?

- What data are they feeding into models?

- Do they still need that access?

- Are they bypassing IT using shadow AI?

CloudEagle.ai governs the human and machine access layer, which is where most AI data leaks and security failures occur.

b. Providing Full Visibility Into All AI Tools (Including Shadow AI)

AI usage is exploding across SaaS tools—often without IT approval. CloudEagle.ai helps organizations:

- Automatically discover all AI tools in use

- Identify embedded AI inside SaaS applications

- Detect unauthorized and high-risk AI usage

This visibility is essential for any AI governance framework or AI data governance strategy.

c. Enforcing Least-Privilege Access Across All AI Systems

CloudEagle.ai ensures users only access the AI tools and data they need by enforcing:

- Role-Based Access Control (RBAC): Access is automatically assigned based on job role, so employees only use the AI tools and data relevant to their responsibilities. This eliminates manual mistakes and keeps permissions consistent across teams.

- Just-in-Time Access (JIT): Instead of permanent high-level access, users receive temporary privileges only when needed. These expire automatically, reducing the time window in which sensitive data or AI actions could be misused.

- Privileged Access Controls: High-risk AI systems and agentic AI tools are tightly restricted. Only approved users can access them, and every privileged action is tracked for accountability and compliance.

- Continuous Access Reviews: CloudEagle.ai regularly checks whether users still need the permissions they have. When roles change or tools are no longer needed, access is revoked automatically to prevent lingering or unauthorized accounts.

This protects enterprises from over-permissioning, one of the most common causes of AI-driven data exposure.

d. Automating Provisioning & Deprovisioning for AI Tools

When employees join, switch roles, or leave, CloudEagle.ai automatically adjusts their AI access.

This prevents:

- Orphaned AI accounts

- Lingering API keys

- Unauthorized agent actions

- Compliance violations

This aligns with the key elements of an AI governance framework, especially access governance and lifecycle controls.
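Lifecycle automation of this kind generally reduces to reconciliation: compute the access a person should have from their HR status and current role, compare it with what they actually hold, and grant or revoke the difference. The sketch below is a generic illustration of that technique, not CloudEagle.ai's implementation; the role map and helper names are hypothetical, and API keys are treated as just another entitlement so that movers and leavers lose them too.

```python
ROLE_ACCESS = {  # hypothetical desired state per role
    "sales": {"crm-ai", "email-assistant"},
    "engineering": {"code-assistant", "llm-api-key"},
}

def desired_access(status: str, role: str) -> set[str]:
    """Leavers get nothing; everyone else gets their role's baseline."""
    if status == "terminated":
        return set()
    return ROLE_ACCESS.get(role, set())

def reconcile(status: str, role: str, current: set[str]) -> tuple[set[str], set[str]]:
    """Return (to_grant, to_revoke) that moves `current` to the desired state."""
    desired = desired_access(status, role)
    return desired - current, current - desired

# Mover: an engineer transfers to sales but still holds an API key.
to_grant, to_revoke = reconcile("active", "sales", {"code-assistant", "llm-api-key"})
print(sorted(to_grant))   # ['crm-ai', 'email-assistant']
print(sorted(to_revoke))  # ['code-assistant', 'llm-api-key']

# Leaver: everything is revoked.
print(sorted(reconcile("terminated", "sales", {"crm-ai"})[1]))  # ['crm-ai']
```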

e. Ensuring AI Usage Aligns With Organizational Policies

CloudEagle.ai monitors whether users follow AI usage policies, such as:

- restrictions on entering sensitive data into LLMs

- limits on agentic AI actions

- approved vs unapproved AI tools

- industry-specific compliance requirements

This transforms AI governance from a static policy document into an enforceable operational system.

f. Maintaining Continuous, Auditable Logs for Compliance

To support compliance with SOC 2, GDPR, ISO 27001, and the EU AI Act, CloudEagle.ai maintains detailed logs of:

- Who accessed AI tools

- When and why they accessed them

- What data they interacted with

- Which permissions changed over time

This is crucial for proving compliance in AI audits.
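Audit logs carry more weight as evidence when tampering is detectable. A common technique is hash chaining: each entry stores the hash of the previous one, so editing an earlier entry breaks every later link. The sketch below is a generic illustration with assumed field names, not CloudEagle.ai's implementation. Deleting the newest entries is not detectable from the chain alone; that usually requires keeping the latest hash somewhere the log writer cannot modify.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes identically
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

class AuditLog:
    """Append-only, hash-chained log of AI access events."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, ts: str, user: str, tool: str, action: str, data: str, reason: str) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"ts": ts, "user": user, "tool": tool, "action": action,
                "data": data, "reason": reason, "prev": prev}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and link; False if any entry was altered or the chain is broken."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True

log = AuditLog()
log.append("2025-07-01T09:00:00Z", "alice", "copilot", "access", "sales_q3.csv", "quarterly report")
log.append("2025-07-01T09:05:00Z", "it-admin", "copilot", "role_change:viewer->editor", "-", "ticket approved")
print(log.verify())                     # True
log.entries[0]["data"] = "payroll.csv"  # tamper with history
print(log.verify())                     # False
```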

“Compliance is not security. But security must always be compliant.” ― Shamla Naidoo, Head of Cloud Strategy, Netskope

g. Controlling the Risks of Agentic & Autonomous AI Systems

As enterprises adopt agentic AI workflows, AI agents can:

- autonomously execute tasks

- modify data

- trigger workflows

- access sensitive systems

CloudEagle.ai protects these environments by:

- limiting agent access

- enforcing JIT privileges

- monitoring agent behavior

- flagging unsafe or unauthorized actions

This is essential for agentic AI governance frameworks.

h. Ensuring AI Governance Scales With the Enterprise

Governance isn’t a one-time setup. It must scale as:

- new AI tools emerge

- regulations evolve

- agentic AI becomes more common

- SaaS environments grow

CloudEagle.ai continuously monitors, updates, and enforces access controls so governance remains effective—even at scale.


8. Conclusion

AI is transforming enterprises, but without proper governance, it creates risks like shadow AI, biased models, compliance failures, and excessive access. Strong AI governance frameworks protect data, reduce risk, ensure compliance, boost transparency, control access, enforce policies, maintain audit readiness, and enable safe innovation.

Modern AI innovation demands equally modern governance.

CloudEagle.ai operationalizes governance by discovering AI and SaaS usage across 500+ applications, automating access reviews, enforcing least privilege, centralizing contract management, and providing real-time compliance reporting, all from a single platform.

Book a demo to see how CloudEagle.ai brings governance, compliance, and security together at scale.

9. FAQs

1. What are the core pillars of AI governance?

AI governance rests on seven core pillars: ethical standards for fairness, risk management to spot threats, data governance for quality, model governance for performance, access governance for security, monitoring and compliance for oversight, and transparency with accountability to build trust.

2. What are the 4 P's of governance in an AI context?

The 4 P's are simple: People for roles and accountability, Processes for workflows and approvals, Policies for AI rules and standards, and Platforms for governance tools and oversight.

3. What is the 30% rule in AI governance?

The 30% rule is an informal rule of thumb rather than a formal standard: it suggests AI shouldn't make more than 30% of critical business decisions on its own, with humans overseeing the rest to keep control and reduce risk.

4. What are the 7 principles of responsible AI?

Responsible AI follows seven principles: fairness to cut bias, transparency for clear decisions, accountability for results, privacy protection, security against attacks, reliability for steady work, and human oversight for key calls.

5. What are the 7 C's of AI governance?

The 7 C's cover Clarity for rules, Control for access, Compliance for laws, Consistency for even use, Confidence in results, Continuity for ongoing checks, and Culture for responsible habits.
