AI Compliance Checklist for 2025

As AI adoption accelerates across every industry, companies are rushing to integrate LLMs, autonomous agents, embedded AI features, and generative AI tools into daily workflows. But behind the innovation lies a growing problem: AI is scaling faster than governance.

Every new tool brings new risks. Nearly 71% of enterprises are already using AI without meeting core requirements such as SOC 2, GDPR, or the EU AI Act, often without realizing it.

This AI compliance checklist breaks it all down. From access controls to data privacy, it helps you stay compliant, reduce risk, and avoid costly surprises. Whether you're managing AI vendors, automating reviews, or building an audit-ready system, this guide is your starting point.

TL;DR

- AI compliance is crucial for preventing data leaks, audit failures, and legal risks as requirements under SOC 2, GDPR, and the EU AI Act become more stringent.

- SOC 2 compliance for AI necessitates stringent access controls, logging, encryption, and vendor risk assessments to safeguard sensitive data and ensure secure AI usage.

- GDPR mandates transparency, user rights, consent, and data minimization for any AI processing personal data.

- The EU AI Act introduces risk-based requirements, including documentation, oversight, and governance for high-risk and general-purpose AI systems.

- CloudEagle.ai helps automate AI compliance through real-time access reviews, policy enforcement, AI usage monitoring, and audit-ready reporting.

AI Compliance Checklist: Everything You Need for SOC 2, GDPR, and the EU AI Act

A comprehensive AI compliance checklist should give your team full control over how AI tools collect data, make decisions, and interact with users. At a minimum, it should cover the SOC 2, GDPR, EU AI Act, and AI vendor controls detailed below.

Why AI Compliance Matters for Modern Enterprises

AI introduces new risks that traditional security and compliance tools were never built to handle. Without the right controls, AI can expose data, act unpredictably, or operate outside IT visibility.

According to CloudEagle.ai’s IGA report, 60% of AI and SaaS apps operate outside IT visibility.

Key Risks AI Creates

1. LLMs Can Leak Confidential Data: Employees may paste sensitive data into AI tools, and those tools can unintentionally reveal or reuse that information elsewhere.

2. Shadow AI Tools Bypass Governance: Unapproved AI extensions or apps run silently, creating security blind spots that IT cannot track or manage.

3. AI Agents Act Without Oversight: Agentic AI can change settings, access data, or trigger workflows automatically, making errors harder to catch.

4. Embedded AI Features Lack Visibility: Apps now include hidden AI capabilities that IT teams never approved, increasing risk without warning.

5. Regulators Demand Transparency & Documentation: Laws like GDPR and the EU AI Act require transparency, data protection, and clear audit trails for AI-driven decisions, with the strictest obligations applying to fully automated and high-risk systems.

What’s at Stake for Organizations?

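1. GDPR Fines & EU AI Act Penalties: Non-compliant AI use can trigger multimillion-euro fines and legal consequences. GDPR fines can reach €20 million or 4% of worldwide annual turnover, whichever is higher, and the most serious EU AI Act violations can reach €35 million or 7%.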

2. SOC 2 Audit Failures: Missing documentation, unclear access logs, or unmanaged AI systems can cause companies to fail compliance audits.

3. Data Exposure & Privacy Incidents: AI tools can easily expose PII or sensitive business information if not governed properly.

4. Loss of Customer Trust: Customers lose confidence if AI mishandles their data or behaves unpredictably.

5. Business & Operational Disruptions: AI errors or unauthorized actions can break workflows, corrupt data, or cause downtime.

6. Legal Liability for AI-driven Harm: Organizations can be held responsible for decisions or actions taken by their AI systems.

Shamla Naidoo, Head of Cloud Strategy, Netskope, says:

“Compliance is not security. But security must always be compliant.”

SOC 2 AI Compliance Checklist: Security, Availability, Integrity & Confidentiality

SOC 2 is built around five Trust Service Criteria: Security, Availability, Processing Integrity, Confidentiality, and Privacy.

Below is a SOC 2-ready compliance checklist tailored specifically for AI systems.

1. AI Data Security Controls

SOC 2 requires strict controls around data access, storage, and processing.

- Encrypt data used for AI training

- Encrypt prompts, inputs, and outputs

- Monitor sensitive data input into AI models

- Enforce least-privilege permissions

- Detect unauthorized access or shadow AI tools

- Apply multi-factor authentication

- Establish secure API pathways for AI integrations

2. AI Access Governance

SOC 2 auditors now expect identity governance for AI:

- Automated provisioning/deprovisioning for all AI tools

- RBAC for AI applications and AI agents

- JIT access for high-risk AI functions

- AI tool access reviews every 30, 60, or 90 days, depending on risk

- Audit logging for all AI usage

- No orphaned AI accounts

CloudEagle.ai is one of the few platforms that automates all of the above.
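To make the review cadence and orphaned-account checks concrete, here is a minimal sketch of a periodic review job. It assumes a hypothetical in-memory list of AI tool accounts, an HR roster of active employees, and a mapping of high, medium, and low risk tiers to 30, 60, and 90 day review intervals; a real deployment would pull this data from an identity provider and HR system.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical mapping of risk tier -> review interval in days (30/60/90).
REVIEW_INTERVAL_DAYS = {"high": 30, "medium": 60, "low": 90}


@dataclass
class AIAccount:
    user: str            # identity that owns the account
    tool: str            # AI tool or agent the account belongs to
    risk_tier: str       # "high", "medium", or "low"
    last_reviewed: date


def review_findings(accounts, active_employees, today):
    """Return (overdue_reviews, orphaned_accounts) for a list of AI accounts."""
    overdue, orphaned = [], []
    for acct in accounts:
        # An account whose owner is no longer on the HR roster is orphaned.
        if acct.user not in active_employees:
            orphaned.append(acct)
            continue
        interval = timedelta(days=REVIEW_INTERVAL_DAYS[acct.risk_tier])
        # Flag accounts whose last review is older than their tier allows.
        if today - acct.last_reviewed > interval:
            overdue.append(acct)
    return overdue, orphaned


if __name__ == "__main__":
    today = date(2025, 6, 30)
    accounts = [
        AIAccount("alice", "chat-assistant", "high", date(2025, 5, 1)),
        AIAccount("bob", "code-copilot", "low", date(2025, 5, 1)),
        AIAccount("carol", "chat-assistant", "medium", date(2025, 6, 1)),
    ]
    overdue, orphaned = review_findings(accounts, {"alice", "bob"}, today)
    print([a.user for a in overdue])   # ['alice']
    print([a.user for a in orphaned])  # ['carol']
```

Orphaned accounts are checked first because an account with no active owner should be removed regardless of when it was last reviewed.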

3. Logging & Monitoring Requirements

SOC 2 expects audit trails that show who did what, and when, across your AI systems.

- Log all AI prompts

- Log AI agent actions

- Log model output transformations

- Monitor real-time AI usage

- Track anomalies, data exfiltration attempts, or risky behavior
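One way to make prompt and agent-action logs audit-ready is to write them as append-only records in which each entry carries the hash of the previous one, so any later edit breaks the chain. The sketch below is illustrative only: the record fields (`actor`, `tool`, `event`) are hypothetical, it stores a SHA-256 digest of each prompt or output rather than the raw text, and it keeps records in memory instead of durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(record):
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class AuditLog:
    """Append-only, hash-chained log of AI usage events (in-memory sketch)."""

    def __init__(self):
        self.records = []

    def append(self, actor, tool, event, text):
        prev = self.records[-1]["hash"] if self.records else GENESIS
        record = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "actor": actor,   # user or AI agent identity
            "tool": tool,     # AI tool or model endpoint
            "event": event,   # e.g. "prompt", "output", "agent_action"
            # Store a digest instead of raw text to keep sensitive data out of logs.
            "text_sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
            "prev": prev,
        }
        record["hash"] = _digest(record)  # hash covers every field above
        self.records.append(record)
        return record

    def verify(self):
        """Recompute every hash; return False if any record was altered or reordered."""
        prev = GENESIS
        for rec in self.records:
            body = {k: v for k, v in rec.items() if k != "hash"}
            if rec["prev"] != prev or _digest(body) != rec["hash"]:
                return False
            prev = rec["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append("alice", "chat-assistant", "prompt", "Summarize Q3 revenue")
    log.append("agent-7", "ticket-bot", "agent_action", "closed ticket 123")
    print(log.verify())                  # True
    log.records[0]["actor"] = "mallory"  # simulate tampering
    print(log.verify())                  # False
```

Hash chaining makes tampering detectable, not impossible; auditors still need the log stored somewhere that ordinary users cannot rewrite wholesale.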

4. Vendor Compliance for AI Providers

SOC 2 requires assessing and monitoring the risks posed by third-party vendors.

- Confirm AI vendors follow SOC 2 or equivalent frameworks

- Evaluate AI data retention policies

- Validate vendor security documentation (SOC 2, ISO 27001, DPAs)

- Assess where the AI model data is stored and processed

- Maintain vendor compliance records

A full AI vendor compliance checklist (included later) covers this in more detail.

5. Risk Assessments & Internal Controls

- Conduct AI risk assessments quarterly

- Conduct threat modeling for AI systems

- Maintain internal AI security policies

- Track remediation plans

- Perform incident response testing

6. Privacy & Confidentiality Controls

- Monitor personal data sent to AI systems

- Restrict PII via prompt filters

- Automatically flag and block sensitive categories

- Ensure AI outputs do not leak private data
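These privacy controls are often implemented as a filter that inspects prompts before they leave the organization. The sketch below is a deliberately simple, regex-based example: the patterns (email addresses, US Social Security numbers, and 16-digit card-like numbers) and the blocked keyword list are assumptions for illustration. Regexes alone will miss many forms of personal data, so production filters usually combine them with dedicated classifiers.

```python
import re

# Illustrative patterns only; real deployments need broader, tested detectors.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CARD": re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b"),
}

# Hypothetical sensitive categories that block the prompt outright.
BLOCKED_TERMS = {"diagnosis", "medical record", "passport number"}


def filter_prompt(prompt):
    """Return (allowed, redacted_prompt, findings) for an outgoing AI prompt."""
    lowered = prompt.lower()
    blocked = sorted(t for t in BLOCKED_TERMS if t in lowered)
    if blocked:
        # Block entirely rather than redact when a sensitive category appears.
        return False, None, blocked

    findings = []
    redacted = prompt
    for label, pattern in PII_PATTERNS.items():
        if pattern.search(redacted):
            findings.append(label)
            redacted = pattern.sub(f"[{label}]", redacted)  # replace each match with a tag
    return True, redacted, findings


if __name__ == "__main__":
    print(filter_prompt("Email jane.doe@example.com about SSN 123-45-6789"))
    # (True, 'Email [EMAIL] about SSN [SSN]', ['EMAIL', 'SSN'])
    print(filter_prompt("Attach the patient's diagnosis"))
    # (False, None, ['diagnosis'])
```

Returning the findings alongside the redacted text lets the same call feed the flagging and monitoring items in the checklist.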

GDPR AI Compliance Checklist: Data Rights, Consent, and AI Transparency

GDPR applies to all AI systems that process personal data.

The GDPR checklist includes:

1. Lawful Basis for AI Processing

- Identify the legal basis (such as consent, legitimate interest, or contract)

- Provide clear disclosures about AI processing

- Document why AI is needed

- Maintain processing records

2. Data Minimization

- Limit training data to necessary fields

- Avoid using PII in prompts

- Strip identifiers before processing

- Automatically redact unneeded personal data
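A common way to strip identifiers while still letting records be linked is keyed pseudonymization: keep only the fields the AI task needs and replace each direct identifier with an HMAC of its value. The sketch below assumes hypothetical field names and a secret key held outside the AI pipeline. Note that pseudonymized data is still personal data under GDPR, so this reduces exposure but does not take the data out of scope.

```python
import hashlib
import hmac

# Hypothetical direct identifiers to replace before AI processing.
IDENTIFIER_FIELDS = ("name", "email", "customer_id")
# Hypothetical fields the AI task actually needs; everything else is dropped.
ALLOWED_FIELDS = ("ticket_text", "product", "plan")


def pseudonymize(record, key):
    """Keep only needed fields and replace identifiers with keyed HMAC tokens."""
    out = {f: record[f] for f in ALLOWED_FIELDS if f in record}
    for field in IDENTIFIER_FIELDS:
        if field in record:
            mac = hmac.new(key, str(record[field]).encode("utf-8"), hashlib.sha256)
            # Same value + same key -> same token, so records stay linkable.
            # Without the key, tokens cannot be recomputed from guessed values.
            out[field + "_token"] = mac.hexdigest()[:16]
    return out


if __name__ == "__main__":
    key = b"load-me-from-a-secrets-manager"  # placeholder secret
    row = {
        "name": "Jane Doe",
        "email": "jane@example.com",
        "ticket_text": "Export fails on large files",
        "plan": "pro",
        "address": "1 Main St",  # not needed by the AI task, so it is dropped
    }
    print(pseudonymize(row, key))
```

Using a keyed HMAC rather than a plain hash matters: plain hashes of emails or names can be reversed by hashing a list of likely values.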

3. User Consent & Transparency

- Provide clear descriptions of automated decision-making

- Explain risks, purpose, and impact

- Offer opt-out options

- Notify users when AI is used

4. Rights of the Data Subject

GDPR requires mechanisms for:

- Right to access

- Right to rectification

- Right to erasure

- Right to restrict processing

- Right to object

- Rights related to automated decision-making, including meaningful information about the logic involved (Articles 13–15 and 22)

AI outputs must be retrievable and explainable.

5. Data Retention & Deletion

- Define retention periods for prompts, logs, and training data

- Ensure AI vendors delete data on request

- Automate deletion workflows
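Retention periods are easiest to enforce when they live in configuration and a scheduled job finds everything that has aged out. The sketch below assumes hypothetical retention periods per data type (the figures are placeholders, not recommendations) and an in-memory list of records. It only sorts records into buckets; vendor-side deletion would additionally require each vendor's own deletion mechanism, which is not shown.

```python
from datetime import datetime, timedelta, timezone

# Placeholder retention periods per data type; set these from your own policy.
RETENTION = {
    "prompt": timedelta(days=30),
    "ai_log": timedelta(days=365),
    "training_sample": timedelta(days=730),
}


def purge_expired(records, now):
    """Split records into (kept, expired, unclassified) by retention period."""
    kept, expired, unclassified = [], [], []
    for rec in records:
        limit = RETENTION.get(rec["type"])
        if limit is None:
            # No policy for this data type yet: keep it, but surface it for review.
            unclassified.append(rec)
        elif now - rec["created"] > limit:
            expired.append(rec)  # candidates for deletion
        else:
            kept.append(rec)
    return kept, expired, unclassified


if __name__ == "__main__":
    now = datetime(2025, 6, 30, tzinfo=timezone.utc)
    records = [
        {"id": 1, "type": "prompt", "created": now - timedelta(days=45)},
        {"id": 2, "type": "prompt", "created": now - timedelta(days=5)},
        {"id": 3, "type": "chat_export", "created": now - timedelta(days=900)},
    ]
    kept, expired, unclassified = purge_expired(records, now)
    print([r["id"] for r in expired])       # [1]
    print([r["id"] for r in unclassified])  # [3]
```

Surfacing unclassified data types instead of silently keeping or deleting them forces a policy decision for every new kind of AI data.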

6. DPIAs (Data Protection Impact Assessments)

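GDPR (Article 35) requires a DPIA when processing is likely to result in a high risk to individuals, which commonly applies to AI systems that: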

- Use sensitive data

- Affect user rights

- Make automated decisions

- Process large-scale personal data

Checklist:

- Complete a DPIA before deploying any AI system that meets these criteria

- Update annually or after major model changes

- Document all risks and mitigations

EU AI Act Compliance Checklist: High-Risk Systems, Documentation & Risk Controls

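The EU AI Act introduces some of the world's strictest AI rules. It applies to providers that place AI systems on the EU market, to deployers located in the EU, and to providers and deployers elsewhere whose AI outputs are used in the EU.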

Below is your full EU AI Act compliance checklist.

1. Classify Your AI System

The Act sorts AI systems into four risk tiers:

- Unacceptable Risk: Completely banned.

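- High Risk: Uses such as hiring, credit scoring, education, biometric identification, critical infrastructure, access to essential public services, law enforcement, and the administration of justice, plus AI used as a safety component of regulated products such as medical devices.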

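- Limited Risk: Chatbots and other systems that mainly carry transparency obligations, such as AI-generated content. General-purpose models like LLMs have their own obligations.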

- Minimal Risk: Games, entertainment, UI personalization.

Your first step:

- Determine your AI risk category

- Document classification decisions
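Documenting classification decisions can be as simple as recording each system's intended use, the tier assigned, who decided, and why. The sketch below is a hypothetical triage helper, not a legal determination: the keyword hints only loosely mirror the tiers listed above, and any system that matches nothing is routed to legal review rather than assumed to be minimal risk.

```python
from dataclasses import dataclass, field
from datetime import date

# Hypothetical keyword hints per tier, strictest first; for triage only.
TIER_HINTS = [
    ("unacceptable", {"social scoring"}),
    ("high", {"hiring", "credit scoring", "biometric", "medical",
              "public services", "legal decision"}),
    ("limited", {"chatbot", "generated content"}),
    ("minimal", {"game", "ui personalization", "entertainment"}),
]


@dataclass
class ClassificationRecord:
    system: str
    intended_use: str
    tier: str
    rationale: str
    decided_by: str
    decided_on: date = field(default_factory=date.today)


def classify(system, intended_use, decided_by):
    """Suggest a risk tier from the intended use and record the reasoning."""
    use = intended_use.lower()
    # Tiers are checked strictest first, so the most restrictive match wins.
    for tier, hints in TIER_HINTS:
        matched = sorted(h for h in hints if h in use)
        if matched:
            return ClassificationRecord(system, intended_use, tier,
                                        f"matched: {', '.join(matched)}", decided_by)
    return ClassificationRecord(system, intended_use, "needs_legal_review",
                                "no hint matched", decided_by)


if __name__ == "__main__":
    rec = classify("resume-ranker", "Ranks candidates during hiring", "legal@corp")
    print(rec.tier, "|", rec.rationale)  # high | matched: hiring
    print(classify("forecast", "Inventory demand forecasting", "legal@corp").tier)
    # needs_legal_review
```

Defaulting to "needs_legal_review" keeps unmatched systems visible instead of letting them slip into the lowest tier by accident.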

2. High-Risk System Requirements

High-risk AI must meet the following:

Risk Management System

- Continuous risk monitoring

- Testing

- Evaluation

- Mitigation

Data Quality & Governance

- Training data documentation

- Bias evaluation

- Synthetic data risk controls

Technical Documentation

- Model architecture

- Data sources

- Explanation of logic

Logging & Traceability

- Prompts

- Outputs

- Model changes

- Agent behavior

Human Oversight

- Ability to override or shut down AI

- Review of automated decisions
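Human oversight for AI agents is often implemented as an approval gate plus a stop switch: low-impact actions run automatically, high-impact ones wait for a named reviewer, and an operator can halt the agent entirely. The sketch below is a minimal illustration; the action names and the set of high-impact actions are hypothetical, and "executed" marks the point where a real system would dispatch the action.

```python
# Hypothetical set of agent actions that always require human sign-off.
HIGH_IMPACT = {"delete_records", "change_permissions", "send_payment"}


class OversightGate:
    def __init__(self):
        self.halted = False
        self.pending = []    # (action, params) awaiting human review
        self.executed = []   # (action, approver) pairs for the audit trail
        self.rejected = []   # (action, reviewer) pairs for the audit trail

    def halt(self):
        """Stop switch: block every further action until a human resumes."""
        self.halted = True

    def resume(self):
        self.halted = False

    def submit(self, action, params):
        if self.halted:
            return "blocked"
        if action in HIGH_IMPACT:
            self.pending.append((action, params))
            return "pending_review"
        self.executed.append((action, "auto"))  # low impact: runs without review
        return "executed"

    def approve(self, index, reviewer):
        """A human approves a queued action; the reviewer is recorded."""
        if self.halted:
            return "blocked"
        action, _params = self.pending.pop(index)
        self.executed.append((action, reviewer))
        return "executed"

    def reject(self, index, reviewer):
        """A human overrides the agent and discards the queued action."""
        action, _params = self.pending.pop(index)
        self.rejected.append((action, reviewer))
        return "rejected"


if __name__ == "__main__":
    gate = OversightGate()
    print(gate.submit("summarize_ticket", {"id": 42}))     # executed
    print(gate.submit("delete_records", {"table": "crm"}))  # pending_review
    print(gate.approve(0, "ops-lead"))                      # executed
    gate.halt()
    print(gate.submit("summarize_ticket", {"id": 43}))     # blocked
```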

Accuracy, Robustness & Security Controls

- Testing standards

- Validation

- Penetration testing

- Adversarial attack resistance

3. General-Purpose AI (GPAI) Requirements

Providers of general-purpose AI models, including LLMs and other foundation models, must provide:

- Model capabilities and limitations

- Training dataset summaries

- Cybersecurity documentation

- Risk reports

- Model update logs

4. Transparency Obligations

- Clearly label AI-generated content

- Disclose the use of chatbots

- Inform users about automated decisions

5. Provider & Deployer Requirements

- Register high-risk AI in the EU database

- Maintain governance documentation

- Monitor AI lifecycle changes

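- Retain technical documentation for 10 years after the system is placed on the market, and keep automatically generated logs for at least six months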

AI-Driven Compliance Checklist Automation: How Companies Reduce Manual Work

Many companies still manage AI compliance by hand, using spreadsheets, email threads, Slack messages, and manual reviews. The process is slow and error-prone, and it often leads to missed steps and failed audits.

Modern enterprises use AI-driven compliance automation:

- Automatic access reviews

- AI usage monitoring

- Shadow AI detection

- Automated documentation generation

- Continuous risk scoring

- Real-time policy enforcement

- Centralized evidence repository

- Automated onboarding/offboarding compliance

Automating these tasks can reduce compliance overhead by an estimated 60–80%.

AI Vendor Data Privacy & Compliance Checklist

AI vendors must meet strict standards. Evaluate every AI vendor using this checklist:

Vendor Security Controls

- SOC 2 Type 2

- ISO 27001

- GDPR compliance

- EU AI Act preparedness

- Zero retention or limited data retention

- Secure encryption pipelines

- Data isolation

Vendor Data Handling Policies

- Will training data include your inputs?

- Are prompts stored? For how long?

- Can user data be deleted on request, and how quickly?

- What metadata is logged?

- Where is data stored (regions/geographies)?

Vendor AI Model Controls

- Does the vendor provide model documentation?

- Are model vulnerabilities disclosed?

- Does the vendor support custom guardrails?

- Does the vendor support red-teaming or testing?

Vendor Risk Reporting

- Incident response SLAs

- Breach notification timelines

- Vulnerability reporting

- Access logging
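Vendor reviews are easier to repeat and audit when the checklist is encoded as data and each vendor's answers are compared against it. The sketch below assumes a hypothetical questionnaire format and a few required answers drawn from the checklist above (SOC 2 Type 2, ISO 27001, no training on customer inputs, deletion on request, limited prompt retention, approved storage regions); the thresholds and regions are placeholders.

```python
# Hypothetical required answers derived from the vendor checklist.
REQUIREMENTS = {
    "soc2_type2": True,
    "iso27001": True,
    "trains_on_customer_inputs": False,
    "supports_deletion_on_request": True,
}
MAX_PROMPT_RETENTION_DAYS = 30   # placeholder policy threshold
ALLOWED_REGIONS = {"eu", "us"}   # placeholder data-residency policy


def assess_vendor(answers):
    """Return a list of gaps between a vendor's questionnaire answers and policy."""
    gaps = []
    for key, required in REQUIREMENTS.items():
        # Missing answers count as gaps, not as passes.
        if answers.get(key) != required:
            gaps.append(f"{key}: expected {required}, got {answers.get(key)}")
    retention = answers.get("prompt_retention_days")
    if retention is None or retention > MAX_PROMPT_RETENTION_DAYS:
        gaps.append(f"prompt_retention_days: {retention} (max {MAX_PROMPT_RETENTION_DAYS})")
    regions = set(answers.get("storage_regions", []))
    if not regions or not regions <= ALLOWED_REGIONS:
        gaps.append(f"storage_regions: {sorted(regions)} not within {sorted(ALLOWED_REGIONS)}")
    return gaps


if __name__ == "__main__":
    vendor = {
        "soc2_type2": True,
        "iso27001": True,
        "trains_on_customer_inputs": True,
        "supports_deletion_on_request": True,
        "prompt_retention_days": 90,
        "storage_regions": ["us"],
    }
    for gap in assess_vendor(vendor):
        print(gap)
```

Storing each vendor's answers and the resulting gap list over time also gives you the vendor compliance records the SOC 2 section calls for.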

How CloudEagle.ai Helps Build a Continuous AI Compliance Process Across IT, Security & Legal

CloudEagle.ai is a leading Identity & Access Governance platform that helps enterprises stay compliant every day, not just during SOC 2, GDPR, or EU AI Act audits.

It replaces manual tracking and scattered tools with automated controls across all apps, users, and AI workflows, giving IT, Security, and Compliance teams one place to manage everything.

Here’s what CloudEagle.ai provides:

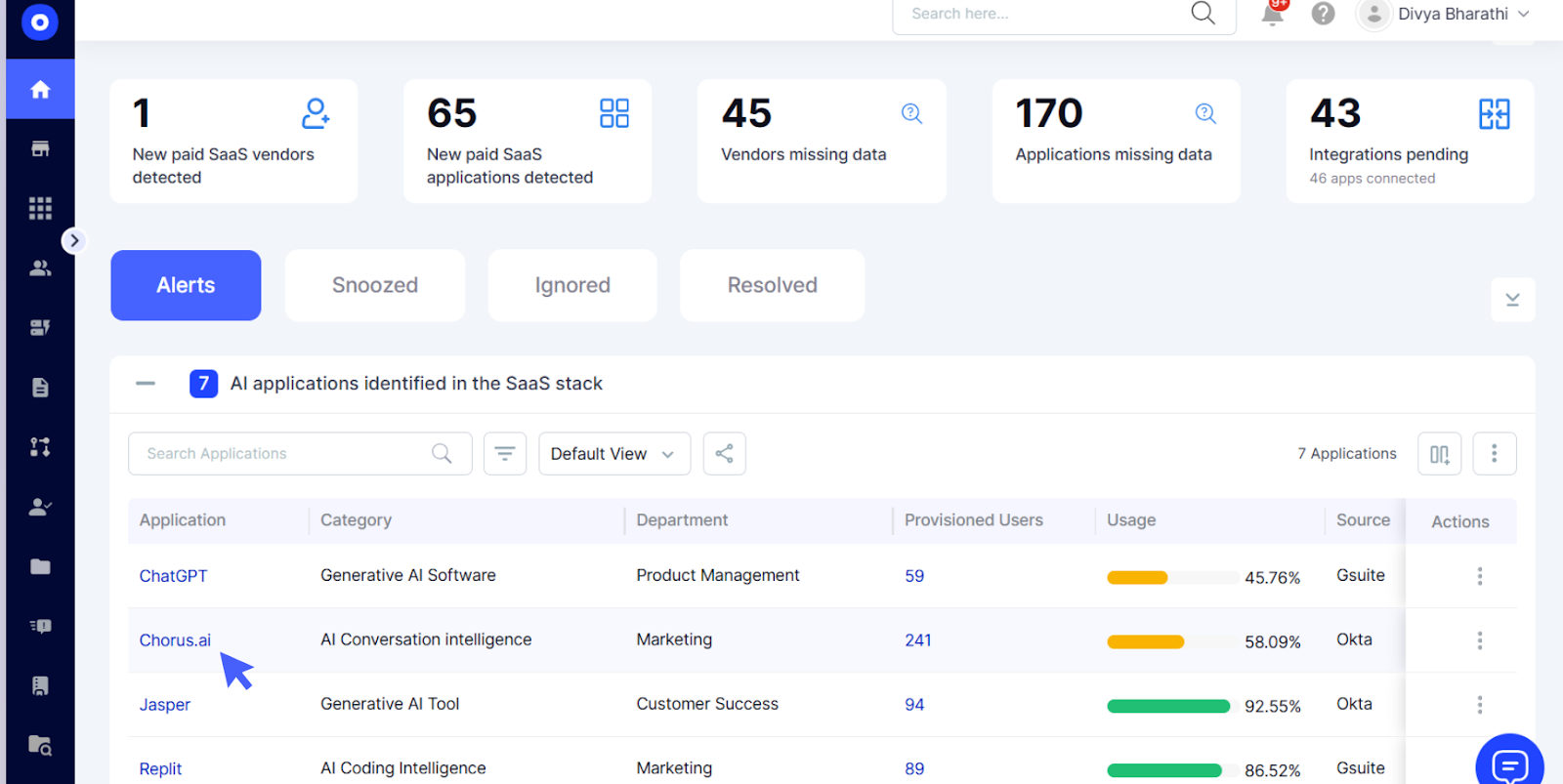

1. AI Usage Visibility & Monitoring

CloudEagle.ai makes it easy to see how AI is being used across your company. It shows who’s using AI, which tools they’re using, what data they’re sharing, and where things might be risky.

This includes hidden tools like shadow AI that IT usually can’t see. With CloudEagle.ai, security teams get a clear view of all AI activity. It spots unusual behavior, risky prompts, and unapproved tools, so you can fix problems early and stay compliant.
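Shadow AI discovery typically starts by comparing observed traffic or app sign-ins against the approved catalog. The sketch below assumes hypothetical proxy-log entries of the form (user, domain) and a hand-maintained list of known AI service domains; the domains are placeholders, matching is exact rather than subdomain-aware, and this is not a description of how CloudEagle.ai performs discovery.

```python
from collections import defaultdict

# Placeholder domains; a real list would come from a maintained AI-service catalog.
KNOWN_AI_DOMAINS = {"approved-ai.example", "notes-ai.example", "image-gen.example"}
APPROVED_AI_DOMAINS = {"approved-ai.example"}


def find_shadow_ai(proxy_log):
    """Map each unapproved AI domain to the sorted list of users who accessed it."""
    shadow = defaultdict(set)
    for user, domain in proxy_log:
        domain = domain.lower()
        # Known AI service that is not in the approved catalog -> shadow AI.
        if domain in KNOWN_AI_DOMAINS and domain not in APPROVED_AI_DOMAINS:
            shadow[domain].add(user)
    return {d: sorted(users) for d, users in shadow.items()}


if __name__ == "__main__":
    log = [
        ("alice", "approved-ai.example"),
        ("bob", "notes-ai.example"),
        ("carol", "Notes-AI.example"),
        ("dave", "intranet.example"),
    ]
    print(find_shadow_ai(log))  # {'notes-ai.example': ['bob', 'carol']}
```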

2. Automated Access Reviews

CloudEagle.ai automates the entire access review process, replacing weeks of manual effort with a few clicks. Instead of exporting spreadsheets and chasing managers for confirmation, reviewers see a clean, automated dashboard showing who should keep access, who should lose it, and where permissions exceed policy.

Discover how Dezerv automated its app access review process with CloudEagle.ai.

3. Automated Onboarding & Offboarding Compliance

Provisioning and deprovisioning are critical for AI and SaaS compliance. CloudEagle.ai automates user access from day one and ensures employees lose access immediately upon departure. Contractors and vendors receive time-bound access that expires automatically.

This eliminates orphaned accounts, reduces insider threat risk, and ensures every identity within the organization remains governed and compliant. It also supports SOC 2, ISO 27001, and GDPR requirements for rapid deprovisioning.

Learn how CloudEagle.ai helped Bloom & Wild streamline employee onboarding and offboarding.

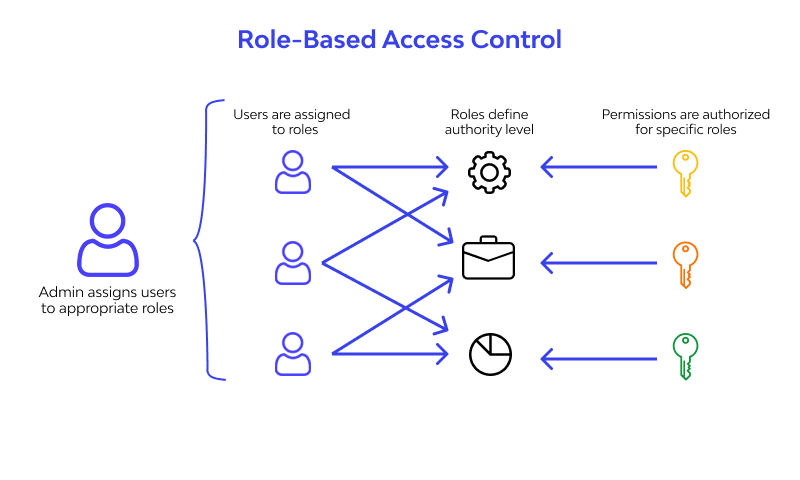

4. Role-Based Access Control (RBAC)

CloudEagle.ai makes sure every employee has access only to what they need, based on their role. When someone changes teams or gets a new job, their permissions update automatically. No tickets, no delays, no manual fixes.

This helps prevent people from keeping access they no longer need (known as privilege creep), cuts down on risk, and keeps access clean and simple across the company. With ongoing monitoring, it ensures everyone always has the right access—nothing more, nothing less.

Nidhi Jain, CEO and Founder of CloudEagle.ai, shared from her experience:

“I’ve seen it happen too many times—an employee changes roles, yet months later, they still have admin access to systems they no longer need. Manual access reviews are just too slow to catch these issues in time. By the time someone notices, privilege creep has already turned into a serious security risk.”
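Role-based access can be expressed as a mapping from roles to entitlements, with a user's access recomputed whenever their role changes. The sketch below uses hypothetical role and entitlement names; it returns both the grants and the revocations needed, so a role change removes old access instead of accumulating it, which is exactly the privilege creep described above.

```python
# Hypothetical role -> entitlement mapping for AI and SaaS tools.
ROLE_ENTITLEMENTS = {
    "support_agent": {"chat-assistant:user", "ticketing:user"},
    "data_analyst": {"chat-assistant:user", "bi-tool:user", "ml-platform:viewer"},
    "ml_engineer": {"ml-platform:admin", "code-copilot:user", "chat-assistant:user"},
}


def entitlements_for(roles):
    """Union of entitlements for all of a user's roles (unknown roles grant nothing)."""
    result = set()
    for role in roles:
        result |= ROLE_ENTITLEMENTS.get(role, set())
    return result


def reconcile(current, new_roles):
    """Return (to_grant, to_revoke) so access exactly matches the new roles."""
    target = entitlements_for(new_roles)
    return sorted(target - current), sorted(current - target)


if __name__ == "__main__":
    # A data analyst moves to the support team.
    current = entitlements_for(["data_analyst"])
    to_grant, to_revoke = reconcile(current, ["support_agent"])
    print(to_grant)   # ['ticketing:user']
    print(to_revoke)  # ['bi-tool:user', 'ml-platform:viewer']
```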

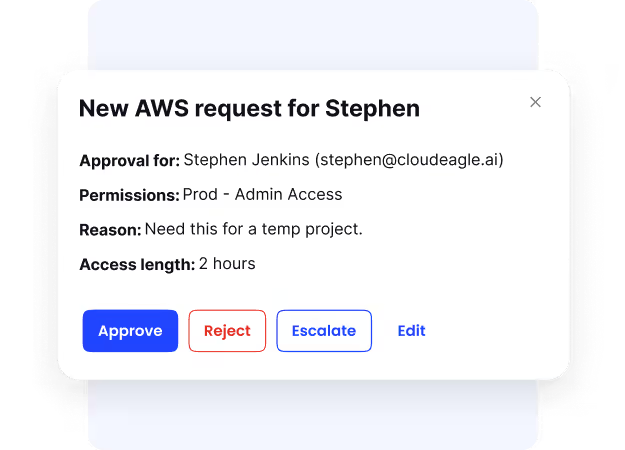

5. Just-In-Time (JIT) Access

JIT access helps organizations eliminate standing privileges, one of the biggest risks in modern compliance. CloudEagle.ai allows users to request temporary elevated access only when needed. Once the task is complete or the time window expires, access is revoked automatically.

This shrinks the attack surface considerably. Even if credentials are compromised or misused, attackers have no standing elevated access to exploit. It also supports audit readiness, since every JIT access request is logged, justified, and tied to a specific purpose.
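Just-in-time access comes down to grants that carry a justification and an expiry, plus a check that treats expired grants as absent. The sketch below is an in-memory illustration with hypothetical names and a default one-hour maximum window; a production system would also revoke the permission in the target application when the window closes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class JITGrant:
    user: str
    permission: str
    justification: str    # recorded for auditors
    expires_at: datetime


class JITBroker:
    def __init__(self, max_window=timedelta(hours=1)):
        self.max_window = max_window
        self.grants = []  # every grant is kept as audit evidence

    def request(self, user, permission, justification, now, window=None):
        if not justification.strip():
            raise ValueError("JIT requests must include a justification")
        # Requests may ask for less time than the maximum, never more.
        window = min(window or self.max_window, self.max_window)
        grant = JITGrant(user, permission, justification, now + window)
        self.grants.append(grant)
        return grant

    def is_allowed(self, user, permission, now):
        """True only while an unexpired grant exists; there is no standing privilege."""
        return any(
            g.user == user and g.permission == permission and now < g.expires_at
            for g in self.grants
        )


if __name__ == "__main__":
    t0 = datetime(2025, 6, 30, 9, 0, tzinfo=timezone.utc)
    broker = JITBroker()
    broker.request("alice", "ml-platform:admin", "Rotate model API keys", t0,
                   timedelta(minutes=30))
    print(broker.is_allowed("alice", "ml-platform:admin", t0 + timedelta(minutes=10)))  # True
    print(broker.is_allowed("alice", "ml-platform:admin", t0 + timedelta(minutes=45)))  # False
```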

6. Privileged Access Governance

CloudEagle.ai brings powerful access governance to high-risk accounts, including admin roles, power users, AI agents, and other privileged access types. It tracks every action taken, flags suspicious activity, and applies strict controls to prevent unauthorized or accidental misuse. This ensures sensitive access is always monitored, managed, and secure.

7. Automated Compliance Reporting

CloudEagle.ai creates all the compliance reports you need—automatically. From SOC 2 evidence and GDPR logs to EU AI Act documents and access review records, everything is generated without manual work.

This saves weeks of audit prep. Instead of digging through different tools and systems, you get clean, organized reports that are easy to export and share. For companies managing lots of SaaS tools, this makes compliance much easier and faster.

8. Self-Service App Catalog

CloudEagle.ai offers a centralized, compliant app catalog where employees can request AI or SaaS tools through automated workflows. IT, Security, and Legal approvals are built in, ensuring tools are reviewed, documented, and aligned with policy before access is granted.

Check out this discussion where Karl Haviland, Founder & CEO of Haviland Software, shares his insights on SaaS governance, AI, and cloud-first strategies—and how to scale tech innovation with confidence and clarity.

Conclusion

AI compliance is no longer optional; it's essential for any company using AI tools, LLMs, or agents. With SOC 2, GDPR, and the EU AI Act setting stricter expectations, organizations must have clear controls over how AI operates, what data it accesses, and who can use it.

Without that, the risk of data breaches, audit failures, and costly fines increases dramatically. A well-structured AI compliance checklist keeps you organized, ensuring vendor reviews, usage monitoring, and audit trails are always in place.

But with AI embedded across every app and team, manual efforts simply can’t scale.

CloudEagle.ai simplifies AI compliance with real-time visibility, automated access reviews, policy enforcement, and vendor risk tracking, all in one platform.

Want a secure and compliant AI environment? Schedule a demo with CloudEagle.ai today.

FAQs

1. Why is AI compliance important?

AI compliance ensures AI systems are safe, ethical, lawful, and secure. It prevents data misuse, fines, and operational risks.

2. What should be included in an AI compliance checklist?

Data governance, access controls, transparency requirements, prompt monitoring, vendor risk checks, documentation, and continuous monitoring.

3. What regulations and frameworks apply to AI systems in 2025?

Regulations such as GDPR, the EU AI Act, and HIPAA (for healthcare AI), frameworks and standards such as SOC 2, ISO/IEC 42001, and the NIST AI RMF, and industry-specific guidelines.

4. What tools can automate AI compliance tasks?

CloudEagle.ai, OneTrust, Securiti, Microsoft Purview, and similar platforms that automate access reviews, monitoring, and documentation.

5. How often should AI compliance be reviewed?

Quarterly for most controls; real-time continuous monitoring is recommended for AI usage, access, and risk scoring.
