How to Build an AI Governance Framework in 30 Days

AI adoption inside enterprises is accelerating faster than most organizations can control. Teams are experimenting with GenAI tools, embedding AI features into core SaaS platforms, and deploying models for productivity, analytics, and decision-making.

At the same time, risks are rising just as quickly, from data leakage and hallucinations to regulatory exposure and reputational damage.

This is why building a structured AI governance framework is no longer optional. But governance doesn’t need to slow innovation or take months to implement.

This guide walks you through a practical, 30-day roadmap to launch AI governance in your organization, covering visibility, risk control, compliance, and responsible AI adoption, without creating unnecessary bureaucracy.

By the end, you’ll have a working AI governance framework your teams can actually use.

TL;DR

- AI governance helps organizations manage AI risks, compliance, and accountability

- You can build a functional AI governance framework in 30 days using a structured weekly plan

- Start by auditing AI usage, classifying risks, and defining ownership

- Create policies, controls, and approval workflows from week two onwards

- End the 30 days with monitoring systems, documentation, and a clear change-management plan

1. Why AI Governance Matters Today

AI is no longer confined to centralized data science teams. It now shows up everywhere — inside SaaS tools, browser extensions, copilots, APIs, and employee-built workflows. This decentralized adoption is exactly what makes AI powerful, and dangerous.

Without a defined AI governance process, organizations struggle to answer basic questions:

- Which AI tools are employees using?

- What data is being shared with models?

- Who owns risk decisions?

- Can we explain or audit AI-driven outcomes?

At the same time, regulatory and internal pressure is mounting. Frameworks like the EU AI Act, internal enterprise AI policies, and industry compliance expectations are pushing organizations to prove control, transparency, and accountability.

An effective AI governance framework helps enterprises innovate with confidence — not fear.

What AI Governance Actually Includes

AI governance is not just an ethics document or a compliance checkbox. It’s an operating model that defines how AI is approved, used, monitored, and retired.

A practical AI governance framework typically includes:

- Clear AI policies and acceptable-use guidelines

- Risk classification for AI use cases and vendors

- Defined roles and accountability (owners, reviewers, risk approvers)

- Controls for data handling, access, and model behavior

- Monitoring, logging, and audit readiness

When done right, governance becomes an enabler, not a blocker.

Signs Your Organization Needs AI Governance

Many enterprises already have AI governance problems; they just don’t call them that.

Common red flags include:

- Employees using AI tools without IT or security approval (shadow AI)

- No consistency in prompts, models, or usage guidelines

- Vendor-provided AI features with unclear data retention or training policies

- No audit trail explaining how AI outputs influenced decisions

If any of these sound familiar, governance is overdue.

2. The 30-Day Plan: Build Your AI Governance Framework Fast

The fastest way to build AI governance is to approach it in weekly phases, each with a clear goal and outcome. This keeps momentum high and avoids overengineering early.

Week 1 - Discover & Assess

Governance starts with visibility. You can’t govern what you can’t see. In the first week, focus on discovering how AI is actually being used across your organization.

Key actions:

- Inventory all AI tools, copilots, browser extensions, APIs, and embedded AI features

- Identify AI use cases across departments (IT, marketing, sales, HR, finance)

- Classify each use case by risk level: low, medium, or high

- Map data flows, including sensitive data exposure and third-party vendors

This step often reveals far more AI usage than expected, especially tools adopted outside formal procurement.

The output of week one should be a clear AI usage map and an initial AI risk baseline.
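The week-one inventory and risk baseline do not need special tooling to get started. The sketch below shows one hypothetical way to record use cases and assign the low, medium, or high tiers. The field names (`handles_sensitive_data`, `vendor_trains_on_data`, `approved`) and the classification rules are illustrative assumptions, not a standard; adjust them to your own risk criteria.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class AIUseCase:
    tool: str
    department: str
    handles_sensitive_data: bool   # e.g. customer PII, financials, source code
    vendor_trains_on_data: bool    # vendor may retain or train on inputs
    approved: bool                 # went through IT/security review


def classify_risk(use_case: AIUseCase) -> str:
    """Assign a low/medium/high tier using simple, illustrative rules."""
    if use_case.handles_sensitive_data and (
        use_case.vendor_trains_on_data or not use_case.approved
    ):
        return "high"
    if use_case.handles_sensitive_data or not use_case.approved:
        return "medium"
    return "low"


# Hypothetical inventory entries gathered during discovery.
inventory = [
    AIUseCase("ChatGPT", "marketing", False, False, True),
    AIUseCase("Browser summarizer extension", "sales", False, False, False),
    AIUseCase("Resume screening copilot", "hr", True, True, False),
]

# The risk baseline: how many use cases fall into each tier.
baseline = Counter(classify_risk(uc) for uc in inventory)
for uc in inventory:
    print(f"{uc.department:<10} {uc.tool:<30} {classify_risk(uc)}")
```

Keeping the rules in one small function makes it easy for the governance group to review and change them as the inventory grows.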

Week 2 - Define Roles, Policies & Guardrails

Once visibility is established, governance shifts from discovery to decision-making. Start by assigning ownership. AI governance cannot live in a vacuum.

Actions for week two:

- Establish an AI governance committee or working group

- Assign owners for AI tools, policies, and risk approvals

- Draft core AI governance policies, including:

  - Acceptable AI use

  - Data handling and privacy

  - Human oversight and accountability

  - Transparency and logging requirements

- Define approval workflows for introducing new AI tools or features

At this stage, policies should be practical and enforceable, not theoretical. The goal is to guide behavior, not overwhelm teams.
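An approval workflow can be as simple as a table that maps each risk tier to the roles that must sign off. The following is a minimal sketch under that assumption; the role names (`tool_owner`, `security`, `governance_committee`) and the tier-to-approver mapping are hypothetical and should mirror whatever ownership you assigned this week.

```python
# Hypothetical mapping from risk tier to the roles that must sign off.
REQUIRED_APPROVERS = {
    "low": ["tool_owner"],
    "medium": ["tool_owner", "security"],
    "high": ["tool_owner", "security", "governance_committee"],
}


def pending_approvers(risk_level: str, approvals: set[str]) -> list[str]:
    """Return the roles that still need to approve, in routing order."""
    required = REQUIRED_APPROVERS[risk_level]
    return [role for role in required if role not in approvals]


def is_approved(risk_level: str, approvals: set[str]) -> bool:
    """A request is approved once no required role is outstanding."""
    return not pending_approvers(risk_level, approvals)


# A high-risk request that only the tool owner has signed so far.
print(pending_approvers("high", {"tool_owner"}))
```

Because low-risk requests need only one sign-off, most everyday tools move quickly while high-risk ones still reach the committee.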

Week 3 - Implement Controls & Workflows

Policies without controls don’t work. Week three is where governance becomes operational. This is where organizations translate intent into systems and workflows.

Key focus areas:

- Integrate AI risk scoring into procurement and vendor review processes

- Establish validation requirements for higher-risk models and use cases

- Define access controls and permission structures for AI tools

- Create prompt guidelines and review checkpoints for sensitive workflows

This step ensures AI decisions are not only approved, but repeatable, explainable, and enforceable.
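To make risk scoring repeatable in procurement, one option is a weighted score with fixed thresholds. The sketch below assumes four factors rated 0 to 5; the factor names, weights, and thresholds are illustrative assumptions rather than an established scoring model.

```python
# Illustrative weights; each factor is rated 0 (no concern) to 5 (severe).
WEIGHTS = {
    "data_sensitivity": 4,
    "integration_depth": 2,
    "access_permissions": 2,
    "compliance_gaps": 2,
}
MAX_SCORE = 5 * sum(WEIGHTS.values())


def risk_score(ratings: dict[str, int]) -> int:
    """Return a 0-100 score; a missing factor is treated as worst case."""
    raw = sum(weight * ratings.get(factor, 5) for factor, weight in WEIGHTS.items())
    return round(100 * raw / MAX_SCORE)


def review_path(score: int) -> str:
    """Map a score to a procurement outcome (thresholds are assumptions)."""
    if score >= 70:
        return "block pending validation"
    if score >= 40:
        return "security review"
    return "standard approval"


# Hypothetical ratings for one vendor under review.
vendor = {"data_sensitivity": 5, "integration_depth": 3,
          "access_permissions": 4, "compliance_gaps": 2}
score = risk_score(vendor)
print(score, review_path(score))
```

Treating a missing rating as the worst case means an incomplete vendor questionnaire cannot lower the score by omission.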

Week 4 - Monitoring, Documentation & Rollout

The final week is about sustainability. Governance should continue working after the initial rollout.

Actions to complete the framework:

- Enable AI usage monitoring and drift detection where applicable

- Set up centralized audit logs for AI decisions and access

- Document the full AI governance framework in a single playbook

- Train employees and run change-management communication

- Establish a quarterly review cadence for policies, risks, and controls

By the end of week four, governance is no longer a project; it’s a process.
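Two of the week-four actions, centralized audit logging and a quarterly review cadence, are easy to prototype. The sketch below writes audit events as JSON lines and checks whether a policy review is overdue. The event fields and the 90-day default are assumptions chosen for illustration; a real deployment would send these records to your existing logging platform.

```python
import json
from datetime import date, datetime, timedelta, timezone


def audit_record(actor: str, tool: str, action: str, decision: str) -> str:
    """Serialize one audit event as a JSON line with a UTC timestamp."""
    event = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "tool": tool,
        "action": action,
        "decision": decision,
    }
    return json.dumps(event, sort_keys=True)


def review_overdue(last_review: date, today: date, cadence_days: int = 90) -> bool:
    """True when more than one cadence period has passed since the last review."""
    return today - last_review > timedelta(days=cadence_days)


line = audit_record("j.doe", "ChatGPT", "access_request", "approved")
print(line)
print(review_overdue(date(2025, 1, 1), date(2025, 5, 1)))
```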

3. How CloudEagle.ai Helps With AI Governance

As AI adoption surges across SaaS ecosystems, CloudEagle.ai provides a robust, automated framework to help enterprises govern access, control risk, ensure compliance, and reduce SaaS waste.

1. Centralized Discovery of AI & SaaS Tools

- Real-time AI tool detection: Detects apps users log into (e.g., ChatGPT, Midjourney, DeepSeek) even if they’re not approved or connected to the SSO/IDP.

- Cross-verification with finance systems: Matches login data with expense records to flag Shadow AI usage and unauthorized spend.

- Role-based usage tracking: Identifies which departments and users are adopting unapproved AI tools and the frequency of access.

Business Value: Exposes Shadow IT and AI usage across departments, especially in Sales, Marketing, and Engineering, where governance gaps are common.
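The idea of cross-checking login activity against expense records can be illustrated with a few lines of code. This is a generic sketch of the technique, not CloudEagle.ai's implementation; the data shapes, the `APPROVED_TOOLS` list, and the sample records are all hypothetical.

```python
APPROVED_TOOLS = {"chatgpt"}  # hypothetical sanctioned list

# Hypothetical exports: (user, tool) login events and (user, vendor, amount) expenses.
logins = [("ana", "ChatGPT"), ("ben", "Midjourney"), ("cy", "DeepSeek")]
expenses = [("ben", "Midjourney", 30.0), ("dee", "Jasper", 49.0)]


def shadow_ai_report(logins, expenses, approved):
    """Collect users and spend for every tool that is not on the approved list."""
    report = {}
    for user, tool in logins:
        key = tool.lower()
        if key not in approved:
            report.setdefault(key, {"users": set(), "spend": 0.0})["users"].add(user)
    for user, vendor, amount in expenses:
        key = vendor.lower()
        if key not in approved:
            entry = report.setdefault(key, {"users": set(), "spend": 0.0})
            entry["users"].add(user)
            entry["spend"] += amount
    return report


report = shadow_ai_report(logins, expenses, APPROVED_TOOLS)
for tool, entry in sorted(report.items()):
    print(tool, sorted(entry["users"]), entry["spend"])
```

Combining both sources catches tools that appear in only one of them, such as a subscription that was expensed but never seen in login data.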

2. AI Risk Scoring & Vendor Intelligence

- Automated risk scoring for AI vendors based on data sensitivity, integration level, access permissions, and compliance readiness.

- Dynamic risk levels tied to real-time usage patterns and org-wide policy rules.

- Integration with CLMs (e.g., Ironclad, Coupa) to assess contract risk and policy alignment for AI vendors.

Business Value: Empowers IT and security teams to quantify AI risk, streamline approvals, and prepare for audits with data-backed risk metrics.

3. Policy Management and Enforcement

- AI-specific policies: Create and enforce usage policies for generative AI tools across the org.

- Version-controlled governance: Track changes to AI usage policies over time with audit-ready logs.

- User-based alerts and enforcement: Notifies users via Slack or email, or blocks access to tools that violate policies.

Business Value: Keeps governance policies up to date and ensures consistent enforcement at scale.

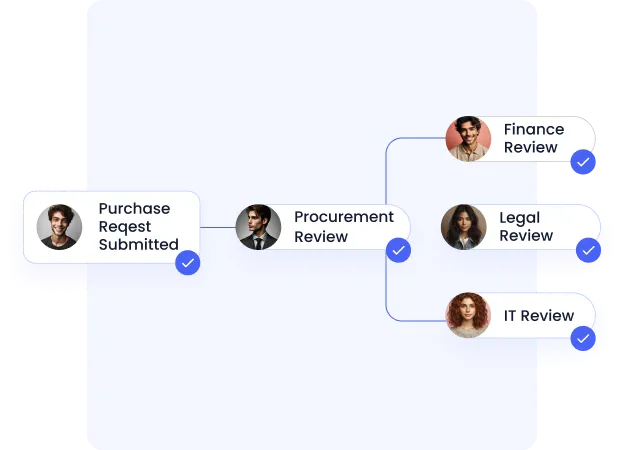

4. Approval Workflows for AI Access & Procurement

- AI procurement guardrails: Ensure new AI tools go through compliance and risk checks before purchase.

- Access request workflows: Users request access through an employee-facing app catalog with built-in approval routing based on role or risk level.

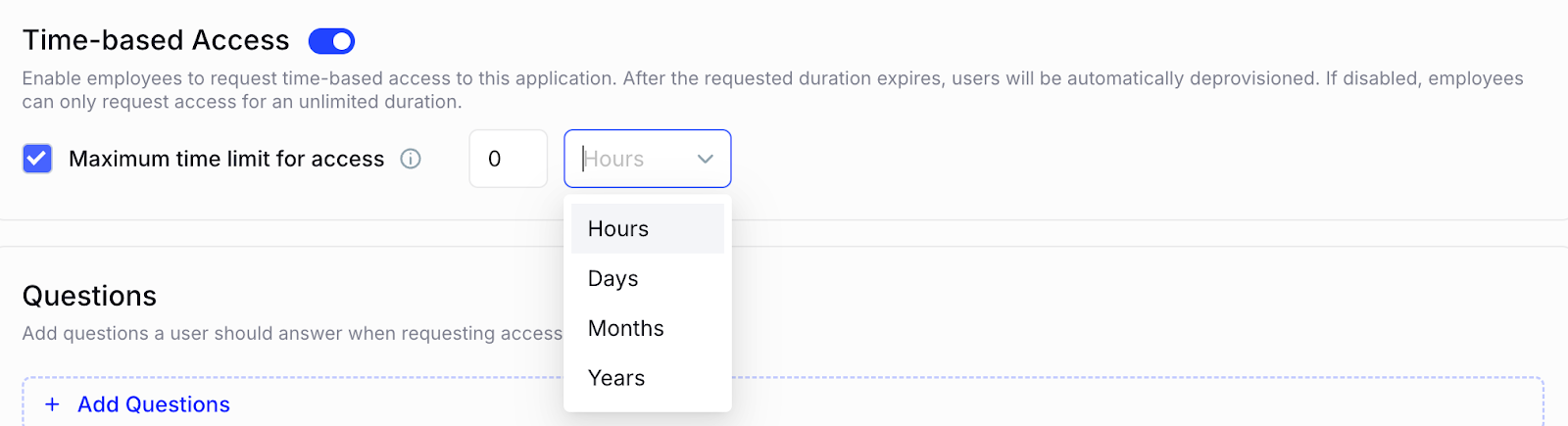

- Time-based access controls: Temporary access that auto-expires for contractors, interns, or project-based users.

Business Value: Centralizes and automates the intake-to-access lifecycle for AI tools, reducing risk and manual effort.
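Time-based access boils down to storing an expiry with each grant and revoking anything past it. The following is a minimal sketch of that pattern, not a description of how any particular platform implements it; the `AccessGrant` type and helper names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class AccessGrant:
    user: str
    tool: str
    expires_at: datetime

    def is_active(self, now: datetime) -> bool:
        return now < self.expires_at


def grant_temporary(user: str, tool: str, days: int, now: datetime) -> AccessGrant:
    """Create a grant that expires `days` days after `now`."""
    return AccessGrant(user, tool, now + timedelta(days=days))


def expired(grants: list[AccessGrant], now: datetime) -> list[AccessGrant]:
    """Grants a scheduled job would revoke at time `now`."""
    return [g for g in grants if not g.is_active(now)]


start = datetime(2025, 6, 1, tzinfo=timezone.utc)
grants = [
    grant_temporary("contractor", "ChatGPT", days=30, now=start),
    grant_temporary("intern", "Midjourney", days=1, now=start),
]
# One week later, only the one-day grant has lapsed.
to_revoke = expired(grants, now=start + timedelta(days=7))
print([g.user for g in to_revoke])
```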

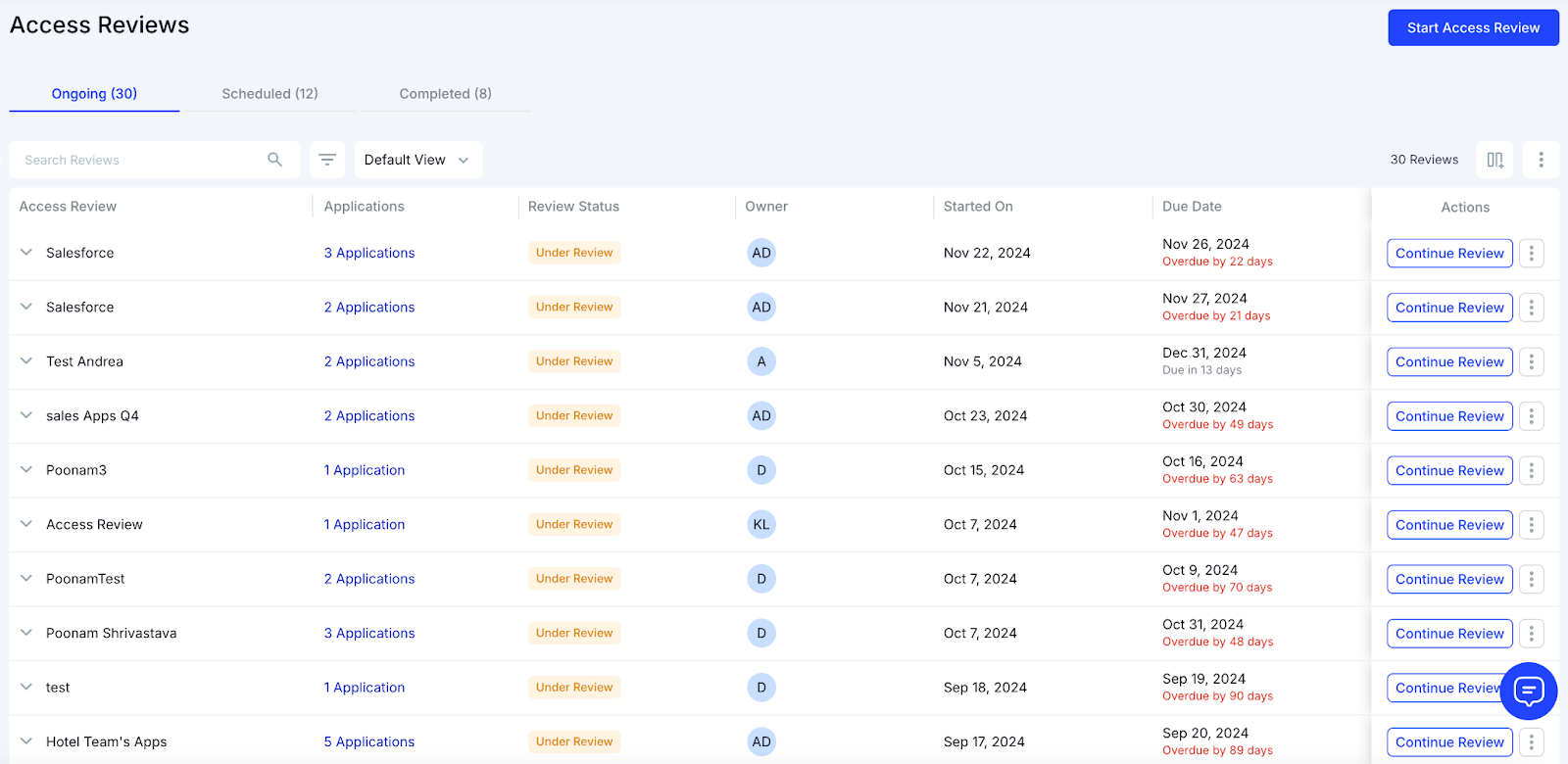

5. Continuous Access Monitoring & Risk Alerts

- Continuous access reviews using AI-driven workflows, not just quarterly audits.

- Privilege creep detection: Flags users with excessive or lingering access rights and recommends auto-revocation.

- Automated deprovisioning: Identifies employees who haven’t used a tool and reclaims access/licenses without manual IT tickets.

Business Value: Prevents overprivileged access, supports compliance with SOC 2 and GDPR, and minimizes insider threat risks.

6. Shadow AI & SaaS Reporting

- Executive dashboards to track Shadow AI growth, risk levels by department, and tool-specific usage.

- Scorecards showing percentage of sanctioned vs. unsanctioned apps, high-risk vendors, and policy violations.

- Pre-built audit exports for compliance teams preparing for SOC 2, ISO, or internal reviews.

Business Value: Delivers real-time compliance reporting and dramatically reduces audit prep time.

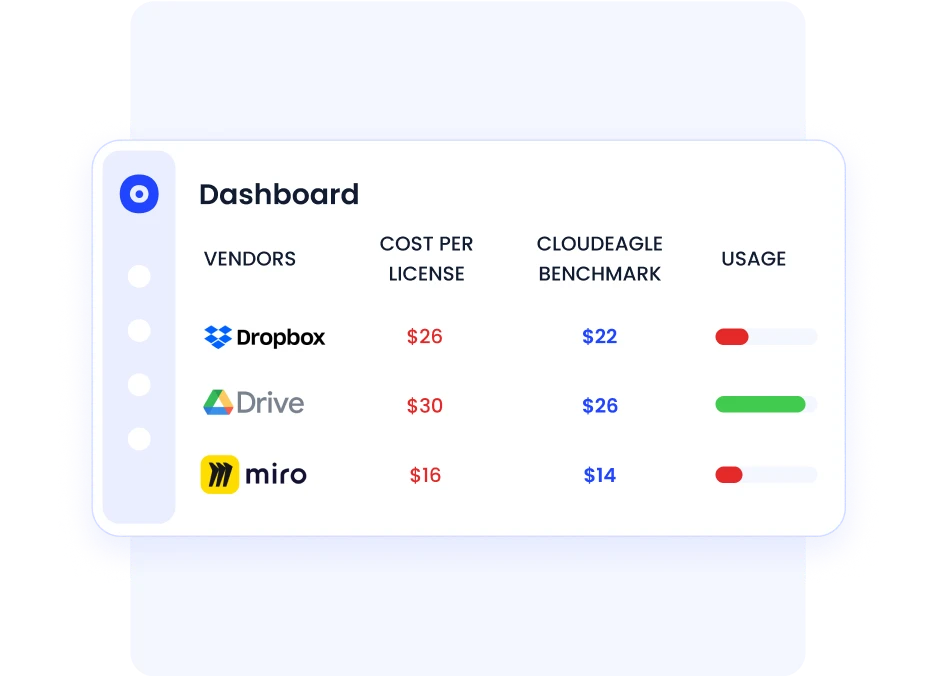

7. AI Spend & License Optimization

- License harvesting: Automatically reclaims unused AI tool licenses (e.g., Jasper, Grammarly) and reallocates them.

- Feature-level usage tracking: Tracks which AI features are used to right-size SKUs during renewal.

- Duplicate tool detection: Surfaces overlapping AI tools and flags redundancy across teams.

Business Value: Saves 10–30% of AI/SaaS spend by eliminating waste and optimizing contracts.
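License harvesting generally starts with a simple question: which seats have seen no activity for some period? The sketch below illustrates that check under assumed inputs. The seat records, the 60-day idle window, and the costs are hypothetical, and this is not a description of CloudEagle.ai's internal logic.

```python
from datetime import date, timedelta

# Hypothetical seat records: (user, tool, last_active, monthly_cost).
seats = [
    ("ana", "Jasper", date(2025, 5, 28), 49.0),
    ("ben", "Jasper", date(2025, 1, 10), 49.0),
    ("cy", "Grammarly", date(2025, 2, 1), 12.0),
]


def reclaimable(seats, today: date, idle_days: int = 60):
    """Seats with no activity in the last `idle_days` days."""
    cutoff = today - timedelta(days=idle_days)
    return [s for s in seats if s[2] < cutoff]


idle = reclaimable(seats, today=date(2025, 6, 1))
monthly_savings = sum(s[3] for s in idle)
print([s[0] for s in idle], monthly_savings)
```

In practice the idle window should reflect how each tool is used; a seat for a quarterly reporting tool may look idle without being wasted.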

8. Deep Integrations & Zero-Touch Automation

- 500+ integrations with SSO, HRIS, finance, and SaaS apps to automate data syncing and governance.

- Works outside the IDP: Can detect and govern AI tools that are not connected to Okta, SailPoint, or other IAM systems.

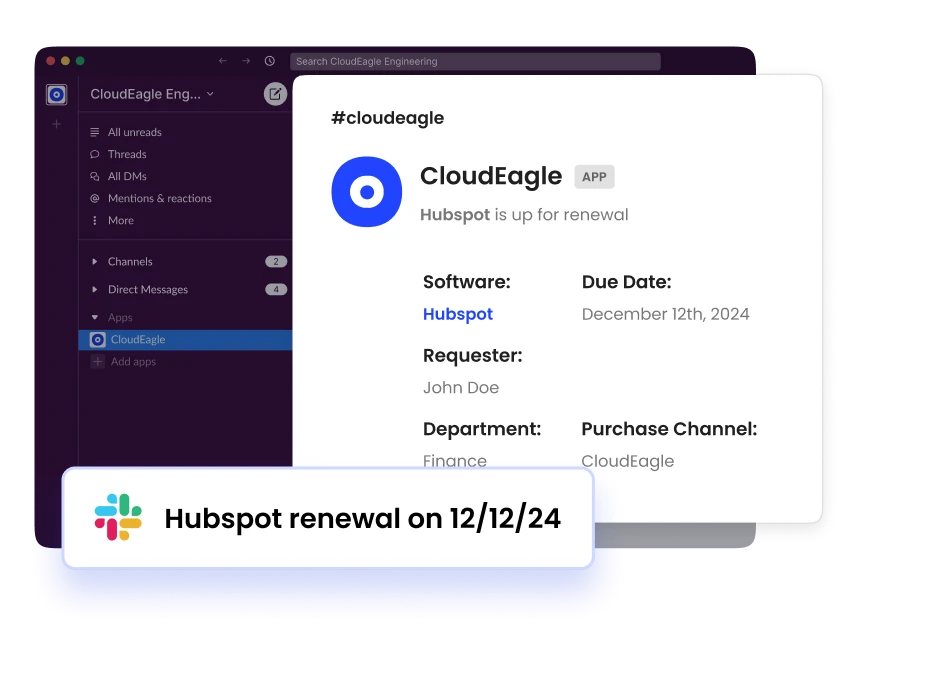

- Slack-native workflows: Teams can request, approve, or revoke access directly within Slack, with no need for tickets or email chains.

Business Value: Ensures scalable governance across all SaaS and AI tools, not just those managed through traditional identity tools.

4. Conclusion

Building an AI governance framework does not require months of planning or heavy bureaucracy. In just 30 days, organizations can move from uncontrolled AI adoption to a structured, enforceable governance model.

By following a phased approach — discover, define, implement, and monitor — teams reduce AI-related risks, meet compliance expectations, and create a foundation for responsible AI at scale.

The organizations that act now will be best positioned to innovate safely as AI continues to reshape how work gets done.

The next step is simple: start governing your AI stack with a framework — and the right platform — built for modern enterprises.

Frequently Asked Questions

1. What is an AI governance framework?

An AI governance framework is a structured approach that defines how AI systems are approved, used, monitored, and audited within an organization to manage risk, compliance, and accountability.

2. Why is AI governance important for enterprises?

AI governance helps enterprises reduce risks such as data leakage, bias, hallucinations, and regulatory exposure while enabling responsible and scalable AI adoption.

3. How long does it take to build an AI governance framework?

A functional AI governance framework can be built in as little as 30 days using a phased approach focused on discovery, policy creation, controls, and monitoring.

4. What should be included in an AI governance policy?

An AI governance policy should include acceptable use guidelines, data handling rules, human oversight requirements, transparency expectations, and audit logging standards.

5. How do organizations monitor AI systems for risks?

Organizations monitor AI risks through usage tracking, access controls, audit logs, model performance reviews, and periodic governance reviews aligned with compliance requirements.
