
AI didn’t enter enterprises through strategy decks or procurement reviews. It entered through employees trying to move faster.

Someone used ChatGPT to summarize a contract. Another team experimented with an AI tool to analyze customer data. A third enabled AI features inside an existing SaaS app, without looping in IT or security.

This is how Shadow AI grows. Quietly. Rapidly. Outside governance.

In this blog, you’ll learn what Shadow AI really is, why it’s becoming a serious enterprise risk, why traditional tools fail to detect it, what a Shadow AI discovery platform actually does, and how CloudEagle.ai helps organizations regain AI usage visibility and control.

TL;DR

- Shadow AI is spreading through enterprises without IT or security visibility.

- Unapproved AI usage increases data leakage, compliance, and governance risk.

- Traditional security and SSO tools fail to detect embedded and non-SSO AI tools.

- A Shadow AI discovery platform provides visibility, usage context, and risk classification.

- CloudEagle.ai helps organizations discover, assess, and govern Shadow AI at scale.

1. What Is Shadow AI?

Shadow AI refers to the use of AI tools, models, or AI-powered features without formal approval, visibility, or governance from IT, security, or compliance teams. It includes both standalone AI tools and AI embedded inside existing SaaS applications.

Unlike traditional Shadow IT, Shadow AI introduces a new risk dimension. AI tools don’t just store data; they process, transform, and sometimes retain sensitive information through prompts, training, or logs.

According to a Microsoft survey, over 75% of employees now use AI tools at work, and more than half do so without informing IT. That gap between adoption and oversight is where Shadow AI thrives.

2. Why Is Shadow AI a Growing Enterprise Risk?

Shadow AI doesn’t look risky at first. Most tools are easy to access, inexpensive, and genuinely helpful. But at scale, unmanaged AI usage creates serious security, compliance, and governance blind spots.

A. Unauthorized AI adoption

Employees adopt AI tools independently because they remove friction. No tickets. No approvals. No waiting. That convenience is exactly what makes Shadow AI so widespread.

Gartner estimates that by 2026, nearly 80% of enterprises will experience uncontrolled AI usage, largely driven by employee-led adoption.

Common signs of unauthorized AI adoption include:

- Teams experimenting with AI tools without IT approval

- AI features enabled inside SaaS apps by default

- Business units adopting tools on free or trial plans

Unauthorized adoption doesn’t stop innovation.

It turns innovation into unmanaged risk.

B. Data leakage and privacy risks

AI prompts often contain sensitive data: internal documents, customer information, financial figures, or source code.

Once submitted, that data may be logged, stored, or processed outside corporate controls.

A Cyberhaven study found that nearly 30% of AI prompts contain sensitive or regulated data.

Shadow AI quietly expands the data exposure surface.

C. Compliance blind spots

Auditors are no longer asking whether organizations use AI. They’re asking how AI usage is governed, monitored, and controlled, especially in regulated industries.

Shadow AI creates gaps that are difficult to explain during audits. Tools may be undocumented, access unmanaged, and usage evidence unavailable.

Audit advisory reports show that over 40% of recent compliance findings involve undocumented tools or unmanaged access, many of which now include AI-powered applications.

You can’t govern what you can’t see.

3. Why Do Traditional Tools Fail to Detect Shadow AI?

Most enterprises already have security tools, identity providers (IdPs), and SaaS management platforms. Yet Shadow AI continues to grow undetected. The reason is simple: these tools weren’t built for AI usage visibility.

A. Embedded AI inside SaaS apps

Many SaaS platforms now ship with built-in AI capabilities: summarization, copilots, assistants, and predictive features. These capabilities live inside the application, not as separate tools.

Traditional discovery tools often detect the SaaS app but miss:

- Whether AI features are enabled

- Which users are interacting with them

- What data flows through AI-powered workflows

As a result, AI usage hides in plain sight.

B. AI tools outside SSO

A large percentage of AI tools don’t integrate with corporate SSO. Employees sign up using personal email addresses, browser sessions, or free tiers.

SSO-based visibility stops working here.

Research shows 30–40% of SaaS and AI tools operate outside SSO visibility, making identity-based discovery incomplete.
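To make that gap concrete, here is a minimal Python sketch of how one might measure it. It assumes two hypothetical inputs: the set of apps seen in SSO logs, and the set of apps surfaced by any other signal (browser telemetry, expense data). The function name and sample app names are illustrative only.

```python
def sso_blind_spot(sso_apps: set[str], observed_apps: set[str]) -> tuple[set[str], float]:
    """Return apps seen via non-SSO signals but absent from SSO, plus their share of all known apps."""
    all_apps = sso_apps | observed_apps      # everything known from any source
    outside_sso = observed_apps - sso_apps   # seen elsewhere, invisible to SSO
    share = len(outside_sso) / len(all_apps) if all_apps else 0.0
    return outside_sso, share


# Hypothetical sample data for illustration only.
sso = {"salesforce", "notion", "slack"}
observed = {"salesforce", "notion", "chatgpt", "perplexity", "otter"}
missing, share = sso_blind_spot(sso, observed)
print(sorted(missing), f"{share:.0%}")  # ['chatgpt', 'otter', 'perplexity'] 50%
```

An inventory built only from identity data is, by construction, blind to everything in `outside_sso`, which is why identity-based discovery on its own stays incomplete.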

C. No usage-level visibility

Even when tools are detected, most platforms stop at inventory. They show that a tool exists, but not how it’s used.

What’s often missing:

- Usage frequency and intensity

- Which teams depend on the tool

- Access levels and privileges

- Whether usage is experimental or operational

Knowing an AI tool exists isn’t enough.

You need to know who is using it, how often, and for what purpose.
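One simple way to separate experimental from operational usage is to look at how many distinct days and distinct people touch a tool over a fixed window. The sketch below assumes a hypothetical feed of `(user, date)` events per tool and uses made-up thresholds; real cut-offs would need tuning for each organization.

```python
from datetime import date

# Hypothetical thresholds for a 30-day observation window; tune per organization.
OPERATIONAL_MIN_ACTIVE_DAYS = 10   # distinct days with any activity
OPERATIONAL_MIN_USERS = 3          # distinct people using the tool


def classify_usage(events: list[tuple[str, date]]) -> str:
    """Label one tool's usage as 'operational' or 'experimental'.

    events: (user_email, activity_date) pairs observed for the tool in the window.
    """
    active_days = {day for _, day in events}
    users = {user for user, _ in events}
    if len(active_days) >= OPERATIONAL_MIN_ACTIVE_DAYS and len(users) >= OPERATIONAL_MIN_USERS:
        return "operational"
    return "experimental"


# A tool one person opened once vs. a tool a small team uses most days.
trial = [("ana@example.com", date(2025, 1, 5))]
daily = [("ana@example.com", date(2025, 1, d)) for d in range(1, 13)]
daily += [("ben@example.com", date(2025, 1, 2)), ("chen@example.com", date(2025, 1, 3))]
print(classify_usage(trial), classify_usage(daily))  # experimental operational
```

Requiring both breadth (users) and consistency (active days) keeps a single enthusiastic user from making a tool look business-critical.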

4. What Does a Shadow AI Discovery Platform Do?

A Shadow AI discovery platform is designed specifically to surface, classify, and govern AI usage across the enterprise, regardless of how or where tools are accessed.

It goes beyond simple discovery and enables continuous AI usage visibility.

A. AI tool discovery

The platform identifies AI tools using multiple signals, including browser activity, login patterns, expense data, and SaaS environments.

This allows it to:

- Detect AI tools that never touch SSO

- Identify AI features embedded inside SaaS apps

- Surface tools adopted through free or trial plans

Discovery is the first step toward control.
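As a rough illustration of multi-signal discovery, the sketch below matches browser domains and expense-report line items against a small catalog of known AI tools. The catalog, function name, and sample inputs are hypothetical; login-pattern and SaaS-configuration signals are left out to keep it short.

```python
from collections import defaultdict

# Hypothetical catalog: browser domains and expense-descriptor keywords mapped to a tool.
AI_CATALOG = {
    "chatgpt.com": "ChatGPT",
    "openai": "ChatGPT",
    "claude.ai": "Claude",
    "anthropic": "Claude",
    "gemini.google.com": "Gemini",
}


def discover_ai_tools(browser_domains: list[str], expense_lines: list[str]) -> dict[str, set[str]]:
    """Return {tool: {signal types that surfaced it}}."""
    found: dict[str, set[str]] = defaultdict(set)
    for domain in browser_domains:
        tool = AI_CATALOG.get(domain.lower())  # exact domain match
        if tool:
            found[tool].add("browser")
    for line in expense_lines:
        text = line.lower()
        for keyword, tool in AI_CATALOG.items():  # loose keyword match on card descriptors
            if keyword in text:
                found[tool].add("expense")
    return dict(found)


print(discover_ai_tools(
    ["chatgpt.com", "github.com", "claude.ai"],
    ["OPENAI *CHATGPT SUBSCR", "Uber trip"],
))
```

Recording which signal surfaced each tool matters: a tool seen only in expenses suggests a paid plan nobody is monitoring, while one seen only in the browser often points to free-tier use.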

B. Usage and access visibility

Once tools are discovered, the platform shows how AI is actually used across the organization.

It maps:

- Users and departments

- Access levels and privileges

- Frequency and consistency of use

This helps teams separate casual experimentation from business-critical dependency.

Visibility turns unknown risk into manageable governance.
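The mapping described above boils down to grouping usage events by tool. This minimal sketch assumes hypothetical `(tool, user, role, day)` events and a `{user: department}` lookup such as an HR export would provide; the `ToolUsage` structure is illustrative, not a product schema.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import date


@dataclass
class ToolUsage:
    users: set[str] = field(default_factory=set)
    departments: set[str] = field(default_factory=set)
    admins: set[str] = field(default_factory=set)        # users holding elevated access
    active_days: set[date] = field(default_factory=set)


def map_usage(events, user_department):
    """Aggregate (tool, user, role, day) events into one ToolUsage per tool.

    user_department: {user: department}, e.g. from a hypothetical HR export.
    """
    summary: dict[str, ToolUsage] = defaultdict(ToolUsage)
    for tool, user, role, day in events:
        entry = summary[tool]
        entry.users.add(user)
        entry.departments.add(user_department.get(user, "unknown"))
        if role == "admin":
            entry.admins.add(user)
        entry.active_days.add(day)
    return dict(summary)


events = [
    ("ChatGPT", "ana@example.com", "member", date(2025, 1, 6)),
    ("ChatGPT", "ben@example.com", "admin", date(2025, 1, 7)),
    ("Otter", "ana@example.com", "member", date(2025, 1, 7)),
]
usage = map_usage(events, {"ana@example.com": "Sales", "ben@example.com": "Legal"})
print(sorted(usage["ChatGPT"].departments), len(usage["ChatGPT"].active_days))  # ['Legal', 'Sales'] 2
```

Users without an HR record land in an `"unknown"` department rather than being dropped, so gaps in attribution stay visible.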

C. Risk classification

Not all AI tools carry the same risk. A discovery platform classifies tools based on data sensitivity, access scope, and business context.

High-risk tools can be flagged for review, restriction, or replacement, while low-risk usage can be monitored safely.

Risk-based governance scales better than blanket bans.
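A risk classification like this can start as a simple additive score. The sketch below takes the three factors named above (data sensitivity, access scope, business context) but the ordinal scales and cut-offs are hypothetical; a production system might use weights or a lookup matrix instead.

```python
# Hypothetical ordinal scales and cut-offs; the factors come from the text, the numbers do not.
SENSITIVITY = {"public": 0, "internal": 1, "confidential": 2, "regulated": 3}
ACCESS_SCOPE = {"read": 0, "write": 1, "admin": 2}


def risk_tier(data_sensitivity: str, access_scope: str, business_critical: bool) -> str:
    """Combine data sensitivity, access scope, and business context into a coarse tier."""
    score = SENSITIVITY[data_sensitivity] + ACCESS_SCOPE[access_scope]
    if business_critical:
        score += 1  # wider impact if the tool is already embedded in core workflows
    if score >= 4:
        return "high"    # candidate for review, restriction, or replacement
    if score >= 2:
        return "medium"
    return "low"         # monitor


print(risk_tier("regulated", "write", False),
      risk_tier("internal", "read", True),
      risk_tier("public", "read", False))  # high medium low
```

Coarse tiers are easier to turn into policy than raw scores: "high" routes to review, "low" stays under monitoring.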

5. How Does CloudEagle.ai Solve Shadow AI Discovery?

CloudEagle.ai addresses the challenge with a comprehensive, multi-layered discovery and governance approach that gives enterprises full visibility into all AI usage, whether sanctioned or hidden, and enables teams to manage risk systematically.

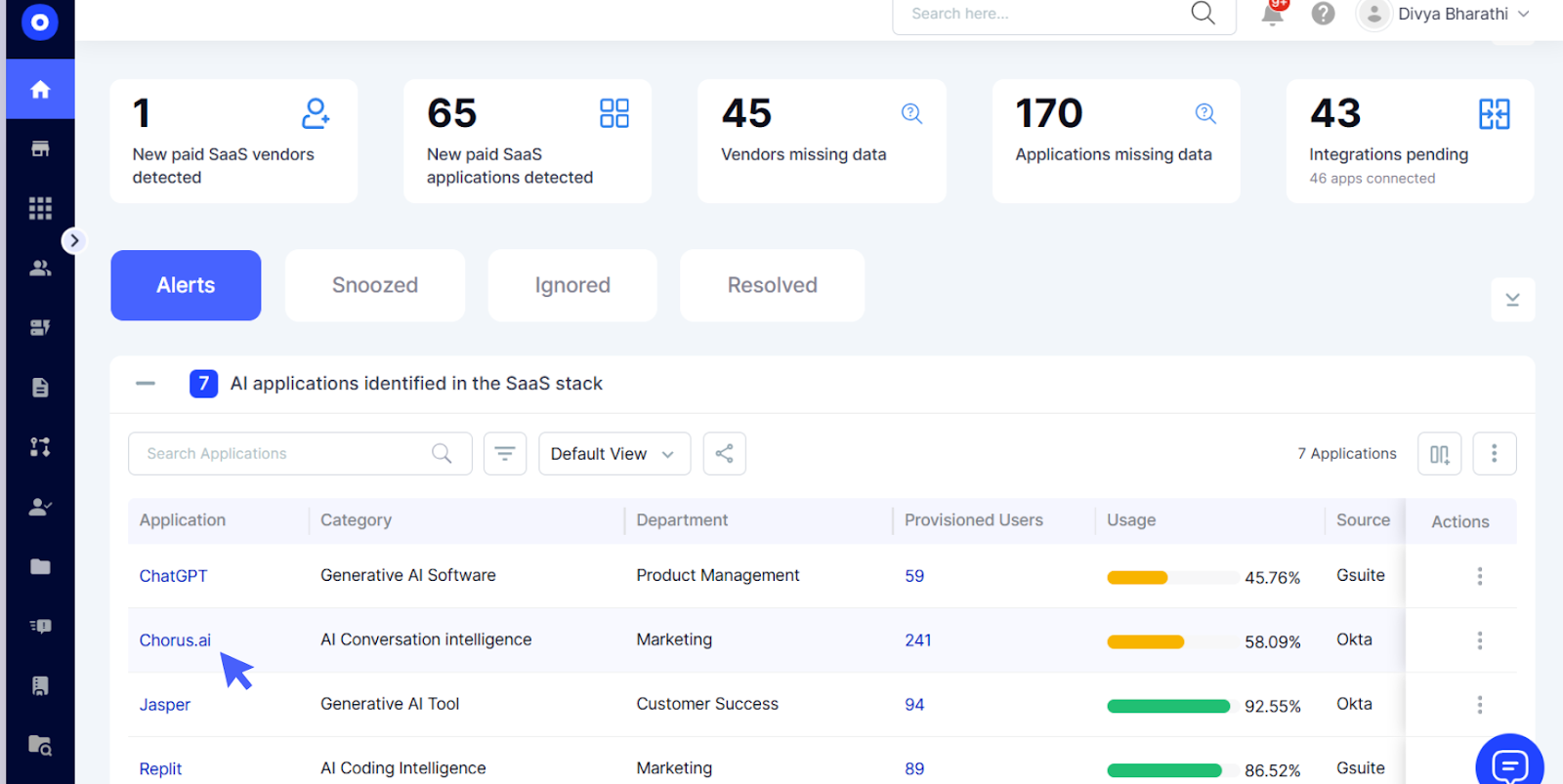

A. AI-Powered Detection Engine

CloudEagle uses smart discovery to detect:

- Standalone AI tools employees use independently (e.g., ChatGPT, Gemini, Claude).

- Embedded AI features inside existing SaaS apps (e.g., AI in Notion, Salesforce, HubSpot).

- Browser-based AI activity and AI extensions/plugins that bypass identity systems.

This goes beyond typical security tools by correlating identity, usage, and spend data to uncover AI tools that aren’t integrated into corporate identity systems.

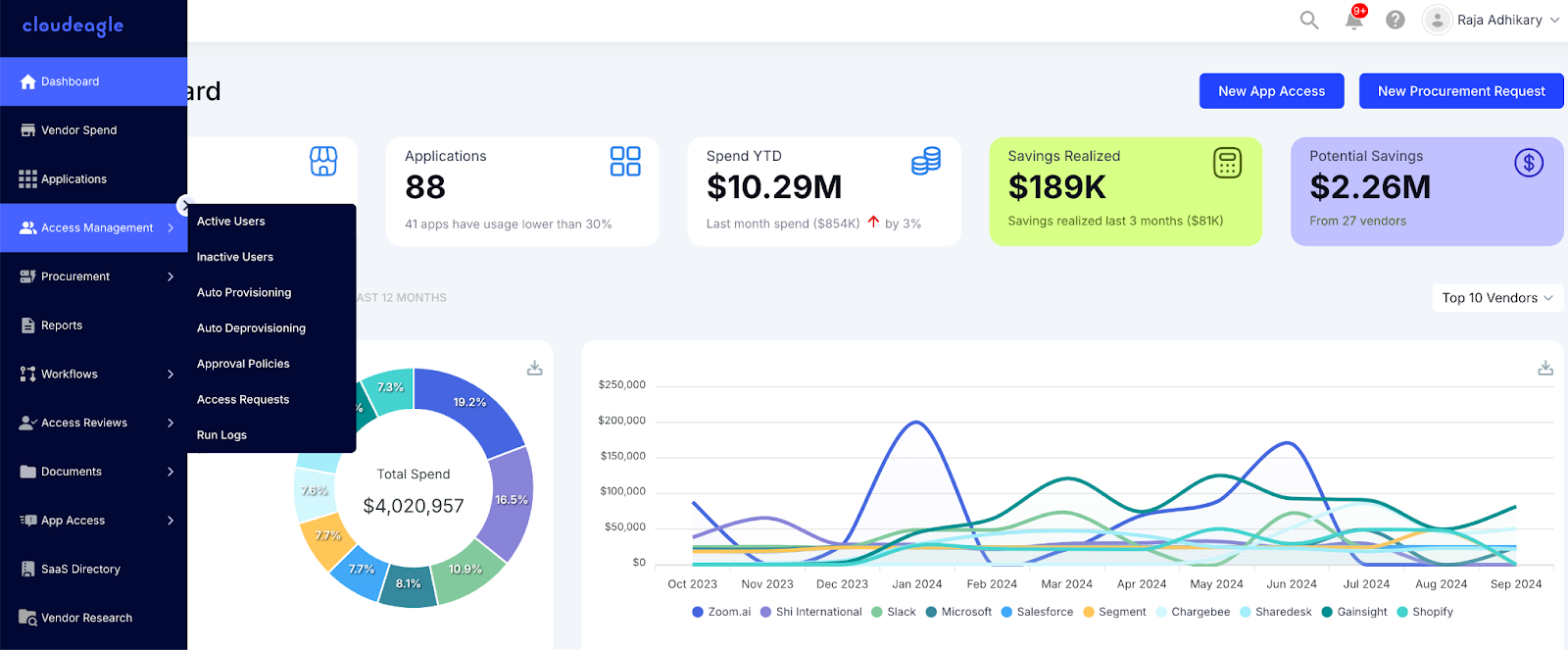

B. Unified AI & SaaS Inventory

CloudEagle builds a centralized inventory of:

- Shadow AI tools,

- Embedded AI features,

- External AI accessed through browsers.

This dashboard shows:

- Which users and departments are using AI,

- How frequently AI is used,

- Whether the AI usage is approved or risky,

- Which activities expose sensitive or regulated data.

Instead of relying on logs from Single Sign-On (SSO) alone, it stitches together signals from usage, spend, HR, and identity systems.

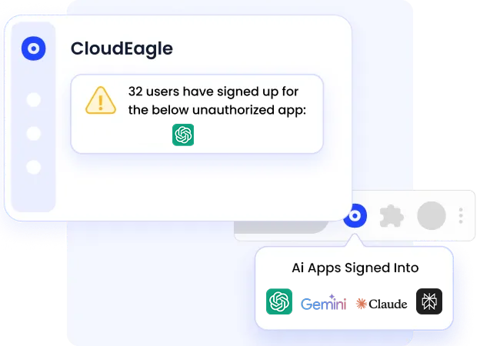
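Stitching signals into one inventory can be pictured as a merge keyed by tool name. The sketch below is a generic illustration, not CloudEagle's implementation or API: it assumes hypothetical per-source inputs (SSO apps, browser-observed apps, spend per tool) and omits HR attribution for brevity.

```python
def build_inventory(
    sso_apps: set[str],
    browser_apps: set[str],
    spend_by_tool: dict[str, float],
) -> dict[str, dict]:
    """Merge per-source observations into one record per tool."""
    inventory = {}
    for tool in sorted(sso_apps | browser_apps | set(spend_by_tool)):
        sources = [name for name, seen in (
            ("sso", tool in sso_apps),
            ("browser", tool in browser_apps),
            ("spend", tool in spend_by_tool),
        ) if seen]
        inventory[tool] = {
            "sources": sources,
            "in_sso": tool in sso_apps,
            "annual_spend": spend_by_tool.get(tool, 0.0),
        }
    return inventory


inv = build_inventory({"Notion"}, {"ChatGPT", "Notion"}, {"ChatGPT": 240.0})
print(inv["ChatGPT"])  # {'sources': ['browser', 'spend'], 'in_sso': False, 'annual_spend': 240.0}
```

A record with spend but `in_sso` set to `False` is exactly the kind of tool an SSO-only view would never show.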

C. Continuous, Real-Time Monitoring

CloudEagle continuously watches for new or changing Shadow AI behaviors and:

- Triggers alerts when unapproved tools are discovered,

- Identifies anomalous patterns or risky AI usage,

- Detects browser-level or feature-level AI activities that wouldn’t show up in traditional IT logs.

This live visibility keeps governance up to date as AI adoption evolves rapidly.
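Two of the checks above, alerting on newly discovered unapproved tools and spotting anomalous usage, can be approximated with a scan-to-scan diff and a z-score test. This is a generic sketch under assumed inputs (sets of tool names per scan, daily event counts), not how CloudEagle implements monitoring; the 3-standard-deviation threshold is an arbitrary default.

```python
import statistics


def new_unapproved_tools(previous: set[str], current: set[str], approved: set[str]) -> list[str]:
    """Tools present in the latest scan, absent from the prior one, and not on the approved list."""
    return sorted((current - previous) - approved)


def is_usage_spike(history: list[int], today: int, z_threshold: float = 3.0) -> bool:
    """Flag today's event count if it sits more than z_threshold standard deviations above history."""
    if len(history) < 2:
        return False  # not enough data to estimate variation
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return today > mean  # flat history: any increase is unusual
    return (today - mean) / stdev > z_threshold


for tool in new_unapproved_tools({"ChatGPT"}, {"ChatGPT", "Otter", "Copilot"}, {"Copilot"}):
    print(f"ALERT: unapproved AI tool detected: {tool}")  # ALERT: unapproved AI tool detected: Otter
print(is_usage_spike([10, 12, 11, 9, 10, 11, 12], 40))  # True
```

A z-score is a deliberately simple baseline; it ignores weekly seasonality, so real monitoring would likely compare against the same weekday or use a longer history.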

D. Risk-Aware Classification & Context

Detected AI activity isn’t just flagged; it’s scored and enriched with:

- Risk context (e.g., whether sensitive data was involved),

- User and department metadata from HR systems,

- Usage patterns (how often AI is used and by whom),

- Vendor and contract details for spend correlation.

This means IT, Security, and Procurement get a risk-aware picture, not just a list of tools.
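Deciding "whether sensitive data was involved" usually starts with pattern detectors run over prompt or upload text. The sketch below is a deliberately small, hypothetical detector set (email addresses, US SSN format, Luhn-checked card numbers), not CloudEagle's classifier; real data-loss-prevention tooling covers far more data types and formats.

```python
import re

# Hypothetical, minimal detector set for illustration.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def luhn_valid(number: str) -> bool:
    """Luhn checksum, used to discard random digit runs that merely look like card numbers."""
    digits = [int(c) for c in number if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return len(digits) >= 13 and total % 10 == 0


def sensitive_categories(text: str) -> set[str]:
    """Return the names of detectors that fire on the text."""
    found = set()
    for name, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            if name == "card_number" and not luhn_valid(match.group()):
                continue
            found.add(name)
            break
    return found


prompt = "Summarize: contact jane.doe@example.com, SSN 123-45-6789, card 4111 1111 1111 1111"
print(sorted(sensitive_categories(prompt)))  # ['card_number', 'email', 'us_ssn']
```

The returned categories can then feed a risk score as the "data sensitivity" input, rather than being treated as a verdict on their own.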

E. Governance & Enforcement Automation

Once Shadow AI is discovered and assessed, CloudEagle lets teams:

- Set policies and rules for approved vs. unapproved AI access,

- Automatically block or restrict high-risk tools or activities,

- Route approval requests to relevant stakeholders,

- Provide audit-ready logs and reports for compliance reviews.

Policies can be applied in real time, not just during periodic audits.
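The policy flow above (approved vs. unapproved, block high risk, route the rest for approval, keep an audit trail) can be sketched as a single decision function. The rule order, names, and log fields are hypothetical and chosen for illustration; this is not CloudEagle's policy engine.

```python
from datetime import datetime, timezone
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    BLOCK = "block"
    REQUIRE_APPROVAL = "require_approval"  # route to a stakeholder


def evaluate_access(user: str, tool: str, risk: str, approved: set[str],
                    blocked: set[str], audit_log: list[dict]) -> Decision:
    """Apply a simple approved/blocked/risk policy and record the outcome for audits."""
    if tool in blocked:
        decision = Decision.BLOCK
    elif tool in approved:
        decision = Decision.ALLOW
    elif risk == "high":
        decision = Decision.BLOCK
    else:
        decision = Decision.REQUIRE_APPROVAL
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "tool": tool,
        "risk": risk,
        "decision": decision.value,
    })
    return decision


log: list[dict] = []
print(evaluate_access("ana@example.com", "Otter", "medium", {"Copilot"}, {"SketchyGPT"}, log))
# Decision.REQUIRE_APPROVAL
```

Checking the explicit block list before the approved list means an emergency block always wins, and logging every decision (not just denials) is what makes the trail usable in an audit.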

6. Final Thoughts

Shadow AI isn’t a future problem; it’s already embedded in everyday enterprise workflows. Employees will continue using AI tools because they deliver real value. The risk lies not in adoption, but in the lack of visibility and control.

Without a Shadow AI discovery platform, organizations operate with blind spots across data usage, access, and compliance. Traditional tools simply weren’t designed to detect or govern AI usage at scale.

CloudEagle.ai helps enterprises regain AI usage visibility, classify risk, and build practical AI governance without slowing innovation.

Book a free demo to see how CloudEagle.ai discovers and governs Shadow AI.

Frequently Asked Questions

1. What is Shadow AI in enterprises?

Shadow AI is the use of AI tools or AI features without formal approval, visibility, or governance from IT or security teams.

2. How can companies detect Shadow AI usage?

By using a Shadow AI discovery platform that analyzes usage, login, spend, and SaaS signals instead of relying only on SSO.

3. Why is Shadow AI dangerous for compliance?

Because it creates undocumented data flows, unmanaged access, and audit blind spots that regulators increasingly scrutinize.

4. Is ChatGPT Shadow AI?

ChatGPT becomes Shadow AI when it’s used for work purposes without approval, monitoring, or governance controls in place.
