HIPAA Compliance Checklist for 2025

AI adoption is exploding across enterprises, but oversight isn’t keeping up.

According to McKinsey, only 21% of organizations feel adequately prepared to govern AI risk, even though nearly every team is rapidly experimenting with AI tools, copilots, and automated workflows.

That gap has made AI governance one of the most urgent priorities for CIOs, CISOs, and legal teams, because without clear policies, visibility, controls, and monitoring, AI becomes an unmanaged risk surface.

This guide breaks down what AI governance is, what an AI governance framework includes, the tools enterprises use, and the best practices to get it right.

TL;DR

- AI governance ensures AI delivers value without risking security, compliance, or trust.

- Frameworks must cover visibility, access controls, accountability, compliance, and risk scoring.

- Governance tools automate discovery, monitoring, approvals, and audit readiness.

- CloudEagle.ai closes execution gaps by detecting Shadow AI, enforcing controls, and simplifying access reviews.

- Enterprises that operationalize governance today innovate faster and stay ahead of regulatory pressure.

1. What Is AI Governance?

AI governance is the set of policies, controls, processes, and oversight mechanisms that ensure AI tools are used responsibly, securely, and in compliance with regulations.

It defines how organizations manage:

- AI usage and visibility

- Data privacy and security

- Access controls and accountability

- Ethical and bias considerations

- Accuracy and reliability of AI outputs

- Compliance with standards and regulations such as GDPR, SOC 2, ISO, and HIPAA

- Risk around Shadow AI and unmanaged tools

In short, AI governance ensures that AI delivers business value without compromising security, compliance, or trust.

2. What Are the Key Components of an AI Governance Framework?

A strong AI governance framework brings structure, visibility, and control to an otherwise unpredictable technology category.

a. AI usage visibility

Before you control AI, you have to see it. Most companies don’t actually know which AI apps their teams are quietly experimenting with, which opens the door to Shadow AI: unmanaged tools used without IT’s awareness.

A governance framework must provide:

- A complete inventory of AI tools

- Visibility into user-level activity

- Identification of unapproved or risky tools

- Monitoring of AI embedded inside existing SaaS apps

If you don’t know what’s being used, you can’t govern or secure it.
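
As a rough illustration of the inventory idea above, the sketch below compares observed logins against an approved list and reports which users touched tools that are not on it. The event format, the tool names, and the `APPROVED_AI_TOOLS` set are all assumptions made for this example; real discovery data would come from sources such as SSO logs or expense records.

```python
from collections import defaultdict

# Hypothetical approved inventory; the tool names are illustrative only.
APPROVED_AI_TOOLS = {"approved-copilot", "approved-chat"}


def find_unapproved_tools(login_events, approved=APPROVED_AI_TOOLS):
    """Group users by AI tool and keep only tools missing from the approved inventory."""
    users_by_tool = defaultdict(set)
    for event in login_events:
        users_by_tool[event["tool"]].add(event["user"])
    return {
        tool: sorted(users)
        for tool, users in users_by_tool.items()
        if tool not in approved
    }


# Assumed sample data: each event records who logged in to which tool.
events = [
    {"user": "ana", "tool": "approved-chat"},
    {"user": "ben", "tool": "notes-summarizer"},
    {"user": "ana", "tool": "notes-summarizer"},
]

print(find_unapproved_tools(events))  # {'notes-summarizer': ['ana', 'ben']}
```

Keeping the approved list as plain data means the same check can be rerun whenever the inventory changes.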

b. Access controls and permissions

AI runs on data, and that makes it dangerous when access isn’t tightly managed. When tools touch contracts, code, customer records, or HR data, you need guardrails, not good intentions.

Governance requires:

- Role-based access controls

- Multi-factor authentication

- Limitations on who can use which AI tools

- Guardrails around data inputs and outputs

This minimizes unauthorized access, misuse, and costly leaks.
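
To make the guardrail idea concrete, here is a minimal sketch of a role-based check that also requires multi-factor authentication. The roles, tool names, and the `ROLE_POLICY` mapping are hypothetical; in practice such a policy would usually live in an identity provider rather than in application code.

```python
# Hypothetical policy: which roles may use which AI tools.
ROLE_POLICY = {
    "engineer": {"code-assistant"},
    "hr": {"resume-screener"},
}


def can_use(role, tool, mfa_verified):
    """Allow access only if MFA succeeded and the role is permitted to use the tool."""
    allowed_tools = ROLE_POLICY.get(role, set())  # unknown roles get no access
    return bool(mfa_verified) and tool in allowed_tools


print(can_use("engineer", "code-assistant", mfa_verified=True))   # True
print(can_use("engineer", "resume-screener", mfa_verified=True))  # False
print(can_use("hr", "resume-screener", mfa_verified=False))       # False
```

The check denies by default: a role or tool that is missing from the policy is treated as not permitted.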

c. Model accountability and evaluation

Once organizations start training or tuning their own AI models, accountability becomes non-negotiable.

You need more than performance metrics; you need proof of how each model works and how it evolves.

Governance should include:

- Evaluation frameworks to measure accuracy, bias, and drift

- Documentation of training data sources

- Versioning and model lineage

- Approval workflows for model deployment

Enterprises must understand how a model behaves, why it behaves that way, and what changed between versions.
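
One simple way to track "what changed between versions" is to score each model version on the same labeled evaluation set and flag a drop in accuracy. The sketch below assumes plain accuracy as the metric and a hypothetical tolerance of 0.05; real evaluation frameworks typically track bias and other metrics as well.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the labels."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and equal in length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def drift_report(baseline_acc, current_acc, tolerance=0.05):
    """Flag a model version whose accuracy dropped more than the assumed tolerance."""
    drop = baseline_acc - current_acc
    return {"drop": round(drop, 4), "needs_review": drop > tolerance}


# Assumed evaluation data for two versions of the same model.
labels = [1, 0, 0, 1]
v1_predictions = [1, 0, 0, 1]
v2_predictions = [1, 0, 1, 1]

report = drift_report(accuracy(v1_predictions, labels), accuracy(v2_predictions, labels))
print(report)  # {'drop': 0.25, 'needs_review': True}
```

A flagged version would then go through the approval workflow before deployment rather than being blocked automatically.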

d. Regulatory and compliance alignment

AI risk is increasingly compliance risk, and regulators aren’t taking a hands-off approach.

Laws now expect firms to demonstrate defensibility, not just say they’re compliant.

A governance framework must ensure alignment with:

- GDPR data processing rules

- SOC 2 control requirements

- ISO/IEC 42001 (the AI management system standard)

- HIPAA and PCI for regulated industries

- AI-specific regulations that are emerging globally

Regulators increasingly expect companies to prove AI compliance, not assume it.

3. What Are AI Governance Tools?

Even with policies defined, enforcement at scale isn’t humanly possible. AI governance tools fill this gap; they give enterprises automation, discovery, tracking, and audit mechanisms that ensure rules are followed in real life.

a. AI risk assessment tools

Before approving an AI system, organizations need to know what they’re getting into. Risk assessment platforms surface the hidden exposure behind AI usage by evaluating:

- Data sensitivity

- Third-party vendor risk

- Model reliability and bias

- Exposure risks from Shadow AI

They help teams identify and prioritize the riskiest tools and scenarios.

b. AI policy & compliance platforms

Policies don’t enforce themselves; that’s where compliance systems step in. These platforms operationalize governance by managing:

- AI usage policies

- Approval workflows

- Data handling rules

- Documentation for auditors

They keep human oversight aligned with AI growth instead of chasing it.

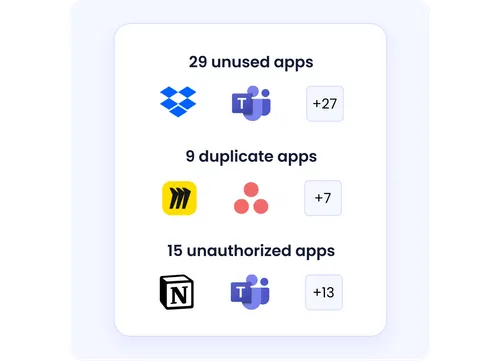

c. AI monitoring and tracking solutions

Governance needs more than static documents; it needs always-on visibility. Monitoring tools give that by discovering and tracking AI usage across the enterprise:

- AI app discovery

- Usage tracking and access logs

- Spend monitoring

- Compliance alerts

- Drift detection for custom models

These tools act as a real-time “AI visibility platform” that reduces blind spots.

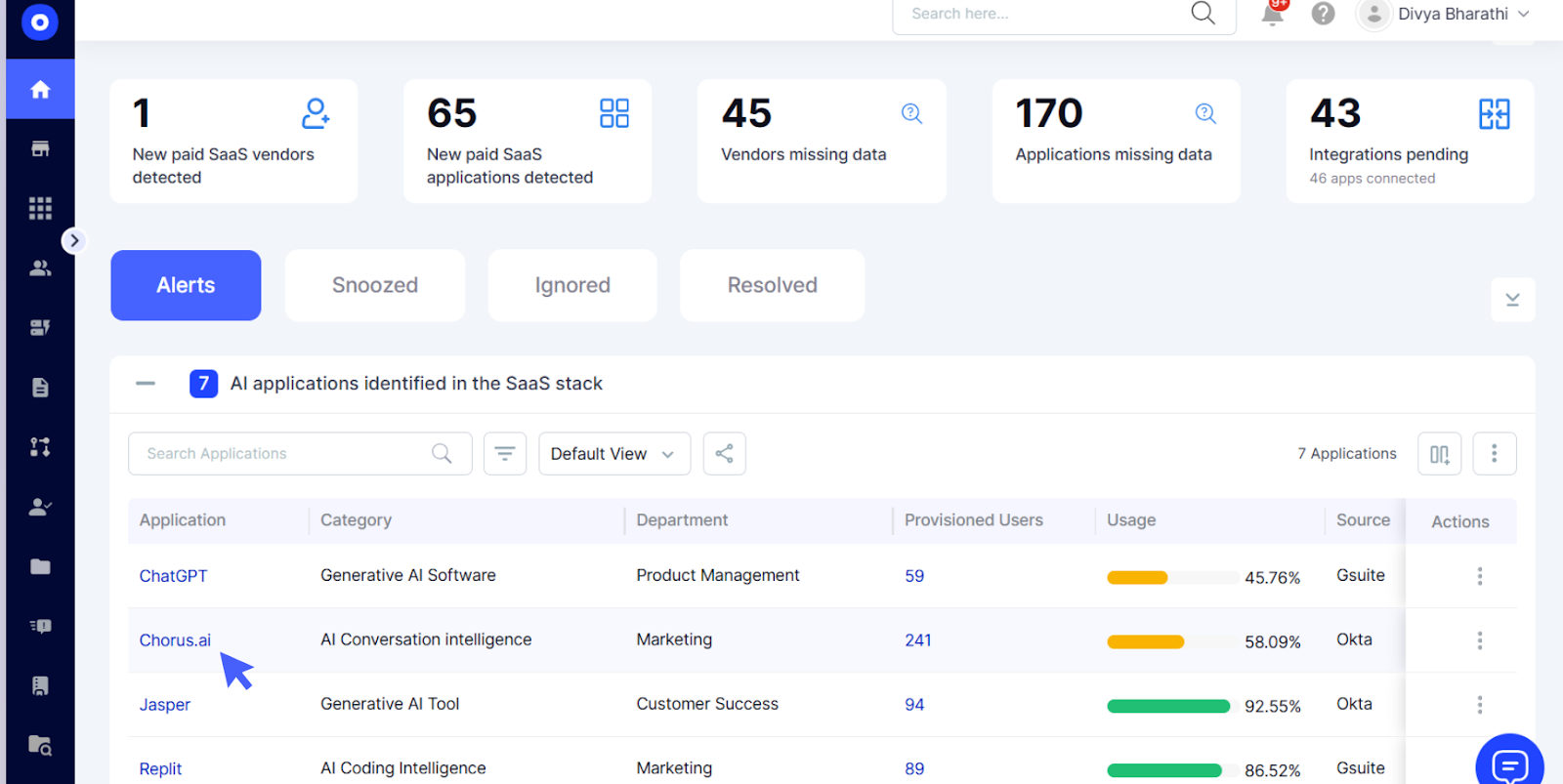

4. How Does CloudEagle.ai Support AI Governance?

AI governance sounds great in theory, but most companies struggle to execute it because they lack visibility, enforcement, and accountability.

CloudEagle.ai solves this gap by giving IT, Security, and Procurement one command center to monitor, govern, and control every AI tool, whether it’s free, employee-expensed, embedded inside SaaS, or completely unsanctioned.

Key Capabilities for AI Governance

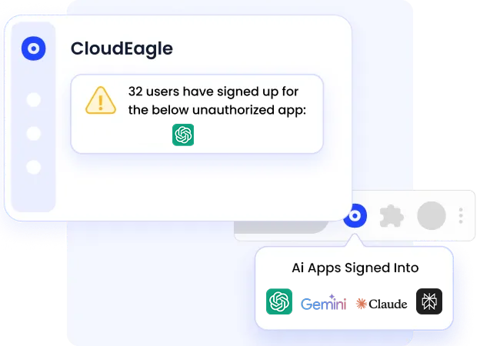

a. AI Tool Discovery & Shadow AI Detection

AI governance begins with discovery, because you can’t secure what you can’t see.

CloudEagle.ai automatically reveals every AI tool employees log into, even those outside SSO or bypassing IT entirely.

It does this by analyzing login data, browser traffic, expense logs, and corporate card transactions, which makes it well suited to detecting Shadow AI. Today, nearly 60% of AI/SaaS usage happens without IT awareness.

b. AI Risk Scoring & Policy Enforcement

Once visibility is achieved, the next step is risk differentiation. CloudEagle.ai doesn’t treat all AI tools equally, because they aren’t.

It automatically evaluates each app based on:

- Data sensitivity

- User roles (admin/user)

- Usage behaviors

- Department context

- Integration and compliance risk

This enables risk-based AI governance, helping teams enforce rules that scale without slowing innovation.
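
For readers who want to see how risk-based scoring can work in principle, the sketch below combines the five factors listed above into a single 0 to 100 score using a weighted sum. This is not CloudEagle.ai's actual scoring method: the weights, the 0 to 5 rating scale, and the tier thresholds are all assumptions chosen for illustration.

```python
# Hypothetical weights (they sum to 100); the factors come from the list above,
# but the weighting is an assumption made for this example.
WEIGHTS = {
    "data_sensitivity": 30,
    "user_roles": 20,
    "usage_behaviors": 20,
    "department_context": 10,
    "integration_compliance": 20,
}
MAX_RATING = 5  # each factor is rated from 0 (no risk) to 5 (highest risk)


def risk_score(ratings):
    """Weighted sum of factor ratings, scaled to a 0-100 score."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    total = sum(WEIGHTS[factor] * ratings[factor] for factor in WEIGHTS)
    return total / MAX_RATING


def risk_tier(score):
    """Map a score to a tier using assumed thresholds."""
    if score >= 70:
        return "high"
    if score >= 40:
        return "medium"
    return "low"


# Assumed ratings for one hypothetical AI app.
example_app = {
    "data_sensitivity": 5,
    "user_roles": 3,
    "usage_behaviors": 2,
    "department_context": 1,
    "integration_compliance": 4,
}
score = risk_score(example_app)
print(score, risk_tier(score))  # 68.0 medium
```

Tiers like these let stricter policies apply only to high-risk tools, which is how risk-based governance avoids slowing down low-risk usage.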

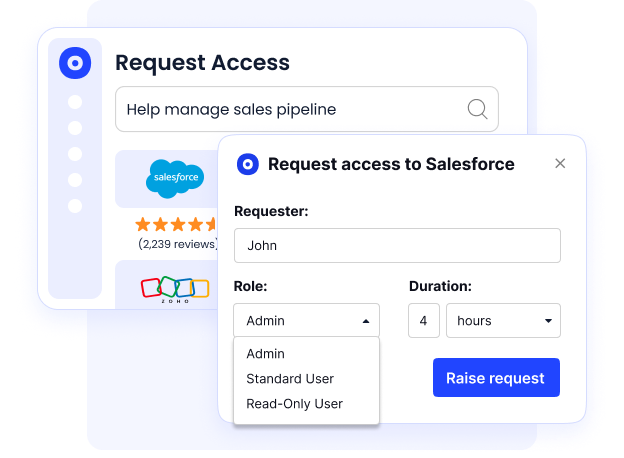

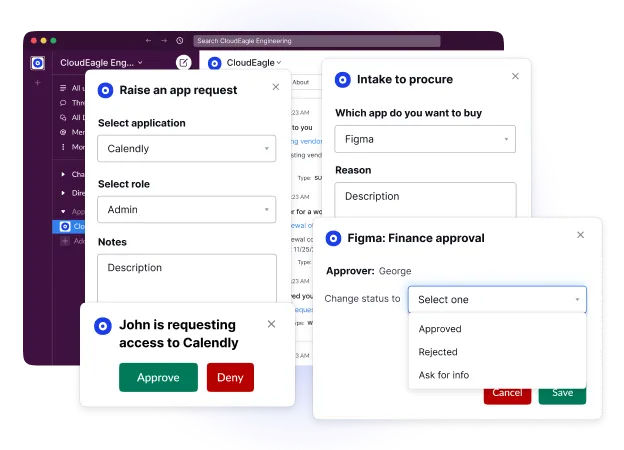

c. Centralized App Request Catalog for AI Tools

Instead of employees signing up for AI tools ad hoc, CloudEagle.ai brings structure with a self-service catalog.

Here, requests follow controlled flows:

- Role-based approvals

- IT/Security/Legal review

- Policy validation

It creates friction where needed, without blocking productivity, so AI apps aren’t adopted blindly.

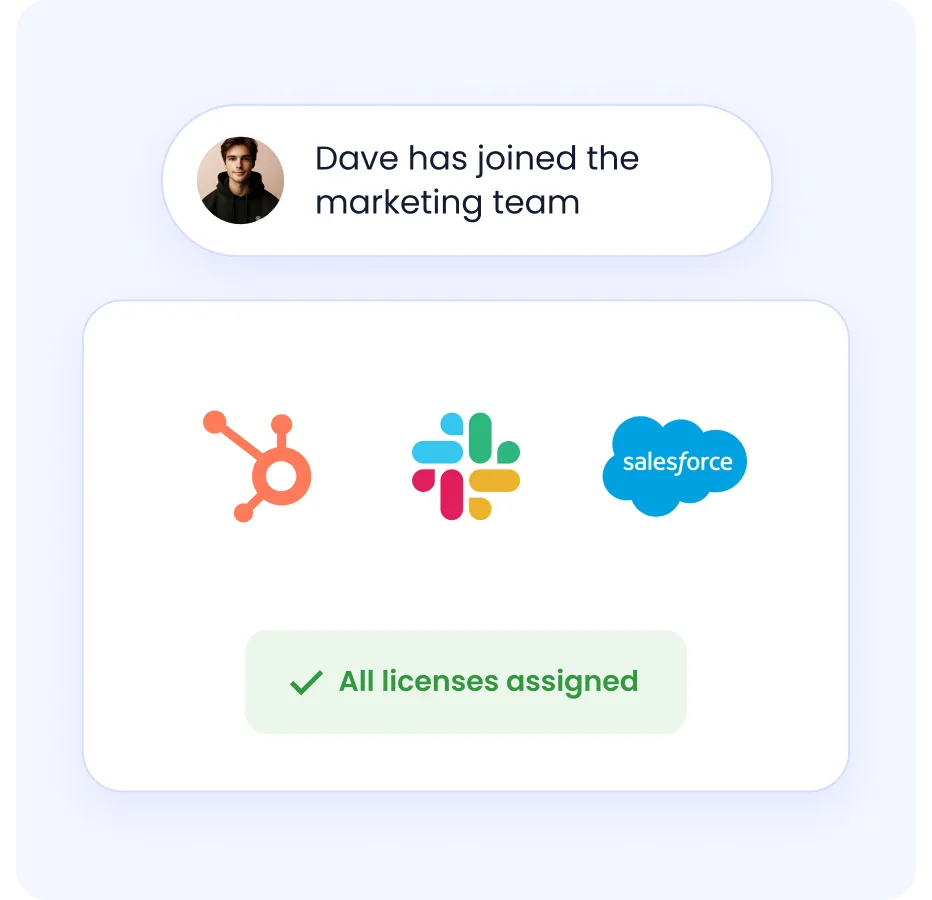

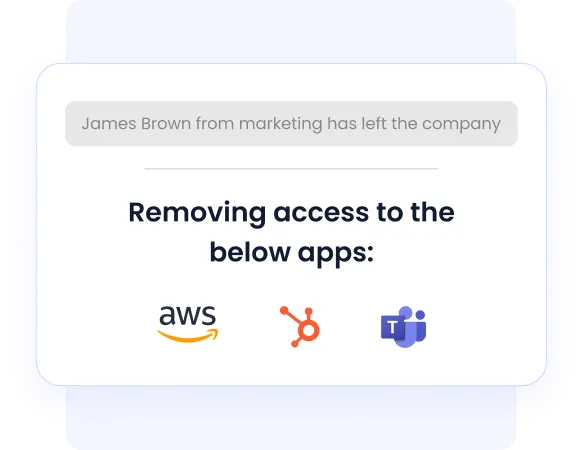

d. Automated Provisioning & Deprovisioning for AI Applications

Access control shouldn’t break just because an app isn’t behind Okta or SailPoint.

CloudEagle.ai provisions and deprovisions AI tools automatically, including unmanaged, direct-login apps, revoking access, removing stale accounts, and logging approvals.

With 48% of ex-employees retaining access to apps, this automation becomes non-negotiable.
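
The core of a deprovisioning check can be sketched as a comparison between app accounts and the current employee roster. The data shapes and names below are assumptions for illustration; an actual integration would pull accounts from each app and the roster from an HR system, then call the app to revoke access.

```python
def accounts_to_revoke(app_accounts, active_employees):
    """Return (app, user) pairs for accounts whose owner is not an active employee."""
    active = set(active_employees)
    return sorted(
        (account["app"], account["user"])
        for account in app_accounts
        if account["user"] not in active
    )


# Assumed sample data: "cy" has left the company but still holds two accounts.
accounts = [
    {"app": "chat-assistant", "user": "ana"},
    {"app": "chat-assistant", "user": "cy"},
    {"app": "code-assistant", "user": "cy"},
]
roster = ["ana", "ben"]

print(accounts_to_revoke(accounts, roster))
# [('chat-assistant', 'cy'), ('code-assistant', 'cy')]
```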

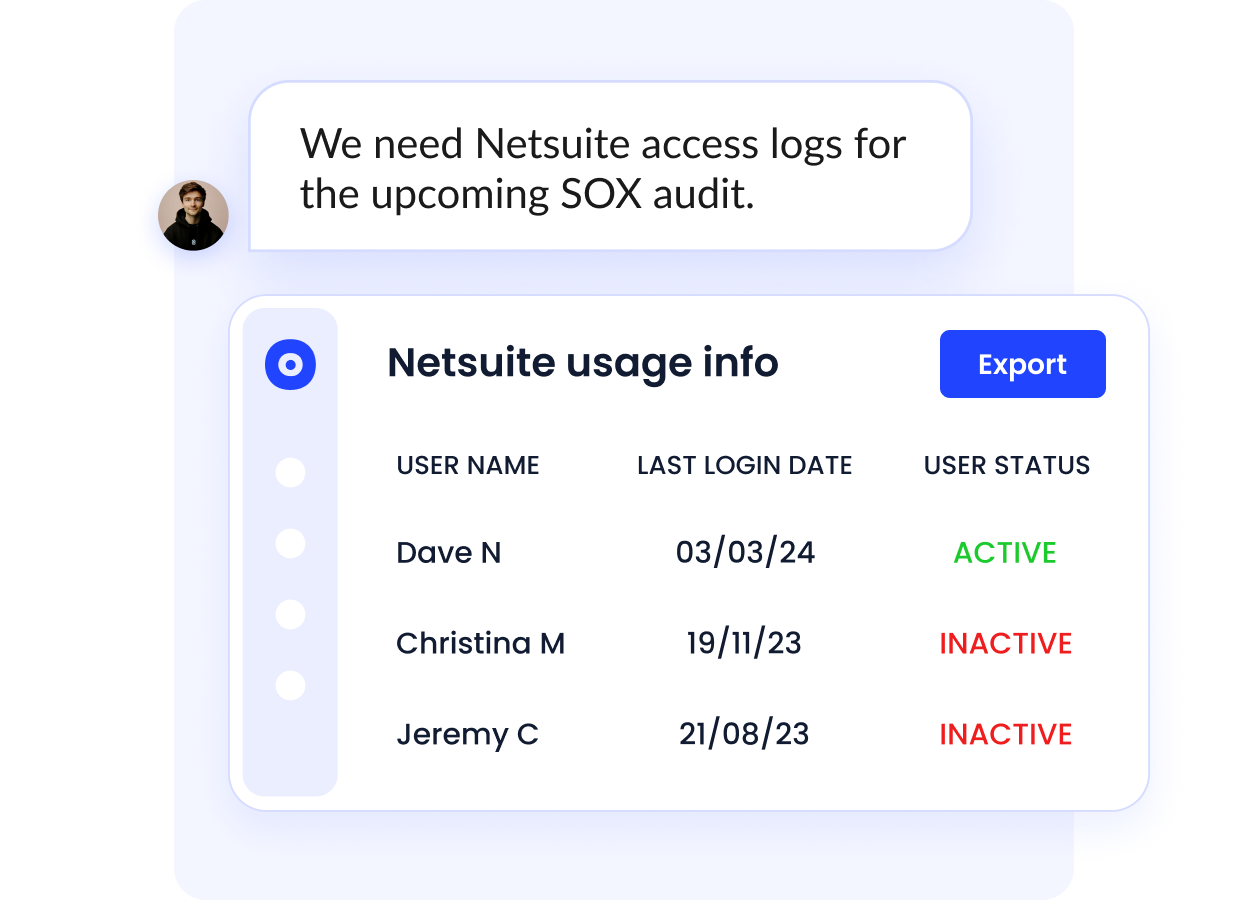

e. Continuous Access Reviews for AI Tools

Governance isn’t a one-time cleanup; it’s continuous oversight.

CloudEagle.ai automates certifications for every AI tool, detecting:

- Overprivileged access

- Misuse or privilege creep

It generates SOC 2-ready audit logs, eliminating manual spreadsheets, a pain point for 95% of companies still performing access reviews manually.

f. Audit-Readiness for AI Usage

Governance matters most when auditors knock.

CloudEagle.ai provides:

- Full audit trails

- Approval and access logs

- Evidence for SOC 2, GDPR, ISO, and emerging AI standards

It transforms AI oversight from reactive to defensible.

5. What Are AI Governance Best Practices?

Strong AI governance isn’t just about tools; it’s about creating alignment, automating oversight, and ensuring every AI decision supports responsible growth.

a. Build cross-department AI ownership

AI cannot be governed from one silo. The most resilient organizations treat AI ownership as a shared responsibility between:

- IT (visibility and security)

- Legal (risk, contracts, compliance)

- Finance (spend control)

- HR (people and data ethics)

- Business teams (value realization)

This alignment ensures risk mitigation isn’t slowing innovation; it’s enabling it.

b. Use automation for oversight & approvals

AI moves too fast for humans to manually govern.

Automation makes governance scalable by enforcing:

- Approval workflows

- Usage visibility

- Risk scoring

- Role-based access review

This keeps governance proactive, not bureaucratic.

c. Track value, risk & business outcomes

AI governance shouldn’t just minimize risk; it should maximize ROI. Organizations should continuously measure:

- Spend vs. value

- Productivity gains and high-impact outcomes

- Error reduction

- Compliance posture

- Shadow AI reduction

This helps CIOs justify investment and evolve their governance strategy.

6. Conclusion

AI adoption is surging, often without controls, making governance a top priority for CIOs and risk leaders. Enterprises need frameworks that deliver visibility, accountability, and defensibility. The sooner governance begins, the less exposure organizations face.

A structured model lets teams balance productivity with safety, tracking usage, enforcing access, aligning with compliance, and evaluating model behavior. With automation in place, enterprises reduce manual burden and make governance continuous, not reactive.

CloudEagle.ai closes the execution gap by detecting Shadow AI, enforcing approvals, automating access reviews, and generating audit-ready evidence across every AI tool. It helps teams govern AI responsibly without slowing innovation.

Book a free demo today because governance shouldn’t be guesswork. See how CloudEagle.ai makes it real.

7. FAQ

- What is an example of AI governance?

Requiring employees to submit access requests for AI tools, with review, approval, usage tracking, and periodic audits. This ensures accountability, data protection, and business justification before AI is used.

- What are the six pillars of AI governance?

Accountability, transparency, fairness, security, compliance, and continuous monitoring. Together, they guide how AI is approved, used, measured, and controlled to ensure responsible and trustworthy outcomes.

- What are the key principles of AI governance?

Responsible use, risk oversight, privacy protection, explainability, and alignment with ethical and business goals. These principles ensure AI decisions are traceable, justified, and safe for people and organizations.

- What are the 4 pillars of AI?

Data, algorithms, compute infrastructure, and governance. Data fuels AI, algorithms process it, infrastructure powers it, and governance ensures it is controlled, ethical, and aligned with business value.

- What are the 7 C's of AI?

Context, cognition, creativity, collaboration, communication, compassion, and control. They represent how AI perceives, reasons, creates, interacts, supports humans, behaves responsibly, and stays aligned with oversight.
