Agentic AI Governance: Challenges, Frameworks, and Best Practices

Agentic AI is changing how enterprises work. Unlike traditional AI, which only responds to prompts, agentic AI can act on its own: it completes multi-step tasks, interacts with systems, and makes decisions with little or no human supervision.

This creates huge opportunities, but it also introduces new risks. Without the right controls, autonomous AI agents can access the wrong data, trigger unintended workflows, create compliance issues, or operate without proper oversight.

That’s why agentic AI governance is now essential.

Let’s explore what agentic AI governance is, why enterprises need it, the challenges involved, and best practices for managing autonomous AI safely.

TL;DR

- Agentic AI governance makes sure autonomous AI systems act safely, ethically, and only within approved limits.

- Traditional AI controls aren’t enough; agentic AI can take multi-step actions on its own, so it needs real-time monitoring, identity controls, and strict oversight.

- The biggest risks include low visibility, unpredictable actions, shadow AI agents, too many permissions, risky API activity, and unclear accountability.

- Best practices: least-privilege access, regular reviews, guardrails, risk scoring, audit logs, kill switches, sandbox testing, and real-time monitoring.

- CloudEagle.ai helps enterprises securely manage AI with automated controls, monitoring, and compliance.

What Is Agentic AI Governance?

Agentic AI governance is the framework of policies, controls, guardrails, and oversight mechanisms used to manage AI systems that can act autonomously, not just make predictions. Unlike traditional AI models that only generate outputs, agentic AI can take real actions, often across multiple applications and data environments.

Agentic AI systems are capable of:

- Planning and executing multi-step tasks

- Making decisions dynamically based on real-time inputs

- Triggering workflows across SaaS tools and internal systems

- Modifying data, files, or system settings

- Interacting with APIs, services, and external agents

- Acting independently without human prompts

Because these systems behave less like software and more like autonomous digital workers, they require stronger governance to ensure they remain safe, compliant, and controllable.

Effective agentic AI governance ensures that:

- AI agents operate within clearly defined boundaries

- Their permissions follow least-privilege principles

- All actions are tracked, explainable, and auditable

- They cannot escalate their own privileges or access sensitive data without authorization

- High-risk workflows require human approval or intervention

- Every autonomous decision complies with internal policies and external regulations

At its core, an agentic AI governance framework focuses on visibility, access control, accountability, and safety—so enterprises can leverage autonomous AI without introducing operational, security, or compliance risks.

What Are the Key Challenges in Agentic AI Governance?

The following are the key challenges in agentic AI governance:

1. Autonomy Risks: Agentic AI systems can make decisions without human intervention, which may lead to unexpected or harmful outcomes if not carefully controlled.

2. Accountability Gaps: When AI agents act independently, it becomes difficult to assign responsibility for errors, damages, or regulatory violations, creating legal and operational challenges.

3. Privacy & Compliance: Autonomous agents often require access to sensitive information, increasing the risk of violating privacy laws and regulatory frameworks such as GDPR, HIPAA, or CCPA.

4. Transparency Issues: Many autonomous AI models operate as “black boxes,” making it hard to understand or explain the reasoning behind their decisions, which complicates audits and stakeholder trust.

5. Data Quality & Bias: AI decisions are only as reliable as the data behind them, both the data models are trained on and the data agents retrieve at runtime. Biased, incomplete, or outdated data can result in unfair or inaccurate outcomes.

6. Security Threats: Elevated permissions for AI agents can be exploited by insiders or external attackers, potentially causing data breaches, system disruptions, or unintended actions.

7. Regulatory Uncertainty: AI laws and guidelines are evolving rapidly and vary across regions, making it challenging to ensure agentic AI systems remain fully compliant globally.

Why Does Agentic AI Require a New Governance Approach?

Agentic AI fundamentally reshapes an enterprise’s risk landscape. While traditional AI models only generate outputs, agentic AI can act, decide, and trigger workflows across multiple systems—often without human supervision.

These systems can:

- Execute tasks inside SaaS applications

- Modify configurations or system settings

- Interact with finance, HR, IT, or operational systems

- Request, generate, or misuse credentials

- Trigger chained automations or external APIs

- Communicate and collaborate with other agents

- Use tools or resources without human approval

This level of autonomy introduces risks that traditional AI governance cannot manage.

Agentic AI needs a new governance layer for the following reasons:

1. Autonomous Decision-Making Creates Unpredictability: Unlike rule-based automation, agentic AI may choose different actions for similar inputs based on its internal reasoning. This makes static review processes and one-time manual checks insufficient.

2. AI Agents Operate Across Multiple Systems Without Central Oversight: Agents span SaaS apps, APIs, internal tools, and cloud environments. This creates cross-system risks that no single team or platform traditionally monitors.

3. Agents Often Require Elevated Access to Do Their Jobs: Without strict controls, they can accumulate excessive privileges or reach sensitive data they do not need. One misconfigured permission can open a massive attack surface.

4. Speed Amplifies the Blast Radius of Mistakes: Agents can execute hundreds or thousands of actions in minutes. A small error, such as acting on the wrong file, triggering the wrong workflow, or calling the wrong API, can cause immediate, large-scale damage.

5. Accountability Becomes Ambiguous: If an AI agent makes a harmful decision, who is responsible? Legal, compliance, and security teams need clear ownership and auditability.

6. Shadow AI Becomes Significantly Harder to Detect: Employees can deploy open-source agents or connect AI tools directly to SaaS apps. These agents run autonomously, outside the visibility of IT or security.

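7. Compliance Requirements Are Evolving to Cover Autonomous Systems: Regulations and frameworks such as the EU AI Act and the NIST AI RMF, along with how auditors apply established standards like SOC 2 and ISO 27001, increasingly call for: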

- Clear documentation of AI decisions

- Access governance for agents

- Continuous monitoring of autonomous behavior

- Explainability and auditability

Traditional governance models built for static ML models cannot meet these demands.

Agentic AI Governance Frameworks: How They Work and Why They Matter

An agentic AI governance framework is the structured set of policies, processes, and controls that ensures autonomous AI systems operate safely and within enterprise boundaries.

A modern framework generally includes:

1. Purpose & Policies: Defines where agentic AI can operate, data sensitivity limits, ethical/compliance rules, risk tolerance, and approval workflows. Sets clear boundaries to align autonomous agents with business goals and prevent misuse.

2. Permissioning & Access Controls: Uses RBAC, ABAC, JIT access, PAM, scoped APIs, and least privilege to ensure agents access only necessary resources. Context-aware permissions adapt dynamically to tasks or risks, preventing unauthorized actions.

3. Monitoring & Guardrails: Provides real-time logging, anomaly detection, policy enforcement, risk scoring, and kill-switches. Tracks agent behavior across planning/action/reflection loops to catch deviations early.

4. Data Governance & Privacy Controls: Classifies sensitive data, applies masking/anonymization, blocks PII/PHI, and logs all data flows. Supports GDPR and HIPAA compliance when agents handle data autonomously.

5. Operational Controls: Manages the agent lifecycle with sandbox testing, deployment standards, incident response, change controls, and versioning. Uses fail-safes to prevent cascading failures.

6. Accountability Framework: Assigns clear roles (owner, reviewer, approver, monitor) using a model such as RACI (Responsible, Accountable, Consulted, Informed) and defines escalation paths. Closes responsibility gaps in multi-agent systems.
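Risk scoring, listed under Monitoring & Guardrails, can start as a weighted sum of risk signals attached to each proposed action. The sketch below is a minimal illustration under stated assumptions: the signal names, weights, and the 40/70 thresholds are hypothetical and are not taken from any standard or product.

```python
from dataclasses import dataclass

# Hypothetical signal weights (sum to 100); tune to your own risk tolerance.
WEIGHTS = {
    "touches_sensitive_data": 40,
    "irreversible": 30,
    "privileged_scope": 20,
    "outside_business_hours": 10,
}

@dataclass
class ActionContext:
    touches_sensitive_data: bool = False
    irreversible: bool = False
    privileged_scope: bool = False
    outside_business_hours: bool = False

def risk_score(ctx: ActionContext) -> int:
    """Sum the weights of every risk signal present on the action (0-100)."""
    return sum(weight for name, weight in WEIGHTS.items() if getattr(ctx, name))

def decide(score: int) -> str:
    """Map a score to an enforcement decision (hypothetical thresholds)."""
    if score >= 70:
        return "block"
    if score >= 40:
        return "require_approval"
    return "allow"

ctx = ActionContext(touches_sensitive_data=True, irreversible=True)
print(risk_score(ctx), decide(risk_score(ctx)))  # 70 block
```

A weighted sum is easy to explain to auditors; teams that need more nuance often layer anomaly scores from monitoring on top of static signals like these.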

How CloudEagle.ai Helps With AI Governance at Scale

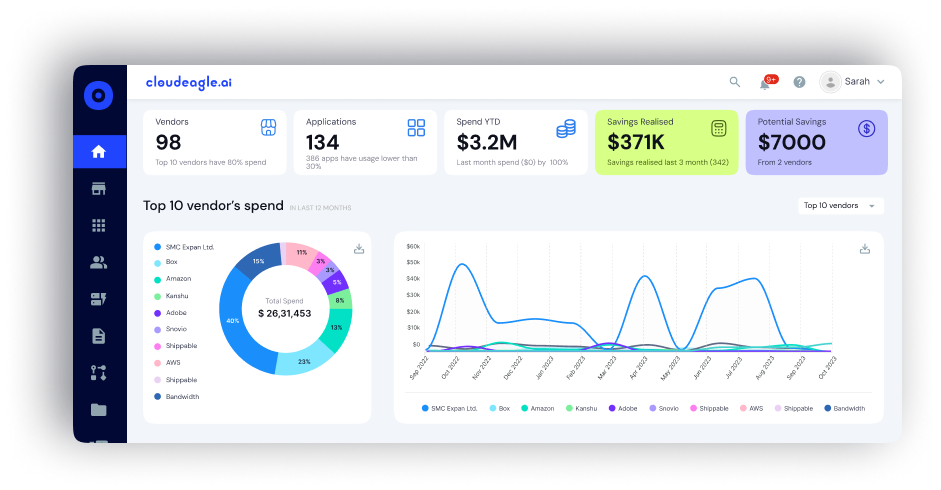

CloudEagle.ai delivers a unified identity and AI-governance control plane that enables safe agentic AI use across the enterprise. Employees can activate AI agents only under controlled, compliant, least-privilege conditions, and real-time monitoring tracks agent behavior, system interactions, and permissions.

Using CloudEagle, organizations gain:

a. Centralized Identity & Access Governance

CloudEagle.ai provides a single platform to manage all users and AI agents across applications and tools. It gives a clear view of who has access to what, making it easier to enforce consistent policies. This reduces the risk of over-privileged accounts or shadow AI. Centralization also simplifies reporting and strengthens overall governance.

b. Automated Provisioning & Deprovisioning

CloudEagle.ai automates access management by giving employees the right AI tools when they join and removing access when they leave or change roles. This prevents errors from manual setup and ensures systems stay secure. Users only get access relevant to their responsibilities, keeping workflows efficient and safe.

See how CloudEagle.ai helped Bloom & Wild streamline employee onboarding and offboarding.

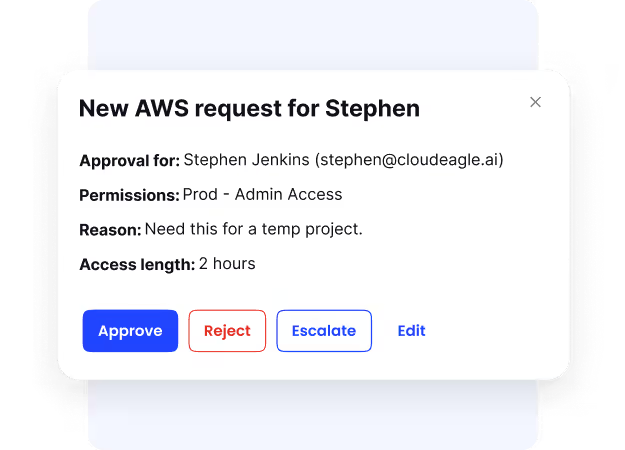

c. Just-In-Time Access for High-Risk AI Actions

For sensitive AI tasks, CloudEagle.ai provides just-in-time access only when it’s needed. Permissions are automatically revoked after the task is complete, reducing the risk of misuse or mistakes.

This approach enforces the “least privilege” principle and ensures high-risk actions are tightly controlled. It is ideal for administrative or critical AI operations.
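To make the just-in-time pattern concrete, here is a generic sketch of time-boxed grants that expire on their own and are revoked as soon as a task finishes. It is not CloudEagle.ai's implementation or API; the `JITAccessBroker` class, the `jit_access` helper, and the permission strings are hypothetical names used for illustration, and an injectable clock is assumed so expiry can be tested.

```python
import time
from contextlib import contextmanager

class JITAccessBroker:
    """Grants time-boxed permissions that expire automatically (illustrative only)."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._grants = {}  # (agent_id, permission) -> expiry timestamp

    def grant(self, agent_id: str, permission: str, ttl_seconds: float) -> None:
        self._grants[(agent_id, permission)] = self._clock() + ttl_seconds

    def revoke(self, agent_id: str, permission: str) -> None:
        self._grants.pop((agent_id, permission), None)

    def is_allowed(self, agent_id: str, permission: str) -> bool:
        expiry = self._grants.get((agent_id, permission))
        if expiry is None:
            return False  # no grant: deny
        if self._clock() >= expiry:
            self.revoke(agent_id, permission)  # clean up the expired grant
            return False
        return True

@contextmanager
def jit_access(broker: JITAccessBroker, agent_id: str, permission: str, ttl_seconds: float):
    """Grant for the duration of one task, then revoke even if the task raises."""
    broker.grant(agent_id, permission, ttl_seconds)
    try:
        yield
    finally:
        broker.revoke(agent_id, permission)

now = [0.0]  # fake clock for the demo
broker = JITAccessBroker(clock=lambda: now[0])
broker.grant("agent-42", "billing:refund", ttl_seconds=300)
print(broker.is_allowed("agent-42", "billing:refund"))  # True
now[0] = 301.0
print(broker.is_allowed("agent-42", "billing:refund"))  # False
```

The TTL is a safety net; the context manager is the primary control, because it revokes access the moment the task ends rather than when the timer runs out.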

d. Role-Based Access Controls (RBAC)

CloudEagle.ai lets organizations assign permissions based on roles, grouping users and AI agents according to responsibilities. Each role has predefined access, ensuring users only interact with tools and data relevant to their work. This reduces errors and simplifies administration.

e. Privileged Access Management (PAM)

High-level AI tasks are controlled through Privileged Access Management, so only authorized users can perform sensitive actions. All privileged activity is logged and monitored, and access is revoked when no longer needed. This reduces the risk of insider threats or mistakes. PAM ensures accountability and secure handling of critical AI functions.

f. Real-Time Monitoring & Risk Alerts for AI Activity

CloudEagle.ai continuously monitors activity across AI tools and users. It generates alerts for unusual or risky behavior, allowing teams to respond quickly. This real-time oversight helps prevent misuse or security risks. Continuous monitoring keeps AI operations safe and compliant.

g. Automated Access Reviews

CloudEagle.ai automates periodic access reviews of who has access to AI tools. It flags unnecessary or outdated permissions, helping prevent privilege creep. Managers can quickly approve or revoke access, ensuring everyone has only what they need. Automated reviews make governance easier and more consistent.

Discover how Dezerv automated its app access review process with CloudEagle.ai.

h. Continuous Compliance & Audit Reporting

CloudEagle.ai tracks all access and activity, making it easy to generate compliance reports. Organizations can quickly show who did what, when, and why. This supports audits and regulatory requirements, ensuring accountability. Continuous reporting keeps AI governance transparent and reliable.

Agentic AI Governance Best Practices for Enterprises

Managing autonomous AI agents requires a governance model far more rigorous than traditional AI oversight. Below are the essential agentic AI governance best practices every enterprise should implement to ensure safety, compliance, and controlled automation.

1. Enforce Strict Least-Privilege Access

Agentic AI must never receive broad or persistent access. Limit permissions using:

- RBAC (Role-Based Access Control)

- JIT (Just-In-Time) access for temporary privileges

- Scoped API credentials with minimal permissions

- Context-aware restrictions for sensitive actions

- Deny-by-default policies as the baseline

This reduces the risk of unauthorized actions, privilege escalation, or unintended data exposure.
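A deny-by-default check can be expressed in a few lines: an action is allowed only if an explicit, scoped grant matches it, and anything unmatched is refused. The sketch below assumes a simple model where grants pair an action with a resource prefix and a maximum data-sensitivity tier (a stand-in for context-aware restrictions); the `Grant` and `AgentPolicy` names and the resource paths are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Grant:
    action: str               # e.g. "read"
    resource_prefix: str      # e.g. "crm/tickets/"
    max_sensitivity: int = 1  # highest data-sensitivity tier this grant covers

@dataclass
class AgentPolicy:
    agent_id: str
    grants: list[Grant] = field(default_factory=list)

def is_permitted(policy: AgentPolicy, action: str, resource: str, sensitivity: int) -> bool:
    """Deny by default: allow only if some explicit grant matches all conditions."""
    for g in policy.grants:
        if (g.action == action
                and resource.startswith(g.resource_prefix)
                and sensitivity <= g.max_sensitivity):
            return True
    return False  # no matching grant, so the action is denied

policy = AgentPolicy("support-agent", [Grant("read", "crm/tickets/", max_sensitivity=2)])
print(is_permitted(policy, "read", "crm/tickets/123", 1))    # True
print(is_permitted(policy, "delete", "crm/tickets/123", 1))  # False: no delete grant
print(is_permitted(policy, "read", "hr/salaries/7", 1))      # False: out of scope
```

An agent with an empty grant list can do nothing, which is the intended baseline; every capability has to be added deliberately.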

2. Continuously Monitor All Agent Activity

Real-time visibility is a core requirement of AI agent governance. Monitor:

- Actions, triggers, and workflows

- Input and output patterns

- Agent intent and reasoning chains

- Privilege usage and abnormal behavior

- Sudden spikes in API calls or agent autonomy

Continuous monitoring ensures you can detect and stop risks before they scale.
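Detecting "sudden spikes in API calls" usually comes down to counting events per agent in a sliding time window. The sketch below is a minimal version that assumes a fixed per-agent limit; `CallRateMonitor` is a hypothetical name, and real deployments typically compare against a learned baseline rather than a constant.

```python
from collections import deque

class CallRateMonitor:
    """Flags an agent whose API calls within a sliding window exceed a limit."""

    def __init__(self, window_seconds: float, max_calls: int):
        self.window = window_seconds
        self.max_calls = max_calls
        self._calls = {}  # agent_id -> deque of call timestamps

    def record(self, agent_id: str, ts: float) -> bool:
        """Record one call at time ts; return True if the agent is now over the limit."""
        q = self._calls.setdefault(agent_id, deque())
        q.append(ts)
        # Drop timestamps that have fallen out of the window.
        while q and q[0] <= ts - self.window:
            q.popleft()
        return len(q) > self.max_calls

monitor = CallRateMonitor(window_seconds=60, max_calls=100)
flags = [monitor.record("agent-7", t * 0.1) for t in range(150)]  # 150 calls in 15 s
print(flags.index(True))  # 100: the 101st call trips the alert
```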

3. Automate Access Reviews for Every AI Agent

AI agents require the same (or stricter) access validation as human identities.

Recommended cadence:

- Monthly reviews for high-risk or high-autonomy agents

- Quarterly reviews for all other agents

Automated access reviews help prevent privilege creep and stale credentials.
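The recommended cadence can be turned into an automated "which reviews are due" check. This sketch approximates monthly as 30 days and quarterly as 90 days, and assumes each agent record carries a risk tier and the date of its last review; the field names are hypothetical.

```python
from datetime import date, timedelta

# Cadence from the recommendation above, approximated in days.
REVIEW_INTERVAL = {"high": timedelta(days=30), "standard": timedelta(days=90)}

def reviews_due(agents: list[dict], today: date) -> list[str]:
    """Return IDs of agents whose last access review is older than their cadence."""
    due = []
    for a in agents:
        interval = REVIEW_INTERVAL["high" if a["risk"] == "high" else "standard"]
        if today - a["last_review"] >= interval:
            due.append(a["id"])
    return due

agents = [
    {"id": "invoice-bot", "risk": "high", "last_review": date(2025, 1, 1)},
    {"id": "faq-bot", "risk": "low", "last_review": date(2025, 1, 1)},
]
print(reviews_due(agents, today=date(2025, 2, 15)))  # ['invoice-bot']
```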

4. Require Sandbox Validation Before Deployment

Never deploy an agent directly into production. All autonomous systems must pass:

- Safety and alignment testing

- Scenario and failure-mode simulations

- Stress testing under varied conditions

- Compliance verification against internal and regulatory standards

This ensures agents behave predictably before they touch real systems.
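One simple way to run scenario simulations is to give the agent a stand-in tool layer that records intended actions instead of executing them, then check the recorded plan against a list of forbidden tools. The sketch assumes an agent is just a function that receives a tools object; `SandboxTools`, `run_scenario`, and the toy `cleanup_agent` are hypothetical.

```python
class SandboxTools:
    """Stand-in tool layer that records intended actions instead of executing them."""

    def __init__(self):
        self.calls = []

    def call(self, tool: str, **kwargs):
        self.calls.append((tool, kwargs))
        return {"status": "simulated"}  # nothing touches real systems

def run_scenario(agent_fn, tools: SandboxTools, forbidden: set[str]) -> list[str]:
    """Run an agent against sandbox tools; return any forbidden tools it tried to use."""
    agent_fn(tools)
    return [tool for tool, _ in tools.calls if tool in forbidden]

# A toy "agent" standing in for real agent logic (hypothetical).
def cleanup_agent(tools):
    tools.call("list_files", folder="/tmp/reports")
    tools.call("delete_file", path="/tmp/reports/q1.csv")

violations = run_scenario(cleanup_agent, SandboxTools(), forbidden={"delete_file"})
print(violations)  # ['delete_file']
```

A failing scenario like this one should block promotion to production until the agent's plan or its permissions are changed.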

5. Maintain Centralized Identity Governance for All Agents

Agent identities must be managed like human identities. Centralize:

- Provisioning & deprovisioning

- Credential rotation

- Agent lifecycle management

- Usage and access reconciliation

- Cross-app activity tracking

This prevents shadow AI agents and unmanaged credentials.
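Usage reconciliation can be sketched as a set comparison between the agent registry and the agent identities actually seen in app or API logs, plus a credential-age check. The sketch assumes the registry maps each agent ID to its last credential rotation time; the function name, agent names, and 90-day rotation limit are hypothetical.

```python
from datetime import datetime, timedelta, timezone

def reconcile(registered: dict[str, datetime], observed: set[str],
              now: datetime, max_key_age: timedelta) -> dict[str, list[str]]:
    """Compare the agent registry with agent identities seen in activity logs."""
    registered_ids = set(registered)
    return {
        # Seen in activity logs but never registered: candidate shadow agents.
        "unregistered": sorted(observed - registered_ids),
        # Registered but not seen in the log window: candidates for deprovisioning.
        "inactive": sorted(registered_ids - observed),
        # Credentials older than the rotation policy allows.
        "rotation_overdue": sorted(agent for agent, rotated in registered.items()
                                   if now - rotated > max_key_age),
    }

now = datetime(2025, 6, 1, tzinfo=timezone.utc)
registry = {
    "hr-onboarding-agent": datetime(2025, 5, 20, tzinfo=timezone.utc),
    "finance-recon-agent": datetime(2025, 1, 10, tzinfo=timezone.utc),
}
seen = {"hr-onboarding-agent", "gpt-sheets-plugin"}
print(reconcile(registry, seen, now, max_key_age=timedelta(days=90)))
```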

6. Enforce Kill-Switches for Immediate Shutdown

Every agent must be stoppable instantly. Kill-switch controls include:

- Immediate access removal

- Workflow termination

- Session destruction

- Action rollback or revocation

Kill-switches ensure rapid containment of harmful or runaway behavior.
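A kill switch only works if every step checks it before acting. The sketch below combines the controls listed above: workflow termination (the check), access removal (a `revoke_access` callback), and rollback (per-step undo functions run newest first). It assumes each workflow step is a `(name, do, undo)` tuple; all names here are hypothetical, and real rollback is rarely as clean as an undo callback.

```python
import threading

class AgentHalted(Exception):
    """Raised when the kill switch has been triggered."""

class KillSwitch:
    def __init__(self):
        self._event = threading.Event()  # thread-safe flag shared with workers
        self.reason = ""

    def trigger(self, reason: str) -> None:
        self.reason = reason
        self._event.set()

    def check(self) -> None:
        if self._event.is_set():
            raise AgentHalted(self.reason)

def run_workflow(steps, switch: KillSwitch, revoke_access) -> list[str]:
    """steps: list of (name, do, undo) callables. Returns names of completed steps."""
    completed = []
    try:
        for name, do, undo in steps:
            switch.check()  # terminate before starting the next step
            do()
            completed.append((name, undo))
    except AgentHalted:
        revoke_access()  # immediate access removal
        for _, undo in reversed(completed):
            undo()  # roll back completed steps, newest first
        raise
    return [name for name, _ in completed]

log = []
switch = KillSwitch()
steps = [
    ("update_record", lambda: log.append("update"), lambda: log.append("undo update")),
    # Simulates a monitor firing the switch while this step runs.
    ("draft_report", lambda: (log.append("draft"), switch.trigger("anomalous API spike")),
     lambda: log.append("undo draft")),
    ("send_email", lambda: log.append("email"), lambda: log.append("undo email")),
]
try:
    run_workflow(steps, switch, revoke_access=lambda: log.append("revoked"))
except AgentHalted as exc:
    print("halted:", exc)  # halted: anomalous API spike
print(log)  # ['update', 'draft', 'revoked', 'undo draft', 'undo update']
```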

7. Apply Strong Agentic AI Data Governance Controls

Define strict data boundaries for all agent interactions:

- What data may an agent access?

- Where can data be stored or processed?

- What data can be generated, shared, or transformed?

- PII masking and redaction

- Context-level data filtering

This prevents data leakage, compliance violations, and model misuse.
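PII masking is often applied as a filter on any text before it reaches an agent or leaves it. The regex-based sketch below is illustrative only: the three patterns are assumptions that cover a few US-style formats, and production PII/PHI detection needs much broader coverage (names, addresses, medical identifiers) than simple regular expressions provide.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}

def redact(text: str) -> str:
    """Replace each PII match with a typed placeholder before text reaches an agent."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

print(redact("Contact jane.doe@example.com or 555-123-4567; SSN 123-45-6789."))
# Contact [EMAIL] or [PHONE]; SSN [SSN].
```

Typed placeholders such as `[EMAIL]` keep the text useful for the agent's reasoning while withholding the underlying value.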

8. Establish Human-in-the-Loop (HITL) Oversight

High-risk workflows must require human intervention. Human checkpoints include:

- Approval steps

- Confirmation of sensitive actions

- Escalation routing

- Final authority over irreversible operations

This keeps autonomous AI aligned with business and compliance requirements.
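A human-in-the-loop checkpoint can be modeled as a gate in front of execution: low-risk actions run directly, and high-risk ones are routed to an approver first. The sketch assumes a hypothetical policy where irreversible actions or payments above 1,000 need approval, and `ask_human` stands in for a real approval queue or ticketing integration.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class ProposedAction:
    name: str
    irreversible: bool
    amount: float = 0.0

APPROVAL_LIMIT = 1_000.0  # hypothetical threshold for payments

def needs_approval(action: ProposedAction) -> bool:
    return action.irreversible or action.amount > APPROVAL_LIMIT

def execute(action: ProposedAction,
            run: Callable[[], str],
            ask_human: Callable[[ProposedAction], bool]) -> str:
    """Run low-risk actions directly; route high-risk ones to a human approver."""
    if needs_approval(action) and not ask_human(action):
        return f"{action.name}: rejected by reviewer"
    return run()

def deny_all(action: ProposedAction) -> bool:
    return False  # stand-in for an approval queue that rejects everything

print(execute(ProposedAction("refund", irreversible=False, amount=50), lambda: "refund: done", deny_all))
# refund: done
print(execute(ProposedAction("delete_account", irreversible=True), lambda: "deleted", deny_all))
# delete_account: rejected by reviewer
```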

9. Maintain Complete Audit Trails

Every agent interaction must be fully traceable. Log:

- Timestamps and session IDs

- System and data accesses

- Workflow steps taken

- Privilege elevation attempts

- API calls and model outputs

Auditability is essential for compliance, security, and forensic analysis.
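An audit trail is most useful when each entry is structured (timestamp, agent, session, event, details) and tamper-evident. The sketch below uses hash chaining, one common option in which every entry stores the SHA-256 hash of the previous one, so altering or deleting an entry breaks verification. The `AuditLog` class and event names are hypothetical, and a production system would also write entries to append-only storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

class AuditLog:
    """Append-only, hash-chained audit trail (each entry commits to the previous one)."""

    def __init__(self):
        self.entries = []
        self._last_hash = GENESIS

    def record(self, agent_id: str, session_id: str, event: str, **details) -> dict:
        entry = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "agent_id": agent_id,
            "session_id": session_id,
            "event": event,  # e.g. "api_call", "data_access", "privilege_request"
            "details": details,
            "prev_hash": self._last_hash,
        }
        payload = json.dumps(entry, sort_keys=True)
        entry["hash"] = hashlib.sha256(payload.encode()).hexdigest()
        self._last_hash = entry["hash"]
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; False means an entry was altered or removed."""
        prev = GENESIS
        for e in self.entries:
            body = {k: v for k, v in e.items() if k != "hash"}
            if body["prev_hash"] != prev:
                return False
            if hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest() != e["hash"]:
                return False
            prev = e["hash"]
        return True

log = AuditLog()
log.record("agent-7", "sess-01", "data_access", resource="crm/contacts", rows=20)
log.record("agent-7", "sess-01", "privilege_request", scope="admin", granted=False)
print(log.verify())  # True
log.entries[0]["details"]["rows"] = 2000  # simulate tampering
print(log.verify())  # False
```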

10. Align Policies With Global Compliance Frameworks

Agentic AI governance must map to established frameworks and regulations, including:

- SOC 2 (security & access controls)

- ISO 27001 (information security management)

- GDPR (data privacy & lawful processing)

- HIPAA (health data protection)

- EU AI Act (risk-tiered AI governance)

- NIST AI RMF (risk management framework)

Aligning policies ensures agent behavior meets legal and regulatory obligations.

Risk Mitigation Strategies for High-Autonomy AI Systems

Agentic AI can automate complex work, but it also introduces new risks. The following strategies help enterprises keep autonomous agents safe, controlled, and aligned with business rules.

1. Role-Based & Task-Based Privilege Segmentation: Give each agent only the permissions it needs for a specific job. Create narrow, task-focused roles so an agent can’t access systems or data beyond its purpose. This reduces the risk of accidental or harmful actions.

2. Real-Time Identity-Aware Governance: Tie every agent action back to a clear identity and authorization rule. This ensures you always know which user, system, or integration triggered the agent’s actions. It increases accountability and helps block unauthorized behavior as it happens.

3. Data Minimization: Limit the data an agent can access. Only provide the information required for each task; nothing more. This prevents unnecessary exposure of sensitive data and reduces the impact if something goes wrong.

4. Continuous Compliance Validation: Use automated checks to ensure agents always follow security and compliance rules. These checks look for incorrect permissions, policy violations, or agents that have more access than they should. This keeps your environment safe day-to-day, not just during audits.

5. Automated Alerting & Action Revocation: Set up automated alerts so that the moment an agent behaves suspiciously, the system can pause it or remove its access. This stops potential incidents before they spread and protects sensitive information.

6. Strict Vendor & SaaS Integration Governance: Apply your governance rules to external AI vendors and SaaS tools as well. Make sure third-party agents follow your identity, data, and access control policies. This prevents outside tools from becoming a security gap.

7. Multi-Layer Guardrails: Use multiple layers of protection such as input filtering, output checks, and workflow limits. These guardrails help keep agents on track and prevent unintended actions, even when tasks are complex.
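The layered guardrails described in item 7 can be sketched as independent checks wrapped around an agent's plan: an input filter, an allowlist applied to each planned action, and a per-task action budget. Everything here is a simplified assumption: the phrase list, tool allowlist, and 20-action budget are hypothetical, and phrase matching alone is a weak defense against prompt injection.

```python
from typing import Callable

MAX_ACTIONS_PER_TASK = 20                                  # workflow limit (hypothetical)
BLOCKED_INPUT_PHRASES = ("ignore previous instructions",)  # crude input filter
ALLOWED_TOOLS = {"search_kb", "draft_reply"}               # allowlist for planned actions

def check_input(prompt: str) -> None:
    if any(p in prompt.lower() for p in BLOCKED_INPUT_PHRASES):
        raise PermissionError("input guardrail: suspicious instruction")

def check_action(tool: str, actions_so_far: int) -> None:
    if tool not in ALLOWED_TOOLS:
        raise PermissionError(f"action guardrail: tool '{tool}' not allowed")
    if actions_so_far >= MAX_ACTIONS_PER_TASK:
        raise PermissionError("workflow guardrail: action budget exhausted")

def guarded_run(prompt: str, plan: Callable[[str], list[str]]) -> list[str]:
    """Apply input, action, and workflow-limit checks around an agent's plan."""
    check_input(prompt)
    executed = []
    for tool in plan(prompt):
        check_action(tool, len(executed))
        executed.append(tool)  # a real system would invoke the tool here
    return executed

print(guarded_run("Summarize ticket 12", lambda p: ["search_kb", "draft_reply"]))
# ['search_kb', 'draft_reply']
```

Because each layer fails independently, a prompt that slips past the input filter can still be stopped by the allowlist or the action budget.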

Hear from Jeremy Boerger, founder of Boerger Consulting and creator of the Pragmatic ITAM Method. He shares 20+ years of experience on SaaS governance, breaking down silos, and building IT organizations that are resilient, collaborative, and data-driven.

Conclusion

Agentic AI can unlock massive productivity and innovation, but without the right governance, it also amplifies risk. When AI agents can make decisions, trigger workflows, and touch sensitive data on their own, enterprises need more than traditional AI controls.

The most scalable way to manage agentic AI safely is to bring policy, identity, and continuous monitoring together under one governance layer.

CloudEagle.ai helps enterprises do exactly that by centralizing identity governance, enforcing least-privilege and JIT access, continuously monitoring agent activity across SaaS apps, and automating access reviews and approvals.

If your organization is planning to adopt or scale agentic AI, now is the time to build the governance foundations that protect your data, keep you compliant, and maintain trust.

Ready to govern agentic AI with confidence?

Schedule a demo with CloudEagle.ai and see how you can gain full visibility and control over AI agents across your SaaS stack.

FAQs

1. What makes agentic AI more risky than traditional AI models?

Agentic AI can take independent actions, trigger workflows, access systems, and make decisions without human oversight—creating new risks around autonomy, excessive permissions, and unpredictable behavior.

2. What governance controls are needed to manage autonomous AI agents?

Key controls include RBAC, JIT access, PAM, continuous monitoring, data governance rules, kill-switches, sandbox testing, activity logging, and lifecycle governance.

3. How do organizations prevent AI agents from taking harmful or unauthorized actions?

By enforcing least privilege, monitoring actions in real time, applying guardrails, restricting API permissions, and setting up policy-based action boundaries.

4. What are the best practices for safely deploying agentic AI in enterprises?

Implement sandbox testing, automated access reviews, privilege segmentation, continuous monitoring, centralized identity governance, and strong data controls.

5. How can businesses audit or track the actions of autonomous AI systems?

Use audit logs, privileged access monitoring, real-time behavioral monitoring, risk scoring, and automated compliance reporting.
